WatchGuard aims to alleviate the performance and security issues presented by the broad adoption of HTTPS with the M500 Unified Threat Management Appliance.

HTTPS has become the standard bearer for Web traffic, thanks to privacy concerns, highly publicized network breaches and increased public demand for heightened Web security.

While HTTPS does a great job of encrypting what used to be open Web traffic, the technology does have some significant implications for those looking to keep networks secure and protected from threats.

For example, many enterprises are leveraging unified threat management (UTM) appliances to prevent advanced persistent threats (APTs), viruses, data leakage and numerous other threats from compromising network security. However, HTTPS has the ability to hide traffic via encryption from those UTMs and, in turn, nullifies many of the security features of those devices.

That situation has forced appliance vendors to incorporate a mechanism that decrypts HTTPS traffic and examines the data payloads for problems. On the surface, that may sound like a solution to what should never have been a problem to begin with, but it has in fact created additional pain points for network managers.

Those pain points come in the form of throughput and latency, where a UTM now has to deal with encrypted traffic from hundreds or even thousands of users, straining application-specific ICs (ASICs) to the breaking point and severely degrading the performance of network connections. What’s more, the situation is only bound to get worse as more and more Websites adopt HTTPS and rely on the Secure Sockets Layer (SSL) protocol to keep data encrypted and secure from unauthorized decryption.

Simply put, encryption hampers a UTM’s ability to scan for viruses, spear-phishing attacks, APTs, SQL injection and data leakage, and reduces URL filtering capabilities.

WatchGuard Firebox M500 Tackles the Encryption Conundrum

WatchGuard Technologies, based in Seattle, has been a player in the enterprise security space for some 20 years and has developed numerous security solutions, appliances and devices to combat the ever-growing threats presented by connectivity to the world at large.

The company released the Firebox M500 at the end of November 2014 to address the ever-growing complexity that encryption has brought to enterprise security. While encryption has proven to be very beneficial for enterprise networks trying to protect privacy and prevent eavesdropping, it has also presented a dark side, where malware can be hidden within network traffic and only discovered at the endpoint, often too late.

The Firebox M500 pairs advanced processing power (in the form of multi-core Intel processors) with advanced heuristics to decrypt traffic and examine it for problems, without significantly impacting throughput or hampering latency. The M500 was designed from the outset to deal with SSL and open (clear) traffic using the same security technologies, bringing a cohesive approach to the multitude of security functions the device offers.

The Firebox M500 offers the following security services:

1. APT Blocker: Leverages a cloud-based service featuring a combination of sandboxing and full system emulation to detect and block APTs.

2. Application Control: Allows administrators to keep unproductive, inappropriate, and dangerous applications off limits from end users.

3. Intrusion Prevention Service (IPS): Offers in-line protection from malicious exploits, including buffer overflows, SQL injections and cross-site scripting attacks.

4. WebBlocker: Controls access via policies to sites that host objectionable material or pose network security risks.

5. Gateway AntiVirus (GAV): In-line scan of traffic on all major protocols to stop threats.

6. spamBlocker: Delivers continuous protection from unwanted and dangerous email.

7. Reputation-enabled defense: Uses cloud-based reputation lookup to promote safer Web surfing.

8. Data loss prevention: Inspects data in motion for corporate policy violations.

WatchGuard uses a subscription-based model that allows users to purchase features based on subscription and license terms. This model creates an opportunity for network administrators to pick and choose only the security services needed or roll out security services in a staggered fashion to ease deployment.

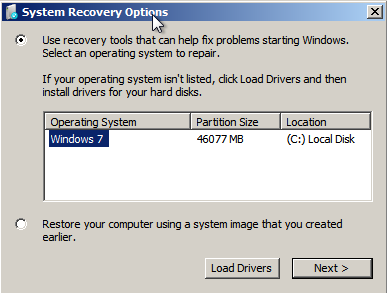

Installation and Setup

The Firebox M500 is housed in a 1U, red metal box that features six 1000/100/10 Ethernet ports, two USB ports, a console port and a pair of optionally configurable small-form-factor pluggable ports. Under the hood reside an Intel Pentium G3420 processor and 8GB of RAM, as well as the company’s OS, Fireware 11.9.4.

The device uses a “man-in-the-middle” methodology to handle HTTPS traffic, allowing it to decrypt and encrypt traffic destined for endpoints on the network.

That man-in-the-middle approach ensures that all HTTPS (or SSL certificate-based traffic) must pass through the device and become subject to the security algorithms employed. This, in turn, creates an environment where DLP, AV, APT protection and other services can function without hindrance.

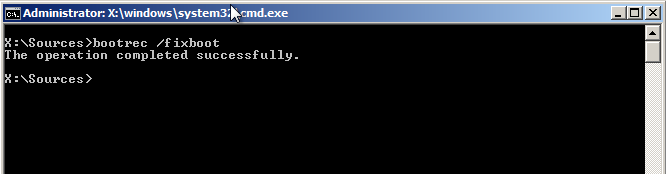

Initial deployment consists of little more than placing the M500 in an equipment rack and plugging in the appropriate cables. The device defaults to an open mode for outbound connections, allowing all outbound traffic so that administrators can quickly plug it in without much disruption.

On the other hand, inbound traffic will be blocked until policies are defined to handle that traffic. This can potentially cause some disruption to remote workers or external services until the device is configured.
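The default behavior described above, where outbound traffic passes and inbound traffic is dropped until a policy says otherwise, can be modeled in a few lines of Python. This is an illustrative sketch only, not WatchGuard's policy engine or Fireware configuration syntax; the `Policy` class, its fields and the `evaluate` function are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Policy:
    """Hypothetical inbound allow rule (not a Fireware object)."""
    name: str
    port: int
    protocol: str = "tcp"


def evaluate(direction: str, port: int, protocol: str,
             inbound_policies: Sequence[Policy]) -> str:
    """Return "allow" or "deny" for a single connection attempt."""
    if direction == "outbound":
        # Open mode: all outbound traffic is allowed by default.
        return "allow"
    if direction != "inbound":
        raise ValueError(f"unknown direction: {direction!r}")
    # Inbound traffic is allowed only if some defined policy matches it.
    for policy in inbound_policies:
        if policy.port == port and policy.protocol == protocol:
            return "allow"
    # No matching policy: inbound traffic is blocked.
    return "deny"


# A freshly racked device has no inbound policies defined yet.
fresh = []
print(evaluate("outbound", 443, "tcp", fresh))
print(evaluate("inbound", 443, "tcp", fresh))

# After an administrator defines a (hypothetical) policy for remote workers.
configured = [Policy(name="remote-access", port=443)]
print(evaluate("inbound", 443, "tcp", configured))
```

The sketch makes the deployment risk concrete: until the `configured` list exists, every inbound connection from remote workers or external services falls through to the final `deny`.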

A configuration wizard guides administrators through the steps to set up the basic security features. While the wizard does a decent job of preventing administrators from disrupting connectivity, there are settings that one must be keenly aware of to maintain efficient performance. The wizard also handles some of the more mundane housekeeping tasks, such as installing licenses, subscriptions, network configurations and so on.

To truly appreciate how the Firebox M500 works and to fully comprehend the complexity of the appliance, one must delve into policy creation and definition. Almost everything that the device does is driven by definable policies that require administrators to carefully consider what traffic should be allowed, should be examined and should be blocked.

Defining policies ranges from the simplistic to the very complex. For example, an administrator can define a policy that blocks Web traffic based on content in a few simple steps. All it takes is clicking on policy creation, selecting a set of predefined rules, applying those rules to users, ports and so on, and then checking off the types of content that are not allowed (such as botnets, keyloggers, malicious links, fraud and phishing).

Policy definition can also be hideously complex, such as with HTTPS proxy definition and the associated certificate management. Although the device steps you through much of the configuration, administrators will have to be keenly aware of exceptions that must be white-listed (depending on their business environment), privacy concerns and a plethora of other issues.
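To illustrate what white-listing exceptions for an HTTPS proxy involves, the sketch below decides whether a given hostname should be decrypted and inspected or passed through untouched. It is a simplified model under stated assumptions, not the M500's actual proxy logic: the `BYPASS_PATTERNS` list, the domain names in it and the `should_inspect` function are all hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical exception list: sites an organization might choose not to
# decrypt, for example for privacy reasons. These domains are placeholders.
BYPASS_PATTERNS = [
    "*.examplebank.com",
    "payroll.example.com",
]


def should_inspect(hostname: str, bypass_patterns=BYPASS_PATTERNS) -> bool:
    """Return True if HTTPS traffic to hostname should be decrypted and scanned."""
    # Normalize case and strip a trailing dot so comparisons are consistent.
    host = hostname.lower().rstrip(".")
    # Any match against the exception list means the traffic is bypassed.
    return not any(fnmatchcase(host, pattern) for pattern in bypass_patterns)


for name in ("online.examplebank.com", "payroll.example.com", "www.example.org"):
    action = "inspect" if should_inspect(name) else "bypass"
    print(f"{name}: {action}")
```

Note that a pattern such as `*.examplebank.com` does not match the bare domain `examplebank.com` in this sketch, which is the kind of detail an administrator has to get right when building a real exception list.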

That said, complexity is inherent when it comes to controlling that type of traffic, and introducing simplicity would more than likely unintentionally create either false positives or limit full protection.

Naturally, performance is a key concern when dealing with encrypted traffic, and WatchGuard has addressed that concern by leveraging Intel processors, instead of creating custom ASICs to handle the traffic.

Independent performance testing by Miercom Labs shows that WatchGuard made the right choice by choosing CISC-based CPUs instead of taking a RISC approach. Miercom's testing report shows that the M500 is capable of 5,204 Mbps of throughput with firewall services enabled.

For environments that will deploy multiple Firebox M500s across different locations, WatchGuard offers the WatchGuard System Manager, which uses templates for centralized management and offers the ability to distribute policies to multiple devices. That eliminates having to manage each M500 individually, beyond initially plugging in the device.

WatchGuard offers a deployment tool called RapidDeploy, which provides the ability to install a preconfigured/predefined image and associated policies on a freshly deployed device. Simply put, all anyone has to do is plug in the appliance and ensure there is connectivity, and an administrator located anywhere can set up the device in a matter of moments. That proves to be an excellent capability for those managing branch offices, remote workers, multiple sites or distributed enterprises.

The M500 starts at an MSRP of $6,190 (including one year of security services in a discounted bundle). APT services for a year add another $1,375, while a year's worth of DLP services adds another $665. The company offers significant discounts for multiyear subscriptions and also supports a vibrant reseller channel.
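As a quick worked example, the first-year list price with both add-on services can be tallied from the figures above. The sketch assumes that the APT and DLP subscriptions are purchased on top of the base bundle at the quoted one-year prices and ignores multiyear and reseller discounts, which the figures above do not quantify; the function and constant names are illustrative.

```python
# List prices in US dollars, taken from the figures quoted above.
BASE_MSRP = 6190      # appliance plus one year of bundled security services
APT_PER_YEAR = 1375   # one year of APT services
DLP_PER_YEAR = 665    # one year of DLP services


def first_year_cost(with_apt: bool = True, with_dlp: bool = True) -> int:
    """Sum the first-year list price for the chosen add-on services."""
    total = BASE_MSRP
    if with_apt:
        total += APT_PER_YEAR
    if with_dlp:
        total += DLP_PER_YEAR
    return total


print(first_year_cost())                 # 8230
print(first_year_cost(with_dlp=False))   # 7565
```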

While the WatchGuard Firebox M500 may not be the easiest security appliance to deploy, it does offer all the features almost any midsize enterprise would want. It also offers a solution to one of the most critical pain points faced by network administrators today: keeping systems secure, even when dealing with encrypted traffic.