The PowerShell script discussed here allows you to create new Active Directory (AD) users, one of the most common tasks that IT admins tend to automate when it comes to employee provisioning. The reason is that this function meets all of the criteria necessary for automation. It’s a task that requires a lot of different, error-prone steps, such as ensuring user accounts meet a particular standard, creating a home folder in a certain way, creating a mailbox, and so on.

In addition, it’s a task that admins are going to repeat many times, since an organization continually hires new employees. I thought this was a great task to demonstrate typical steps that you might take when embarking on a new script.

To get started, I’m going to assume a few prerequisites. First, I’m going to presume you have at least PowerShell v4 and also have the Active Directory module installed. The module comes as part of the Remote Server Administration Tools pack. I’m also going to assume that the machine you’ll be working on is part of a domain, that you have the required permissions to create an Active Directory user account, and that the user’s home folder resides on a file server somewhere.

Because each organization’s process is going to be a little different, I’m also going to keep this as generic as possible by first demonstrating how to create an Active Directory user account based on a company standard and create their home folder somewhere. This is probably the minimum that you’ll need to do.

However, once you get the basics of script building, you’ll see just how easy it is to script other things (adding something to an HR application, creating an Exchange mailbox, etc.).

Planning employee provisioning

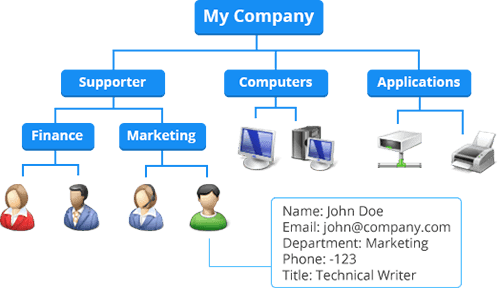

Let’s first break down each component of the goal I want to accomplish and how I intend to make it happen. By the end of script execution, I want to end up with a single AD user account and a separate folder created on a file server with appropriate permissions. To do this, I’ll need to define what exactly each of these two tasks looks like.

For example, here are a few questions to ask yourself when creating the AD user account and its home folder.

- In what organizational unit should it go?
- In what (if any) groups should it go?
  - Is there a standard user group in which all user accounts go?
  - Are there different groups in which a user account might go, depending on their department?
- What attributes need to be set at creation time?
- What should the username be? Does it have to follow some company standard?
- Where should the folder be created?
- What should the name of the folder be?
- What kind of permissions should the folder have?

Now that we have some rough intentions outlined, let’s answer each question before coding. Don’t worry. We’ll get to the code in a minute.

- In what organizational unit should it go? Corporate Users
- In what (if any) groups should it go?
  - Is there a standard user group in which all user accounts go? XYZCompany
  - Are there different groups in which a user account might go, depending on their department? Match group name with the department.
- What attributes need to be set at creation time?
  - First Name
  - Last Name
  - Title
  - Department
  - Initials
  - Change Password at Logon
- What should the username be? Does it have to follow some company standard? It should be first initial, last name. If that username is already taken, it should be first initial, middle initial, and last name. If that’s taken, error out.
- Where should the folder be created? \\MEMBERSRV1\Users
- What should the name of the folder be? AD username

Creating new AD users with PowerShell

We’ll first create the script and call it New-Employee.ps1. Because a lot of information will change for each employee, we need to create some parameters and dynamically pass them to the script whenever it is run. I’ll create the following variables as parameters:

- First Name
- Last Name
- Middle Initial
- Location (for the OU)
- Department
- Title
- Default Group
- Default Password
- Base Home Folder Path

    param (
        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$FirstName,

        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$LastName,

        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$MiddleInitial,

        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$Department,

        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$Title,

        [Parameter(ValueFromPipelineByPropertyName)]
        [ValidateNotNullOrEmpty()]
        [string]$Location = 'OU=Corporate Users',

        [Parameter()]
        [ValidateNotNullOrEmpty()]
        [string]$DefaultGroup = 'XYZCompany',

        [Parameter()]
        [ValidateNotNullOrEmpty()]
        [string]$DefaultPassword = 'p@$$w0rd12345', ## Don't do this...really

        [Parameter()]
        [ValidateScript({ Test-Path -Path $_ })]
        [string]$BaseHomeFolderPath = '\\MEMBERSRV1\Users'
    )

Next, I'll need to figure out what the username will be based on our defined company standard and verify the home folder doesn't exist yet.

    ## Find the distinguished name of the domain the current computer is a part of.
    $DomainDn = (Get-ADDomain).DistinguishedName

    ## Define the 'standard' username (first initial and last name)
    $Username = "$($FirstName.Substring(0, 1))$LastName"

    #region Check if an existing user already has the first initial/last name username taken
    Write-Verbose -Message "Checking if [$($Username)] is available"
    ## Compare against SamAccountName, since that is the logon name this script assigns
    if (Get-ADUser -Filter "SamAccountName -eq '$Username'")
    {
        Write-Warning -Message "The username [$($Username)] is not available. Checking alternate..."
        ## If so, check to see if the first initial/middle initial/last name is taken.
        $Username = "$($FirstName.Substring(0, 1))$MiddleInitial$LastName"
        if (Get-ADUser -Filter "SamAccountName -eq '$Username'")
        {
            throw "No acceptable username schema could be created"
        }
        else
        {
            Write-Verbose -Message "The alternate username [$($Username)] is available."
        }
    }
    else
    {
        Write-Verbose -Message "The username [$($Username)] is available"
    }
    #endregion

This part is nice because it will automatically figure out the username to use.
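To make the naming rule easier to see in isolation, here is a minimal sketch that only builds the candidate usernames in order of preference, without touching Active Directory. The function name Get-UsernameCandidate is my own invention for illustration and is not part of New-Employee.ps1.

```powershell
## Hypothetical helper: returns the usernames to try, in order of preference.
function Get-UsernameCandidate {
    [CmdletBinding()]
    param (
        [Parameter(Mandatory)]
        [ValidateNotNullOrEmpty()]
        [string]$FirstName,

        [Parameter(Mandatory)]
        [ValidateNotNullOrEmpty()]
        [string]$LastName,

        [Parameter()]
        [string]$MiddleInitial
    )

    $firstInitial = $FirstName.Substring(0, 1)

    ## Preferred form: first initial + last name
    "$firstInitial$LastName"

    ## Fallback form: first initial + middle initial + last name
    if ($MiddleInitial) {
        "$firstInitial$MiddleInitial$LastName"
    }
}

## Outputs JDoe, then JQDoe
Get-UsernameCandidate -FirstName 'Jane' -MiddleInitial 'Q' -LastName 'Doe'
```

Each candidate could then be checked against AD in order with the same Get-ADUser test the script uses, stopping at the first one that is free.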

![Script output]()

Next, we’ll ensure the OU and group that we’ll be using exist.

    #region Ensure the OU the user's going into exists
    $ouDN = "$Location,$DomainDn"
    if (-not (Get-ADOrganizationalUnit -Filter "DistinguishedName -eq '$ouDN'"))
    {
        throw "The user OU [$($ouDN)] does not exist. Can't add a user there"
    }
    #endregion

I'll also verify that the groups exist.

    #region Ensure the group the user's going into exists
    if (-not (Get-ADGroup -Filter "Name -eq '$DefaultGroup'"))
    {
        throw "The group [$($DefaultGroup)] does not exist. Can't add the user into this group."
    }
    if (-not (Get-ADGroup -Filter "Name -eq '$Department'"))
    {
        throw "The group [$($Department)] does not exist. Can't add the user to this group."
    }
    #endregion

And for the end of the validation phase, ensure the home folder doesn't already exist.

    #region Ensure the home folder to create doesn't already exist
    $homeFolderPath = "$BaseHomeFolderPath\$Username"
    if (Test-Path -Path $homeFolderPath) {
        throw "The home folder path [$homeFolderPath] already exists."
    }
    #endregion

We can now create the user account per company standards, add it to the group, and create the home folder.

    #region Create the new user
    $NewUserParams = @{
        'UserPrincipalName'     = $Username
        'Name'                  = $Username
        'GivenName'             = $FirstName
        'Surname'               = $LastName
        'Title'                 = $Title
        'Department'            = $Department
        'SamAccountName'        = $Username
        'AccountPassword'       = (ConvertTo-SecureString $DefaultPassword -AsPlainText -Force)
        'Enabled'               = $true
        'Initials'              = $MiddleInitial
        'Path'                  = "$Location,$DomainDn"
        'ChangePasswordAtLogon' = $true
    }
    Write-Verbose -Message "Creating the new user account [$($Username)] in OU [$($ouDN)]"
    New-ADUser @NewUserParams
    #endregion

    #region Add user to groups
    Write-Verbose -Message "Adding the user account [$($Username)] to the group [$($DefaultGroup)]"
    Add-ADGroupMember -Members $Username -Identity $DefaultGroup
    Write-Verbose -Message "Adding the user account [$($Username)] to the group [$($Department)]"
    Add-ADGroupMember -Members $Username -Identity $Department
    #endregion

    #region Create the home folder and set permissions
    Write-Verbose -Message "Creating the home folder [$homeFolderPath]..."
    $null = New-Item -Path $homeFolderPath -ItemType Directory
    #endregion
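The script creates the folder, but the planning questions never settled what permissions it should get. As one possible approach, here is a rough sketch that grants an account access to a folder through Get-Acl and Set-Acl. The function name Grant-HomeFolderAccess, the default of Modify rights, and the choice to let the rule inherit to subfolders and files are all assumptions of mine, so adjust them to your own standard.

```powershell
## Hypothetical helper: adds an Allow rule for one account on one folder.
function Grant-HomeFolderAccess {
    [CmdletBinding()]
    param (
        [Parameter(Mandatory)]
        [ValidateScript({ Test-Path -Path $_ -PathType Container })]
        [string]$Path,

        ## Account name, typically in DOMAIN\username form
        [Parameter(Mandatory)]
        [ValidateNotNullOrEmpty()]
        [string]$Identity,

        ## Assumed default; the article does not define the required rights
        [Parameter()]
        [System.Security.AccessControl.FileSystemRights]$Rights = 'Modify'
    )

    ## Read the current ACL so existing entries are preserved
    $acl = Get-Acl -Path $Path

    ## Build a rule that applies to the folder, its subfolders, and its files
    $rule = New-Object -TypeName System.Security.AccessControl.FileSystemAccessRule -ArgumentList @(
        $Identity,
        $Rights,
        'ContainerInherit,ObjectInherit',
        'None',
        'Allow'
    )

    $acl.AddAccessRule($rule)
    Set-Acl -Path $Path -AclObject $acl
}

## Example call from inside New-Employee.ps1 (needs the AD module and an existing account):
## $netBiosName = (Get-ADDomain).NetBIOSName
## Grant-HomeFolderAccess -Path $homeFolderPath -Identity "$netBiosName\$Username"
```

New-Object is used instead of the ::new() syntax so the sketch stays within the PowerShell v4 baseline assumed earlier.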

Notice throughout this sample script that I was breaking things down into regions. Using regions is an excellent way to separate out high-level tasks in a large script.

Now that you’ve done all the hard work and defined the rules, the rest is easy. You can now create as many users as you’d like that all follow the exact same pattern.
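As a sketch of what creating many users could look like, the snippet below turns rows of employee data into parameter sets and splats each one onto the script. The sample names and departments are made up, and in practice the rows would more likely come from Import-Csv against a file supplied by HR. The actual call is commented out because it needs a domain-joined machine with New-Employee.ps1 in the current folder.

```powershell
## Made-up sample data; column names match the script's parameter names
$employees = @'
FirstName,MiddleInitial,LastName,Department,Title
Jane,Q,Doe,Accounting,Accountant
John,A,Smith,Engineering,Developer
'@ | ConvertFrom-Csv

## Build one hashtable of parameters per employee so it can be splatted
$paramSets = foreach ($employee in $employees) {
    @{
        FirstName     = $employee.FirstName
        MiddleInitial = $employee.MiddleInitial
        LastName      = $employee.LastName
        Department    = $employee.Department
        Title         = $employee.Title
    }
}

foreach ($params in $paramSets) {
    Write-Verbose -Message "Provisioning [$($params.FirstName) $($params.LastName)]"
    ## Uncomment to actually create the account:
    ## & .\New-Employee.ps1 @params -Verbose
}
```

I call the script once per row rather than piping all rows into it. The script as written has no process block, so as far as I can tell it would not handle multiple pipeline objects one at a time.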
