Unlimited cellular data is hard to come by. Keep an eye on how much data you’re using to avoid paying overage fees or having your speed throttled to a trickle for the rest of your billing cycle. In an ideal world, you wouldn’t have to micromanage any of this. But we don’t all live in that world yet, and fortunately there are many ways to reduce the data your phone uses.

How to Check Your Data Usage

Before anything else, check your data usage. If you don’t know what your typical usage looks like, you won’t know whether you need to trim your habits slightly or change them drastically.

You can get a rough estimate from your carrier’s data usage calculator, but the best approach is to check your actual usage over the past few months.

The easiest way to check past data usage is to log into your cellular provider’s web portal (or check your paper bills) and look at your usage for each billing period. If you’re routinely coming in well under your data cap, you may want to contact your provider and see if you can switch to a less expensive plan. If you’re coming close to the cap or exceeding it, you’ll definitely want to keep reading.
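If you jot down the monthly totals from a few past bills, comparing them against your cap is simple arithmetic. The Python sketch below uses hypothetical usage figures and illustrative thresholds (half the cap and 90% of the cap); these cutoffs are assumptions, not carrier rules, so adjust them to taste.

```python
from statistics import mean

# Illustrative thresholds (assumptions, not carrier policy).
LOW_FRACTION = 0.5   # peak month at or below half the cap: a smaller plan may fit
HIGH_FRACTION = 0.9  # peak month at or above 90% of the cap: usage needs trimming


def classify_usage(monthly_gb: list[float], cap_gb: float) -> str:
    """Compare past monthly usage (GB per billing cycle) against a data cap."""
    if not monthly_gb or cap_gb <= 0:
        raise ValueError("need at least one month of usage and a positive cap")
    peak = max(monthly_gb)  # plan for your heaviest month, not your average one
    if peak <= LOW_FRACTION * cap_gb:
        return "well under cap: ask about a cheaper plan"
    if peak >= HIGH_FRACTION * cap_gb:
        return "near or over cap: cut back"
    return "within cap"


if __name__ == "__main__":
    history = [1.2, 1.8, 1.5]  # hypothetical GB totals from three past bills
    print(f"average {mean(history):.2f} GB/month:", classify_usage(history, cap_gb=5.0))
```

Judging by the peak month rather than the average is a deliberate choice: a plan that only covers your typical month will still cost you in your heaviest one.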

You can also check recent cellular data usage on your iPhone. Head to Settings > Cellular. Scroll down and you’ll see an amount of data displayed under “Cellular Data Usage” for the “Current Period.”

This screen is very confusing, so don’t panic if you see a very high number! This period doesn’t automatically reset every month, so the data usage you see displayed here may be a total from many months. This amount only resets when you scroll to the bottom of this screen and tap the “Reset Statistics” option. Scroll down and you’ll see when you last reset the statistics.
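Because the “Current Period” figure can span many months, dividing it by the time since the last reset gives a more useful monthly average. A minimal sketch, assuming you read the total and the last-reset date off that screen; the function name and the 30.44-day average month are illustrative choices.

```python
from datetime import date

AVG_DAYS_PER_MONTH = 365.25 / 12  # about 30.44 days; an approximation


def monthly_average_gb(total_gb: float, last_reset: date, today: date) -> float:
    """Spread the 'Current Period' total evenly over the time since the last reset."""
    days = (today - last_reset).days
    if days <= 0:
        raise ValueError("today must be after the last reset date")
    months = days / AVG_DAYS_PER_MONTH
    return total_gb / months


if __name__ == "__main__":
    # Hypothetical reading: 24 GB shown, statistics last reset a year ago.
    avg = monthly_average_gb(24.0, last_reset=date(2017, 1, 1), today=date(2018, 1, 1))
    print(f"roughly {avg:.1f} GB per month")
```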

If you want this screen to show a running total for your current cellular billing period, you’ll need to visit this screen on the day your new billing period opens every month and reset the statistics that day. There’s no way to have it automatically reset on a schedule every month. Yes, it’s a very inconvenient design.
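To know which day to reset, you need the date your next billing period opens. This sketch uses a hypothetical helper, `next_reset_date`, and assumes that when your billing day doesn’t exist in a short month (for example, the 31st in February), the period opens on that month’s last day; your carrier may handle this differently.

```python
import calendar
from datetime import date


def next_reset_date(today: date, billing_day: int) -> date:
    """Return the next date (today or later) on which a new billing period opens.

    Assumption: if billing_day doesn't exist in a month (e.g. the 31st in
    February), the period opens on that month's last day instead.
    """
    if not 1 <= billing_day <= 31:
        raise ValueError("billing_day must be between 1 and 31")

    def period_start(year: int, month: int) -> date:
        last_day = calendar.monthrange(year, month)[1]  # number of days in that month
        return date(year, month, min(billing_day, last_day))

    candidate = period_start(today.year, today.month)
    if candidate >= today:
        return candidate
    # This month's start has already passed, so roll over to next month (and year).
    if today.month == 12:
        return period_start(today.year + 1, 1)
    return period_start(today.year, today.month + 1)


if __name__ == "__main__":
    print(next_reset_date(date(2018, 1, 20), billing_day=15))
```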

How to Keep Your Data Use in Check

So now that you know how much you’re using, you probably want to know how to make that number smaller. Here are a few tips for restricting your data usage on iOS.

Monitor and Restrict Data Usage, App by App

The Settings > Cellular screen also shows how much cellular data each app has used since you last reset the statistics. This tells you exactly which apps are using that data, either while you’re using them or in the background. Be sure to scroll to the bottom to see the amount of data used by the “System Services” built into iOS.
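Once you’ve noted the per-app figures, ranking them shows where to focus first. The function name, app names, and megabyte values below are made-up examples, not real measurements.

```python
def top_data_users(usage_mb: dict[str, float], n: int = 3) -> list[tuple[str, float, float]]:
    """Return (app, MB, percent of total) for the n heaviest apps, largest first."""
    total = sum(usage_mb.values())
    if total == 0:
        return []
    ranked = sorted(usage_mb.items(), key=lambda item: item[1], reverse=True)
    return [(app, mb, 100 * mb / total) for app, mb in ranked[:n]]


if __name__ == "__main__":
    # Made-up figures of the kind shown on the Settings > Cellular screen.
    usage = {"Safari": 850.0, "Podcasts": 1200.0, "System Services": 300.0, "Mail": 150.0}
    for app, mb, share in top_data_users(usage, n=2):
        print(f"{app}: {mb:.0f} MB ({share:.0f}% of total)")
```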

Many of those apps have their own built-in settings to restrict data usage, so open them up and see what their settings offer.

For example, you can prevent the App Store from automatically downloading content and updates while your iPhone is on cellular data, forcing it to wait until you’re connected to a Wi-Fi network. To do this, head to Settings > iTunes & App Store and disable the “Use Cellular Data” option.

If you use the built-in Podcasts app, you can tell it to only download new episodes on Wi-Fi. Head to Settings > Podcasts and enable the “Only Download on Wi-Fi” option.

Many other apps (like Facebook) have their own options for minimizing what they do with cellular data and waiting for Wi-Fi networks. To find these options, you’ll generally need to open the specific app you want to configure, find its settings screen, and look for options that help you control when the app uses data.

If an app doesn’t have those settings, you can restrict its data usage from the Settings > Cellular screen. Just flip the switch next to the app. Apps you disable here can still use Wi-Fi networks, but not cellular data. If you open such an app while you only have a cellular connection, it will behave as if it’s offline.

You’ll also see how much data is used by “Wi-Fi Assist” at the bottom of the Cellular screen. This feature makes your iPhone switch to cellular data when the Wi-Fi network you’re connected to isn’t working well. If you have a limited data plan, Wi-Fi Assist could quietly eat through your allowance. You can disable Wi-Fi Assist from this screen if you like.

Disable Background App Refresh

Since iOS 7, Apple has allowed apps to automatically update and download content in the background. This feature is convenient, but can harm battery life and cause apps to use cellular data in the background, even while you’re not actively using them. Disable background app refresh and an app will only use data when you open it, not in the background.

To control which apps can do this, head to Settings > General > Background App Refresh. If you don’t want an app refreshing in the background, disable the toggle next to it. If you don’t want any apps using data in the background, turn off the “Background App Refresh” switch at the top of the screen.

Disabling push notifications can also save a little data, although individual push notifications are tiny, so the savings will be small.

Disable Mail, Contacts, and Calendar Sync

By default, your iPhone automatically fetches new emails, contacts, and calendar events from the internet. If you use a Google account, for example, your phone regularly checks Google’s servers for new information.

If you’d rather check your email on your own schedule, you can. Head to Settings > Mail > Accounts > Fetch New Data. You can adjust options here to get new emails and other data “manually.” Your phone won’t download new emails until you open the Mail app.

Cache Data Offline Whenever You Can

Prepare ahead of time, and you won’t need to use as much data. For example, rather than streaming music in Spotify (or another music service), use its built-in offline feature to download music for offline listening. Rather than streaming podcasts, download them over Wi-Fi before you leave home. If you have Amazon Prime or YouTube Red, you can download videos from Amazon or YouTube to your phone and watch them offline.
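To get a feel for how much streaming costs, you can convert an audio bitrate into data per hour: kilobits per second × 3,600 seconds ÷ 8 bits per byte ÷ 1,000 gives megabytes. The 160 kbps bitrate and the commute schedule in this sketch are assumed example values, not figures quoted for any particular service.

```python
def streaming_mb(bitrate_kbps: float, hours: float) -> float:
    """Approximate data used by streaming at a constant bitrate.

    kilobits/s * seconds -> kilobits; / 8 -> kilobytes; / 1000 -> megabytes (decimal).
    Ignores protocol overhead, so real usage runs somewhat higher.
    """
    return bitrate_kbps * hours * 3600 / 8 / 1000


if __name__ == "__main__":
    # Assumed example: a 160 kbps stream for a 1-hour commute, 20 workdays a month.
    per_hour = streaming_mb(160, 1)
    print(f"{per_hour:.0f} MB per hour, about {per_hour * 20 / 1000:.2f} GB a month")
```

Downloading the same music over Wi-Fi beforehand takes that entire amount off your cellular bill.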

If you need maps, tell Google Maps to save maps of your local area for offline use. In many cases it can even provide navigation directions offline, so you don’t need to download map data on the go. Think about what you need to do on your phone and figure out whether there’s a way to download the relevant data ahead of time.

Disable Cellular Data Completely

For an extreme solution, head to the Cellular screen and toggle the Cellular Data switch at the top to Off. You won’t be able to use cellular data again until you re-enable it. This may be a good solution if you rarely need cellular data, or if you’re nearing the end of your billing cycle and want to avoid potential overage charges.
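If you’re unsure whether it’s time to switch cellular data off, a simple linear projection of your usage so far can help. This sketch assumes you use data at a steady rate through the cycle, which real usage rarely does, and the function names are hypothetical.

```python
def projected_cycle_gb(used_gb: float, days_elapsed: int, cycle_days: int) -> float:
    """Extrapolate usage so far to the whole billing cycle, assuming a steady rate."""
    if not 0 < days_elapsed <= cycle_days:
        raise ValueError("days_elapsed must be between 1 and cycle_days")
    return used_gb * cycle_days / days_elapsed


def should_pause_cellular(used_gb: float, days_elapsed: int, cycle_days: int, cap_gb: float) -> bool:
    """True if the steady-rate projection would exceed the data cap."""
    return projected_cycle_gb(used_gb, days_elapsed, cycle_days) > cap_gb


if __name__ == "__main__":
    # Hypothetical: 4 GB used 20 days into a 30-day cycle with a 5 GB cap.
    print(projected_cycle_gb(4.0, 20, 30), should_pause_cellular(4.0, 20, 30, 5.0))
```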

You can also disable cellular data while roaming from here. Tap “Cellular Data Options” and you can choose to disable “Data Roaming”, if you like. Your iPhone won’t use data on potentially costly roaming networks when you’re traveling, and will only use data when connected to your carrier’s own network.

You don’t have to follow all of these tips, but each of them can help you stretch your data allowance. Cut out wasted data and you can spend the rest on things you actually care about.

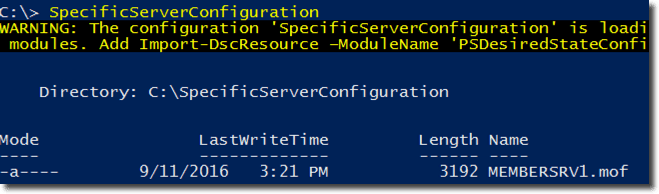

The composite configuration applied

The composite configuration applied

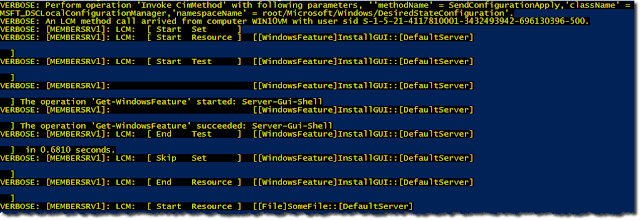

Azure MFA adapter

Azure MFA adapter