This guide describes the Web User Interface (WUI) of the KEMP LoadMaster and explains in detail how to use it to configure the various features of the LoadMaster. The menu options available in your LoadMaster may vary from the ones described in this document, because the features available in a LoadMaster depend on what license is in place.

KEMP Technologies products optimize web and application infrastructure as defined by high availability, high performance, flexible scalability, security and ease of management. KEMP Technologies products minimize the total cost of ownership for web infrastructure, while enabling flexible and comprehensive deployment options.

The Home menu option displays the home page, which presents a list of basic information regarding the LoadMaster.

From this point onwards, the headings in this document generally correspond to the options in the main menu on the left of the LoadMaster WUI.

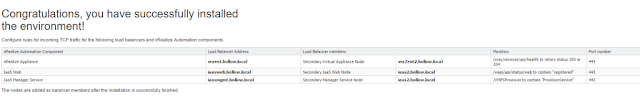

Figure 3‑1: Add a new Virtual Service screen.

Here the Virtual IP (VIP) address, port, protocol and name are defined. The VIP address, name and port are manually entered into the text boxes and the protocol is selected from the drop-down list.

A drop-down list is also available from which you can select a template to configure the Virtual Service parameters, such as port and protocol.

For the LoadMaster Exchange appliance there is a maximum limit of thirteen (13) Virtual Services that may be configured.

View/Modify (Existing HTTP Service)


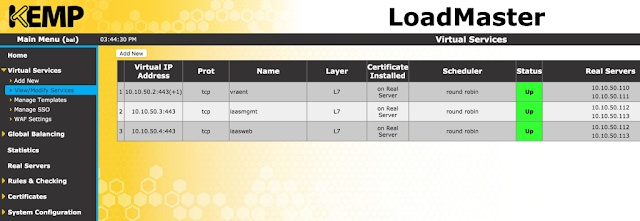

Figure 3‑2: Virtual Services screen

This screen displays a list of Virtual Services on the LoadMaster, summarizing the main properties of each and giving the options to modify or delete services, or create a new service.

CAUTION

Deleting is permanent; there is no UNDO feature. Use with care.

Each configured Virtual Service may be changed by clicking the Modify button or deleted by clicking the Delete button.

The Virtual Service status may be one of the following:

- Up: At least one Real Server is available.

- Down: No Real Servers are available.

- Sorry: All Real Servers are down and traffic is routed to a separately configured Sorry Server that is not part of the Real Server set, with no health checking.

- Disabled: The service has been administratively disabled.

- Redirect: A fixed redirect response has been configured. Redirect Virtual Services can be created by using the Add a Port 80 Redirector VS option in the Advanced Properties section. For more information, refer to Section 3.6.

- Fail Message: A fixed error message has been configured. A fixed error message can be specified using the Not Available Redirection Handling options. Refer to Section 3.6 for more information.

- Unchecked: Health checking of the Real Servers has been disabled. All Real Servers are accessed and presumed UP.

- Security Down: The LoadMaster is unable to reach the Authentication Server and will prevent access to any Virtual Service that has Edge Security Pack (ESP) enabled.

- WAF Misconfigured: If the WAF for a particular Virtual Service is misconfigured, for example if there is an issue with a rule file, the status changes to WAF Misconfigured and turns red. While the Virtual Service is in this state, all traffic is blocked. If required, AFP can be disabled for that Virtual Service to stop the traffic from being blocked while you troubleshoot the problem.

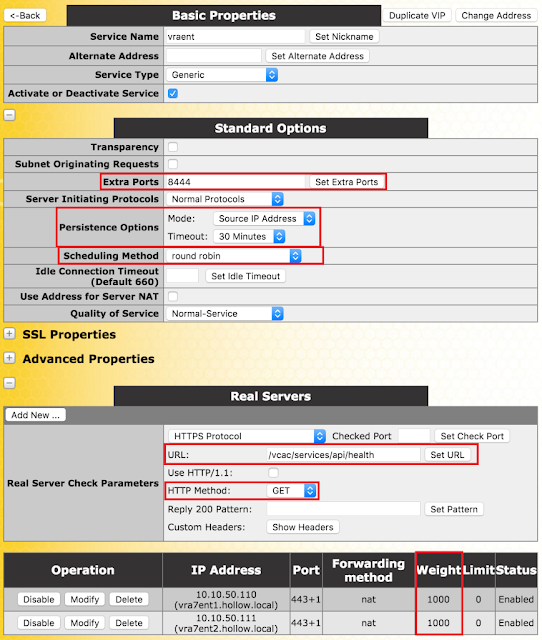

The image below shows the Virtual Service properties screen. It is composed of several component sections:

![]()

Figure 3‑3: Virtual Service Properties screen

- Basic Properties: where the usual and most common attributes are set

- Standard Options: the most widely used features of a Virtual Service

- SSL Properties: if SSL acceleration is being used, this section shows Acceleration Enabled and is used to configure the SSL functions

- Advanced Properties: the additional features for a Virtual Service

- WAF Options: where the options relating to the Application Firewall Pack (AFP) can be set

- ESP Options: where the options relating to ESP are set

- Real Servers/SubVSs: where Real Servers/SubVSs are assigned to a Virtual Service

Depending upon the service type, and enabled or disabled features, specific fields and options show in the WUI. The screenshots in this document may not represent every possible configuration.

Basic Properties


Figure 3‑4: Basic Properties section

There are two buttons adjacent to the Basic Properties heading:

Duplicate VIP: This option makes a copy of the Virtual Service, including any related SubVSs. All Virtual Service configuration settings are copied to the duplicate Virtual Service. When this button is clicked, a screen appears where the IP address and port can be specified for the copied Virtual Service.

Change Address: Clicking this button opens a screen where the virtual IP address and port of the Virtual Service can be modified.

The fields in the Virtual Service modify screen are:

Service Name: This text box allows you to assign a nickname to the Virtual Service being created, or to change an existing one.

In addition to the usual alphanumeric characters, the following ‘special’ characters can be used as part of the Service Name:

. @ - _

However, there must be at least one alphanumeric character before the special characters.

This field is where you can optionally specify a secondary address, in either IPv6 or IPv4 format.

Service Type: Setting the Service Type controls the options displayed for the Virtual Service. It’s important to make sure the Service Type is set according to the type of application that you are load balancing.

WebSocket Virtual Services must be set to the Generic Service Type.

The HTTP/2 Service Type allows HTTP/2 traffic but does not currently offer any Layer 7 options beyond address translation (transparency, subnet originating, alternate source).

This check box gives you the option to activate or deactivate a Virtual Service. By default, it is selected (active).

Standard Options


Figure 3‑5: Standard Options section

Force L7: If visible, Force L7 should be selected (default). If it is not selected, the Virtual Service will be forced to Layer 4.

L7 Transparency: Enabling this option makes the Virtual Service transparent (no NAT). However, if the client resides on the same subnet as the Virtual IP and Real Servers, the Virtual Service will automatically NAT the source IP (enabling non-transparency).

If the Real Servers considered local option is enabled, then the Real Servers, within a two-armed configuration, are considered local even if they are on a different arm of the configuration.

Subnet Originating Requests: This option is only available if Transparency is not enabled.

When transparency is not enabled, the source IP address of connections to the Real Servers is that of the Virtual Service. When transparency is enabled, the source IP address is the IP address of the client initiating the connection to the Virtual Service. If the Real Server is on a subnet and the Subnet Originating Requests option is enabled, the subnet address of the LoadMaster will be used as the source IP address.

This switch allows control of subnet originating requests on a per-Virtual Service basis. If the global switch (Subnet Originating Requests in System Configuration > Miscellaneous Options > Network Options in the main menu) is enabled, then it is enabled for all Virtual Services.

It is recommended that the Subnet Originating Requests option is enabled on a per-Virtual Service basis.

If the global option is not enabled, it can be controlled on a per-Virtual Service basis.

If this option is switched on for a Virtual Service that has SSL re-encryption enabled, all connections currently using the Virtual Service will be terminated.

Extra Ports: You may specify a range of ports, sequential or otherwise, starting with the base port already configured for the Virtual Service. The maximum range is 510 ports.

You can enter the extra ports as port ranges or single ports, separated by spaces or commas, in whatever order you wish. For example, entering the list 8000-8080, 9002, 80, 8050, 9000 will add the ports 80, 8000 to 8080, 9000 and 9002 (8050 is already covered by the 8000-8080 range).
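The following Python sketch shows one way such a list could be expanded into individual ports. It is illustrative only: the function name `parse_extra_ports` is hypothetical, and treating the 510-port maximum as a limit on the total number of extra ports is an assumption rather than documented LoadMaster behaviour.

```python
import re

MAX_EXTRA_PORTS = 510  # assumption: limit on the total number of extra ports


def parse_extra_ports(spec: str) -> list[int]:
    """Expand a string such as '8000-8080, 9002, 80' into sorted, unique ports."""
    ports: set[int] = set()
    # Ranges and single ports may be separated by commas, spaces, or both.
    for token in re.split(r"[,\s]+", spec.strip()):
        if not token:
            continue
        if "-" in token:
            low_text, high_text = token.split("-", 1)
            low, high = int(low_text), int(high_text)
            if low > high:
                raise ValueError(f"invalid range: {token}")
            ports.update(range(low, high + 1))
        else:
            ports.add(int(token))
    if any(not 1 <= port <= 65535 for port in ports):
        raise ValueError("ports must be between 1 and 65535")
    if len(ports) > MAX_EXTRA_PORTS:
        raise ValueError(f"more than {MAX_EXTRA_PORTS} extra ports")
    return sorted(ports)


# Duplicates such as 8050 (already inside 8000-8080) are counted once.
print(parse_extra_ports("8000-8080, 9002, 80, 8050, 9000")[:3])  # [80, 8000, 8001]
```

Using a set makes the order of entry irrelevant and removes overlaps, which matches the example above where 8050 adds nothing new.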

Server Initiating Protocols: By default, the LoadMaster will not initiate a connection with a Real Server until it has received some data from a client. This prevents certain protocols from working, because in these protocols the server must send data (for example, a greeting) before the client transmits anything.

If the Virtual Service uses one of these protocols then select the protocol from the drop-down list to enable it to work correctly.

The protocols that can be selected are:

- SMTP

- SSH

- IMAP4

- MySQL

- POP3

- Other Server Initiating Protocols

The Server Initiating Protocols option is not visible when the port specified in the Virtual Service is 80, 8080 or 443.

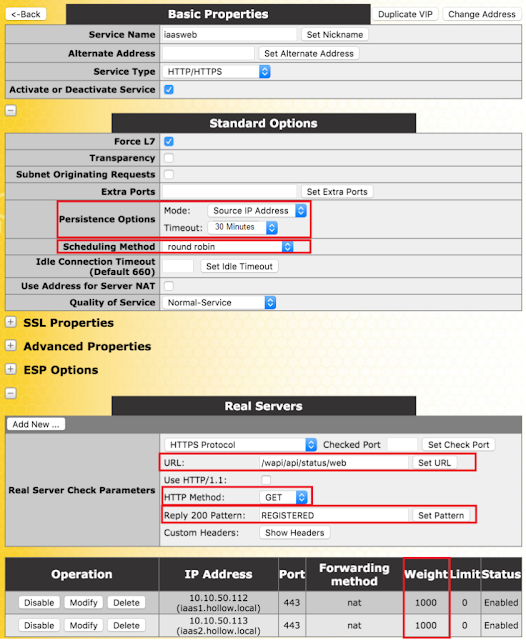

Persistence Options: Persistence is set up on a per-Virtual Service basis. This section allows you to select whether persistence is enabled for this service, the type of persistence and the persistence timeout value.

If persistence is enabled, a client connection to a particular Real Server via the LoadMaster is persistent; in other words, the same client will subsequently connect to the same Real Server. The timeout value determines how long this particular connection is remembered.

The drop-down list gives you the option to select the type of persistence. These are:

- Source IP Address:

The source IP address (of the requesting client) is used as the key for persistence in this case.

- Super HTTP:

Super HTTP is the recommended method for achieving persistence for HTTP and HTTPS services with the LoadMaster. It works by creating a unique fingerprint of the client browser and using that fingerprint to preserve connectivity to the correct Real Server. The fingerprint is based on the combined values of the User-Agent field and, if present, the Authorization header. Connections with the same header combination will be sent back to the same Real Server.

- Server Cookie:

The LoadMaster checks the value of a specially set cookie in the HTTP header. Connections with the same cookie will go to the same Real Server.

- Server Cookie or Source IP:

If cookie persistence fails, it reverts to source-based persistence.

- Active Cookie:

The LoadMaster automatically sets the special cookie.

- Active Cookie or Source IP:

If active cookie persistence fails, it reverts to source-based persistence.

- Hash All Cookies:

The Hash All Cookies method creates a hash of the values of all cookies in the HTTP stream. Cookies with the same value will be sent to the same server for each request. If the values change, the connection will be treated as a new connection and the client will be allocated to a server according to the load balancing algorithm.

- Hash All Cookies or Source IP:

Hash All Cookies or Source IP is identical to Hash All Cookies, with the additional feature that it falls back to Source IP persistence if no cookies are present in the HTTP stream.

- Super HTTP and Source IP Address:

This is the same as Super HTTP, but it also appends the source IP address to the string, thus improving the distribution of the resulting hash.

- URL Hash:

With URL Hash persistence, the LoadMaster will send requests with the same URL to the same server.

- HTTP Host Header:

With HTTP Host Header persistence, the LoadMaster will send all requests that contain the same value in the HTTP Host: header to the same server.

- Query Hash:

This method operates in exactly the same manner as Server Cookie persistence, except that the named item being inspected is a Query Item in the Query String of the URL. All queries with the same Query Item value will be sent to the same server.

- Selected Header:

With Selected Header persistence, the LoadMaster will send all requests that contain the same value in the specified header to the same server.

- SSL Session ID:

Each session over SSL has its own session ID, which can be used as the basis for persistence.

For this option to appear as a persistence method, the Virtual Service needs to have a Service Type of Generic and SSL acceleration must be disabled.

If a Virtual Service is an SSL service and is not offloaded, the LoadMaster cannot meaningfully interact with any of the data in the stream at Layer 7, because the data is encrypted and the LoadMaster has no way of decrypting it.

If, in this scenario, a persistence mode that is not based on source IP is required, this is the only other option. When an SSL session is started, it generates a session ID for the connection. This session ID can be used to cause the client to persist to the correct server.

There are some downsides to this, however: most modern browsers regenerate the session ID at very short intervals, effectively overwriting it, even if a longer interval is set on the persistence timeout.

- UDP Session Initiation Protocol (SIP):

This persistence mode is only available in a UDP Virtual Service when Force L7 is enabled. SIP uses request and response transactions, similar to HTTP. An initial INVITE request is sent, which contains a number of header fields. These header fields can be used for persistence.

Timeout: When any persistence mode is selected, a Timeout drop-down list appears. This allows you to set the length of time after the last connection that the LoadMaster will remember the persistence information.
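To make the interaction between a persistence key and the Timeout concrete, the Python sketch below keeps a table that maps a key to a Real Server and forgets an entry once the timeout has elapsed since its last connection. The key used here is a Super HTTP-style fingerprint built from the User-Agent and, if present, Authorization headers. The SHA-256 hashing and all names (`super_http_fingerprint`, `PersistenceTable`, `route`) are assumptions for illustration, not the LoadMaster's internal implementation.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Optional


def super_http_fingerprint(headers: dict[str, str]) -> str:
    """Hypothetical fingerprint: hash of User-Agent plus Authorization (if any)."""
    parts = [headers.get("User-Agent", ""), headers.get("Authorization", "")]
    return hashlib.sha256("\n".join(parts).encode("utf-8")).hexdigest()


@dataclass
class PersistenceTable:
    timeout: float  # seconds to remember an entry after its last connection
    entries: dict[str, tuple[str, float]] = field(default_factory=dict)

    def lookup(self, key: str, now: float) -> Optional[str]:
        entry = self.entries.get(key)
        if entry is None:
            return None
        server, last_seen = entry
        if now - last_seen > self.timeout:
            del self.entries[key]  # expired: forget the binding
            return None
        self.entries[key] = (server, now)  # each connection refreshes the entry
        return server

    def route(self, key: str, now: float, pick: Callable[[], str]) -> str:
        """Return the remembered server, or ask the scheduler (`pick`) for one."""
        server = self.lookup(key, now)
        if server is None:
            server = pick()
            self.entries[key] = (server, now)
        return server


table = PersistenceTable(timeout=60)
key = super_http_fingerprint({"User-Agent": "Mozilla/5.0"})
print(table.route(key, now=0, pick=lambda: "rs1"))   # new client: scheduler picks rs1
print(table.route(key, now=30, pick=lambda: "rs2"))  # within 60 s: still rs1
```

Because the timeout is measured from the last connection, a client that keeps connecting stays on the same Real Server indefinitely; only a gap longer than the timeout hands it back to the scheduling method.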

Header field name: When UDP Session Initiation Protocol is selected as the persistence mode, a text box called Header field name appears. Enter here the header field that is to be used as the basis for the persistence information.

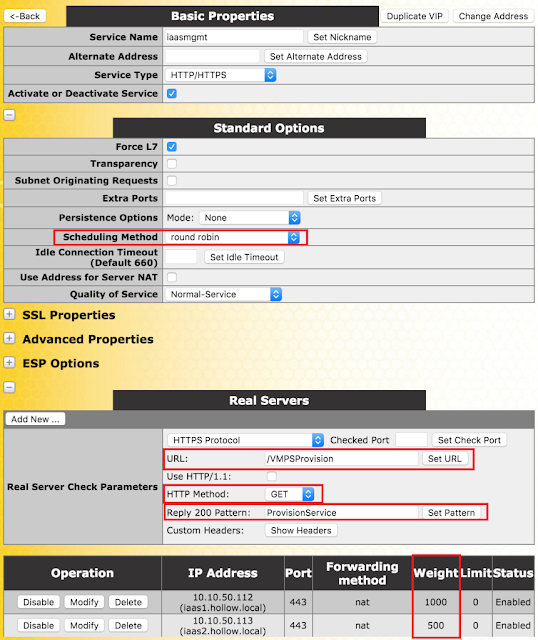

Scheduling Methods: This section allows you to select the method by which the LoadMaster will select a Real Server for this particular service. The scheduling methods are as follows:

- Round Robin:

Round Robin causes the LoadMaster to assign Real Servers to a session in order, i.e. the first session connects to Real Server 1, the second to Real Server 2, and so on. There is no bias in the way the Real Servers are assigned.

- Weighted Round Robin:

This method uses the weight property of the Real Servers to determine which Real Servers get preference. The higher the weight a Real Server has, the higher the proportion of connections it will receive.

- Least Connection:

With this method, the Real Server that currently has the fewest open connections is assigned to the session.

- Weighted Least Connection:

As with Least Connection, but with a bias relative to the weight.

- Resource Based (Adaptive):

Adaptive scheduling means that the load on the Real Servers is periodically monitored and that packets are distributed such that load will be approximately equal for all machines. More details can be found in the section covering scheduling methods.

- Resource Based (SDN Adaptive):

A Virtual Service that uses an adaptive scheduling method (whether using SDN or not) can be viewed as a control system. The intent is to achieve an evenly distributed load over the Real Servers: the controller calculates an error value that describes the deviation from the desired even distribution, and a set of control values (Real Server weights) that are fed back into the system to decrease the error value.

- Fixed Weighting:

All traffic goes to the highest-weight Real Server that is available. Real Servers should be weighted at the time they are created, and no two Real Servers should have the same weight; otherwise, unpredictable results may occur.

- Weighted Response Time:

Every 15 seconds, the LoadMaster measures the time it takes for a response to a health check probe to arrive, and uses this time to adjust the weights of the Real Servers accordingly, i.e. a faster response time relative to the other Real Servers leads to a higher weight, which in turn leads to more traffic being sent to that server.

- Source IP Hash:

Instead of using the weights or doing round robin, the LoadMaster generates a hash of the source IP address and uses it to find the correct Real Server. This means that requests from the same host always go to the same Real Server, so source IP persistence is not needed.

Because this method relies solely on the client (source) IP address and ignores current server load, using this method can lead to a particular Real Server becoming overloaded, or a general traffic imbalance across all Real Servers.
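The simpler, non-adaptive scheduling methods above can be sketched in a few lines of Python. This is a model for understanding only: the class and function names are hypothetical, the weighted round robin uses the "smooth" weighted round robin technique popularised by nginx (the LoadMaster's exact algorithm is not documented here), and the source IP hash uses SHA-256 as an arbitrary stable hash. Each function assumes `servers` contains only Real Servers that are currently available; the adaptive methods are not modelled.

```python
import hashlib
import ipaddress
from dataclasses import dataclass
from itertools import count


@dataclass
class RealServer:
    name: str
    weight: int = 1
    active_connections: int = 0


class RoundRobin:
    """Assign servers in order: session 1 to server 1, session 2 to server 2, ..."""

    def __init__(self, servers: list[RealServer]) -> None:
        self.servers = servers
        self._counter = count()

    def pick(self) -> RealServer:
        return self.servers[next(self._counter) % len(self.servers)]


class WeightedRoundRobin:
    """Smooth weighted round robin: a higher weight gives a larger share."""

    def __init__(self, servers: list[RealServer]) -> None:
        self.servers = servers
        self._current = {s.name: 0 for s in servers}

    def pick(self) -> RealServer:
        total = sum(s.weight for s in self.servers)
        for s in self.servers:
            self._current[s.name] += s.weight
        best = max(self.servers, key=lambda s: self._current[s.name])
        self._current[best.name] -= total  # spreads picks out instead of bursting
        return best


def least_connection(servers: list[RealServer]) -> RealServer:
    return min(servers, key=lambda s: s.active_connections)


def weighted_least_connection(servers: list[RealServer]) -> RealServer:
    # Open connections per unit of weight; assumes every weight is > 0.
    return min(servers, key=lambda s: s.active_connections / s.weight)


def fixed_weighting(servers: list[RealServer]) -> RealServer:
    # All traffic to the highest-weight available server.
    return max(servers, key=lambda s: s.weight)


def source_ip_hash(servers: list[RealServer], client_ip: str) -> RealServer:
    # The same client address always hashes to the same server (if the set is unchanged).
    digest = hashlib.sha256(ipaddress.ip_address(client_ip).packed).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


a, b, c = RealServer("a", weight=5), RealServer("b"), RealServer("c")
wrr = WeightedRoundRobin([a, b, c])
print([wrr.pick().name for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

The source IP hash function also illustrates the caveat above: it never looks at `active_connections`, so a busy client address keeps loading the same server.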

Idle Connection Timeout (Default 660): The number of seconds before an idle connection is closed. There are some special values that can be set for this field:

- Setting it to 0 will ensure that the default L7 connection timeout will be used. The default Connection Timeout value can be modified by going to System Configuration > Miscellaneous Options > Network Options.

- Setting it to 1 will discard the connection after the packet is first forwarded; a response is not expected or handled.

- Setting it to 2 will use a DNS-type operation: the connection is dropped after the reply message.

Setting the Idle Connection Timeout to the special values of 1 or 2 allows better performance and memory usage for UDP connections, and these values correspond better to how UDP is used.

Quality of Service: The Quality of Service drop-down sets a Differentiated Services Code Point (DSCP) in the IP header of packets that leave the Virtual Service. This means that the next device or service that deals with the packets will know how to treat and prioritise this traffic. Higher priority packets are sent from the LoadMaster before lower priority packets.

The different options are described below:

- Normal-Service: No special priority given to the traffic

- Minimize-Cost: Used when data needs to be transferred over a link that has a lower “cost”

- Maximize-Reliability: Used when data needs to travel to the destination over a reliable link and with little or no retransmission

- Maximize-Throughput: Used when the volume of data transferred during an interval is important, even if the latency over the link is high

- Minimize-Delay: Used when the time required (latency) for the packet to reach the destination must be low. This option has the fastest queue of all the Quality of Service choices.

The Quality of Service feature only works with Layer 7 traffic. It does not work with Layer 4 traffic.
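The option names match the IPv4 Type of Service (TOS) values defined in RFC 1349, the same names used by the Linux iptables TOS target. Assuming the LoadMaster writes these values, the Python sketch below lists them, shows how a DSCP-aware device would read the same header byte (the DSCP is its upper six bits), and applies a value to a socket with the standard `IP_TOS` option. The mapping is an assumption for illustration, not taken from LoadMaster documentation, and `apply_qos` is a hypothetical helper.

```python
import socket

# RFC 1349 TOS byte values (assumed to correspond to the LoadMaster options).
TOS_VALUES = {
    "Normal-Service": 0x00,
    "Minimize-Cost": 0x02,
    "Maximize-Reliability": 0x04,
    "Maximize-Throughput": 0x08,
    "Minimize-Delay": 0x10,
}


def dscp_of(tos_byte: int) -> int:
    """A DSCP-aware device reads the upper six bits of the same header byte."""
    return tos_byte >> 2


def apply_qos(sock: socket.socket, option: str) -> None:
    """Mark outgoing IPv4 packets from `sock` on platforms that support IP_TOS."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, TOS_VALUES[option])


for name, value in TOS_VALUES.items():
    print(f"{name:<22} TOS=0x{value:02x} DSCP={dscp_of(value)}")
```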

Use Address for Server NAT: By default, when the LoadMaster is being used to SNAT Real Servers, the source IP address used on the internet is that of the LoadMaster. The Use Address for Server NAT option allows the Real Servers configured on the Virtual Service to use the Virtual Service address as the source IP address instead.

This option is most useful for services such as SMTP when the LoadMaster is in a public domain and when the service requires a reverse DNS check to see if the source address sent from the LoadMaster is the same as the Mail Exchanger (MX) record of the sender.

If the Real Servers are configured on more than one Virtual Service which has this option set, only connections to destination port 80 will use this Virtual Service as the source IP address.

The Use Address for Server NAT option only works on Virtual Services which are operating on the default gateway. This option is not supported on non-default gateway interfaces.

SSL Properties


Figure 3‑6: SSL Properties section

SSL Acceleration: This checkbox appears when the criteria for SSL Acceleration have been met, and serves to activate SSL Acceleration.

Enabled: If the Enabled check box is selected and there is no certificate for the Virtual Service, you will be prompted to install a certificate. A certificate can be added by clicking the Manage Certificates button and importing or adding a certificate.

Reencrypt: Selecting the Reencrypt checkbox re-encrypts the SSL data stream before sending it to the Real Server.

Reversed: Selecting this checkbox means that the data from the LoadMaster to the Real Server is re-encrypted. The input stream must not be encrypted. This is only useful in connection with a separate Virtual Service which decrypts SSL traffic, then uses this Virtual Service as a Real Server and loops data back to it. In this way, the data path from the client to the Real Server is always encrypted on the wire.

Supported Protocols: The checkboxes in the Supported Protocols section allow you to specify which protocols should be supported by the Virtual Service. By default, the three TLS protocols are enabled and SSLv3 is disabled.

Require SNI hostname: If Require SNI hostname is selected, the Server Name Indication (SNI) hostname must always be sent in the TLS client hello message.

When Require SNI hostname is disabled, the first certificate will be used if a host header match is not found.

When Require SNI hostname is enabled, a certificate with a matching common name must be found; otherwise, an SSL error is returned. Wildcard certificates are also supported with SNI.

When using a Subject Alternative Name (SAN) certificate, the alternative names in the certificate are not matched against the host header.

Wildcard certificates are supported, but note that, as per RFC 2459, the root domain name will not be matched: the wildcard only matches names that have a label to the left of the domain. Additional certificates must be added to match the root domain names. For example, www.kemptechnologies.com will be matched by a wildcard of *.kemptechnologies.com, but kemptechnologies.com will not be matched.
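The certificate selection rules described above can be summarised in a short Python sketch. It is a simplified model under stated assumptions: each certificate is represented only by its common name, the function names (`hostname_matches`, `select_certificate`) are hypothetical, and a wildcard is taken to cover exactly one left-most label, which is why the bare root domain does not match.

```python
from typing import Optional


def hostname_matches(pattern: str, hostname: str) -> bool:
    """Match a certificate name against a hostname; '*' covers one left-most label."""
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if not pattern.startswith("*."):
        return pattern == hostname
    suffix = pattern[1:]  # e.g. ".kemptechnologies.com"
    label, dot, rest = hostname.partition(".")
    # The wildcard must stand in for exactly one non-empty label, so
    # "kemptechnologies.com" itself is not matched by "*.kemptechnologies.com".
    return bool(label) and dot == "." and "." + rest == suffix


def select_certificate(certs: list[str], sni: Optional[str],
                       require_sni: bool) -> Optional[str]:
    """Return the certificate to present, or None to signal an SSL error."""
    if sni:
        for cert in certs:
            if hostname_matches(cert, sni):
                return cert
    if require_sni:
        return None  # no matching certificate: the handshake fails
    return certs[0] if certs else None  # fall back to the first certificate


certs = ["example.org", "*.kemptechnologies.com"]
print(select_certificate(certs, "www.kemptechnologies.com", require_sni=True))
```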

To send SNI host information in HTTPS health checks, please enable Use HTTP/1.1 in the Real Servers section of the relevant Virtual Service(s) and specify a host header. If this is not set, the IP address of the Real Server will be used.

Certificates: Available certificates are listed in the Available Certificates select list on the left. To assign or unassign a certificate, select it and click the right or left arrow button. Then click Set Certificates. Multiple certificates can be selected by holding Ctrl on your keyboard and clicking each required certificate.

Reencryption Client Certificate: With SSL connections, the LoadMaster gets a certificate from the client and also gets a certificate from the server. The LoadMaster transcribes the client certificate into a header and sends the data to the server. The server still expects a certificate, which is why it is preferable to install a pre-authenticated certificate in the LoadMaster.

Reencryption SNI Hostname: Specify the Server Name Indication (SNI) hostname that should be used when connecting to the Real Servers.

This field is only visible when SSL re-encryption is enabled.

Cipher Set: A cipher is an algorithm for performing encryption or decryption.

Each Virtual Service (which has SSL Acceleration enabled) has a cipher set assigned to it. This can either be one of the system-defined cipher sets or a user-customized cipher set. Selecting a system-defined cipher set is a quick and easy way to apply the relevant ciphers.

The system-defined cipher sets are as follows:

- Default: The current default set of ciphers in the LoadMaster.

- Default_NoRc4: The Default_NoRc4 cipher set contains the same ciphers as the default cipher set, except without the RC4 ciphers (which are considered to be insecure).

- BestPractices: This is the recommended cipher set to use. This cipher set is for services that do not need backward compatibility - the ciphers provide a higher level of security. The configuration is compatible with Firefox 27, Chrome 22, IE 11, Opera 14 and Safari 7.

- Intermediate_compatibility: For services that do not need compatibility with legacy clients (mostly Windows XP), but still need to support a wide range of clients, this configuration is recommended. It is compatible with Firefox 1, Chrome 1, IE 7, Opera 5 and Safari 1.

- Backward_compatibility: This is the old cipher suite that works with clients back to Windows XP/IE6. This should be used as a last resort only.

- FIPS: Ciphers which conform to FIPS (Federal Information Processing Standards).

- Legacy: This is the set of ciphers that were available on the old LoadMaster firmware (v7.0-10) before OpenSSL was updated.

Refer to the SSL Accelerated Services, Feature Description for a full list of the ciphers supported by the LoadMaster, and a breakdown of what ciphers are in each of the system-defined cipher sets.

KEMP Technologies can change the contents of these cipher sets as required based on the best available information.

The list of ciphers which are assigned to a Virtual Service can be edited by clicking the Modify Cipher Set button. If changes are made to a preconfigured cipher set, a new custom cipher set will be created. Custom cipher sets can be named and can be used across different Virtual Services.

By default, the name for the custom cipher set will be Custom_. KEMP recommends changing the name of custom cipher sets, because if another system-defined cipher set is modified, the name will again default to Custom_ and will overwrite any existing cipher set with that name.

It is not possible to modify the list of ciphers in a system-defined cipher set. Instead, a new custom cipher set will be created when changes are made to the ciphers list.

It is not possible to delete a custom cipher set in the LoadMaster WUI. However, it is possible to delete a cipher set using the RESTful API.

Ciphers: When a cipher set is selected and applied, the Ciphers list is read only. To modify the ciphers that are assigned to a Virtual Service, either change the assigned Cipher Set or click Modify Cipher Set.

When modifying a cipher set, available ciphers are listed on the left. Ciphers can be assigned or unassigned by selecting them and clicking the right or left arrow buttons. Then, specify a name for the custom cipher set and click Save Cipher Set. Multiple ciphers can be selected by holding the Ctrl key on your keyboard and selecting the required ciphers.
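LoadMaster cipher sets are managed in the WUI, but the idea of deriving a custom set from an existing one can be illustrated with OpenSSL cipher strings, which Python's standard `ssl` module accepts. The sketch below is an analogy, not the LoadMaster's configuration format: the cipher string `HIGH:!aNULL:!RC4` and the helper name `cipher_names` are arbitrary examples, and the resulting list depends on the local OpenSSL build. `get_ciphers()` may also list TLS 1.3 suites, which an OpenSSL cipher string does not control.

```python
import ssl


def cipher_names(cipher_string: str) -> list[str]:
    """Return the suite names OpenSSL enables for an OpenSSL cipher string."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(cipher_string)  # raises ssl.SSLError if nothing matches
    return [cipher["name"] for cipher in ctx.get_ciphers()]


# A "custom set": strong ciphers, no anonymous key exchange, and no RC4,
# similar in spirit to how Default_NoRc4 removes RC4 from the default set.
custom_set = cipher_names("HIGH:!aNULL:!RC4")
print(len(custom_set), custom_set[:3])
```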

Client Certificates: The following options are available:

- No Client Certificates required: enables the LoadMaster to accept HTTPS requests from any client. This is the recommended option.

By default the LoadMaster will accept HTTPS requests from any client. Selecting any of the other values below will require all clients to present a valid client certificate. In addition, the LoadMaster can also pass information about the certificate to the application.

This option should not be changed from the default of No Client Certificates required. Only change from the default option if you are sure that all clients that access this service have valid client certificates.

- Client Certificates required: requires that all clients forwarding an HTTPS request present a valid client certificate.

- Client Certificates and add Headers: requires that all clients forwarding an HTTPS request present a valid client certificate. The LoadMaster also passes information about the certificate to the application by adding headers.

- The options below send the certificate in its original raw form. The different options let you specify the format in which the certificate is sent:

- Client Certificates and pass DER through as SSL-CLIENT-CERT

- Client Certificates and pass DER through as X-CLIENT-CERT

- Client Certificates and pass PEM through as SSL-CLIENT-CERT

- Client Certificates and pass PEM through as X-CLIENT-CERT
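On the Real Server side, an application receiving one of these headers needs to turn the value back into a certificate. The Python sketch below normalises a header value to raw DER bytes. It assumes that a PEM value keeps its BEGIN/END CERTIFICATE lines (possibly with line breaks replaced by spaces) and that a DER value arrives base64-encoded, since raw binary cannot be carried in an HTTP header; check the header contents your LoadMaster actually sends before relying on either assumption. The function name `client_cert_der` is hypothetical.

```python
import base64
import ssl


def client_cert_der(header_value: str) -> bytes:
    """Return the raw DER bytes of a certificate passed in a request header."""
    value = header_value.strip()
    if value.startswith("-----BEGIN CERTIFICATE-----"):
        # PEM: base64 text between BEGIN/END lines; the decoder skips whitespace.
        return ssl.PEM_cert_to_DER_cert(value)
    # Otherwise assume DER that was base64-encoded for transport.
    return base64.b64decode(value, validate=True)
```

The returned bytes can then be handed to whatever X.509 parser the application already uses.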

Verify Client using OCSP: Verify, using the Online Certificate Status Protocol (OCSP), that the client certificate is valid.

This option is only visible when ESP is enabled.

Advanced Properties


Figure 3‑7: Advanced Properties section

Content Switching: Clicking the Enable button enables rule-based Content Switching on this Virtual Service. Once it is enabled, rules must be assigned to the various Real Servers. Rules can be attached to a Real Server by clicking the None button located next to the Real Server. Once rules are attached to a Real Server, the None button displays the number of rules attached.

Rules Precedence: Clicking the Rules Precedence button displays the order in which Content Switching rules are applied. This option only appears when Content Switching is enabled and rules are assigned to the Real Server(s).


Figure 3‑8: Request Rules

This screen shows the Content Switching rules that are assigned to the Real Servers of the Virtual Service and the order in which they apply. A rule may be promoted in the order of precedence by clicking its corresponding Promote button.
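Why precedence matters can be shown with a small Python model in which each rule is a regular expression on the URL path attached to one Real Server, and the first matching rule in precedence order wins. This is a hypothetical simplification: the names `Rule`, `select_server` and `promote` are illustrative, real Content Switching rules support more match types, and this is not the LoadMaster's rule engine.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    name: str
    pattern: str  # hypothetical: regular expression matched against the URL path
    server: str   # Real Server the rule is attached to


def select_server(rules_in_precedence: list[Rule], path: str) -> Optional[str]:
    """Return the Real Server of the first rule (in precedence order) that matches."""
    for rule in rules_in_precedence:
        if re.search(rule.pattern, path):
            return rule.server
    return None  # no rule matched


def promote(rules: list[Rule], name: str) -> list[Rule]:
    """Move the named rule one position up, like clicking its Promote button."""
    result = list(rules)
    i = next(i for i, rule in enumerate(result) if rule.name == name)
    if i > 0:
        result[i - 1], result[i] = result[i], result[i - 1]
    return result


rules = [Rule("images", r"^/images/", "rs-static"), Rule("jpg", r"\.jpg$", "rs-media")]
print(select_server(rules, "/images/a.jpg"))                   # rs-static
print(select_server(promote(rules, "jpg"), "/images/a.jpg"))   # rs-media
```

A request that matches more than one rule is decided entirely by the order, which is why promoting a rule can change where traffic goes.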

HTTP Selection Rules: Shows the selection rules that are associated with the Virtual Service.

HTTP Header Modifications: Clicking the Show Header Rules button displays the order in which Header Modification rules are implemented. The number of rules (of both request and response type) is displayed on the button itself.


Figure 3‑9: Modification Rules

From within this screen you can Add and Delete Header Modification rules. The order in which the rules are applied can be changed by clicking the Promote buttons.

Enable Caching: This option enables caching of static content, which saves valuable Real Server processing power and bandwidth. Caching can be enabled on HTTP and offloaded HTTPS Virtual Services.

The types of file that can be cached may be defined in the AFE configuration under the System Configuration > Miscellaneous Options menu.

Maximum Cache Usage: This option limits the size of the cache memory per Virtual Service. For example, two Virtual Services, each running with a limit of 50%, will use 100% of the cache store. The default is No Limit. It is recommended to limit the cache size to prevent unequal use of the cache store. Ensure that the maximum cache usage is adjusted so that each Virtual Service has a percentage of cache to use. If there is no remaining space to be allocated to a cache-enabled Virtual Service, that service will not cache content.

Enable Compression: Files sent from the LoadMaster are compressed with Gzip.

If compression is enabled without caching, LoadMaster performance may suffer.

The types of file that can be compressed may be defined in the AFE configuration in the System Configuration > Miscellaneous Options section of the LoadMaster WUI.

Compression is not recommended for files 100MB or greater in size.
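A Python sketch of the decision described above: compress a response body with Gzip only when its content type is in the configured list and it is smaller than 100MB. The function name `maybe_gzip` and the `compressible_types` parameter are hypothetical stand-ins for the AFE configuration, and 100MB is interpreted here as 100 × 1024 × 1024 bytes.

```python
import gzip

MAX_COMPRESS_BYTES = 100 * 1024 * 1024  # assumed reading of "100MB"


def maybe_gzip(body: bytes, content_type: str,
               compressible_types: set[str]) -> tuple[bytes, bool]:
    """Return (possibly compressed body, whether Gzip was applied)."""
    if content_type not in compressible_types or len(body) >= MAX_COMPRESS_BYTES:
        return body, False  # wrong type or too large: send unchanged
    return gzip.compress(body), True


page = b"<p>hello</p>" * 1000
compressed, applied = maybe_gzip(page, "text/html", {"text/html", "text/css"})
print(applied, len(page), len(compressed))
```

Every compression costs CPU on each response, which is consistent with the advice above that compression without caching may reduce LoadMaster performance.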

Detect Malicious Requests: The Intrusion Prevention System (IPS) service provides in-line protection of Real Server(s) through real-time mitigation of attacks and isolation of Real Server(s). Intrusion prevention is based on the industry standard SNORT database and provides real-time intrusion alerting.

Selecting the Detect Malicious Requests check box enables the IPS on HTTP and offloaded HTTPS Virtual Services. There are two options for handling requests that match a SNORT rule:

- Drop Connection: a rule match will generate no HTTP response.

- Send Reject: a rule match will generate a response to the client of HTTP 400 “Invalid Request”.

Both options prevent the request from reaching the Real Server(s).

Enable Multiple Connect: Enabling this option permits the LoadMaster to manage connection handling between the LoadMaster and the Real Servers. Requests from multiple clients will be sent over the same TCP connection.

Multiplexing only works for simple HTTP GET operations. The Enable Multiple Connect check box will not be available in certain situations, for example if WAF, ESP or SSL Acceleration is enabled.

Port Following: Port following enables a switch from an HTTP connection to an HTTPS (SSL) connection to be persistent on the same Real Server. Port following is possible between UDP and TCP connections.

To switch on port following, the following must be true:

- The Virtual Service where port following is being switched on must be an HTTPS service

- There must be an HTTP service

- Both of these Virtual Services must have the same Layer 7 persistence mode selected, i.e. Super HTTP or Source IP Address persistence

Port following is not available on SubVSs.

Add Header to Request: Enter the key and the value for the extra header that is to be inserted into every request sent to the Real Servers. Click the Set Header button to implement the functionality.

Add HTTP Headers

The Add HTTP Headers drop-down list is only available when SSL offloading (SSL Acceleration) is enabled.

This option allows you to select which headers are to be added to the HTTP stream. The options available include:

- Legacy Operation(XXX)

- None

- X-Forwarded-For

- X-Forwarded-For (No Via)

- X-ClientSide

- X-ClientSide (No Via)

- Via Only

With Legacy Operation, a header is added only if the system is in HTTP kernel mode; otherwise nothing is done. With any of the other options, the system is forced into HTTP kernel mode and the specified operation is performed.

Sorry Server

Enter the IP Address and Port number in the applicable fields. If no Real Servers are available, the LoadMaster will redirect to a specified location, with no checking. The IP address of a Sorry Server must be on a network or subnet that is defined on the LoadMaster.

When using a Layer 7 Virtual Service with transparency enabled, the Sorry Server should be on the same subnet as the Real Server.

Not Available Redirection Handling

When no Real Servers are available to handle the request, you can define the error code and URL that the client should receive.

- Error Code: If no Real Servers are available, the LoadMaster can terminate the connection with an HTTP error code. Select the appropriate error code.

- Redirect URL: When there are no Real Servers available and an error response is to be sent back to the client, a redirect URL can also be specified. If the string entered in this text box does not include http:// or https://, the string is treated as being relative to the current location, so the hostname will be added to the string in the redirect. This field also supports the wildcards %h and %s, which represent the requested hostname and Uniform Resource Identifier (URI) respectively.

- Error Message: When no Real Servers are available and an error response is to be sent back to the client, the specified error message will be added to the response.

For security reasons, the returned HTML page only contains the text “Document has moved.” No request-supplied information is returned.

- Error File: When no Real Servers are available and an error response is to be sent back to the client, the specified file will be added to the response. This enables simple error HTML pages to be sent in response to the specified error.

The maximum size of this error page is 16KB.
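The Redirect URL handling described above can be sketched as follows. This is an illustration only, assuming that `%h` and `%s` are replaced with the requested hostname and URI, and that a relative string is prefixed with a scheme and the requested hostname; the function name and the `scheme` parameter are hypothetical, and the LoadMaster's exact behavior may differ.

```python
import re

def expand_redirect_url(template: str, host: str, uri: str, scheme: str = "http") -> str:
    """Hypothetical sketch of Redirect URL wildcard expansion."""
    # Replace %h (requested hostname) and %s (requested URI) in one pass,
    # so a hostname or URI that itself contains "%s" is not expanded again.
    expanded = re.sub(r"%([hs])",
                      lambda m: host if m.group(1) == "h" else uri,
                      template)
    if expanded.startswith(("http://", "https://")):
        return expanded  # absolute URL: used as entered
    # Relative string: the requested hostname is added (scheme is an assumption).
    if not expanded.startswith("/"):
        expanded = "/" + expanded
    return f"{scheme}://{host}{expanded}"
```

For example, `https://backup.example.com%s` sends a client that requested `/shop/cart` to the same path on the backup site, while a bare `/sorry.html` stays on the requested host.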

Not Available Server/Port

![]()

Figure 3‑10: Not Available Server

In a UDP Virtual Service there is an option to specify a Not Available Server and Port. When there are no Real Servers available to handle the request, this option defines the server and port to which the client's request is sent.

The value of the Not Available Server can only be changed for UDP if the service is not currently using the Not Available Server.

Add a Port 80 Redirector VS

If no port 80 Virtual Service is configured, one can be created. It will then redirect the client to the URL specified in the Redirection URL: field.

Click the Add HTTP Redirector button to implement the redirector.

When the Add HTTP Redirector button is clicked, a redirect Virtual Service is created and this WUI option disappears from the relevant Virtual Service.

Default Gateway

Specify the Virtual Service-specific gateway to be used to send responses back to the clients. If this is not set, the global default gateway will be used.

Click the Set Default Gateway button to implement the default gateway.

If the global Use Default Route Only option is set in System Configuration > Miscellaneous Options > Network Options, traffic from Virtual Services that have the Default Gateway set will only be routed to the interface where the Virtual Service’s default route is located. This allows the LoadMaster to be directly connected to client networks without returning traffic directly through the adjacent interface.

Alternate Source Addresses

If no list is specified, the LoadMaster will use the IP address of the Virtual Service as its local address. Specifying a list of addresses ensures the LoadMaster will use these addresses instead.

Click the Set Alternate Source Addresses button to implement the Alternate Source Addresses.

This option is only available if the Allow connection scaling over 64K Connections option is enabled in the L7 Configuration screen.

Service Specific Access Control

Allows you to change the Virtual Service-specific Access Control lists.

If you implement the Access Control Lists option, the Extra Ports option will not work correctly.

Web Application Firewall (WAF) Options

![]()

Figure 3‑11: AFP Options

The Web Application Firewall (WAF) feature must be enabled before you can configure these options.

![]()

Figure 3‑12: Enable AFP

To enable WAF, select the Enabled check box. A message next to the Enabled check box shows how many WAF-enabled Virtual Services exist and the maximum number of WAF-enabled Virtual Services that can exist. If the maximum number of WAF-enabled Virtual Services has been reached, the Enabled check box is greyed out.

Utilizing WAF can have a significant performance impact on your LoadMaster deployment. Please ensure that the appropriate resources are allocated.

For virtual and bare metal LoadMaster instances, a minimum of 2GB of allocated RAM is required for operation of WAF. The default memory allocation for Virtual LoadMasters and LoadMaster Bare Metal instances prior to LoadMaster Operating System version 7.1-22 is 1GB of RAM. If this default allocation has not been changed, please modify the memory settings before attempting to proceed with WAF configuration.

Select the default operation of the WAF:

- Audit Only: This is an audit-only mode – logs will be created but requests and responses are not blocked.

- Block Mode: Either requests or responses are blocked.

Audit mode

Select what logs to record:

- No Audit: No data is logged.

- Audit Relevant: Logs data which is of a warning level and higher. This is the default option for this setting.

- Audit All: Logs all data through the Virtual Service.

Selecting the Audit All option produces a large amount of log data. KEMP does not recommend selecting the Audit All option for normal operation. However, the Audit All option can be useful when troubleshooting a specific problem.

Inspect HTML POST Request Content

Enable this option to also process the data supplied in POST requests.

Two additional options (Disable JSON Parser and Disable XML Parser) only become available if Inspect HTML POST Request Content is enabled.

Disable JSON Parser

Disable processing of JavaScript Object Notation (JSON) requests.

Disable XML Parser

Disable processing of XML requests.

Process Responses

Enable this option to verify responses sent from the Real Servers.

This can be CPU and memory intensive.

If a Real Server is gzip encoding, WAF will not check that traffic, even if Process Responses is enabled.

Hourly Alert Notification Threshold

This is the threshold of incidents per hour before sending an alert. Setting this to 0 disables alerting.

Rules

This is where you can assign generic, custom, application-specific and application-generic rules to the Virtual Service, or unassign them from it.

You cannot assign application-specific and application-generic rules to the same Virtual Service.

Edge Security Pack (ESP) Options

The ESP feature must be enabled before you can configure these options. To enable the ESP function, please select the Enable ESP check box.

![]()

Figure 3‑13: ESP Options section

The full ESP Options screen will appear.

The ESP feature can only be enabled if the Virtual Service is an HTTP, HTTPS or SMTP Virtual Service.

![]()

Figure 3‑14: ESP Options

Enable ESP

Enable or disable the ESP feature set by selecting or deselecting the Enable ESP check box.

ESP Logging

There are three types of logs stored in relation to the ESP feature. Each of these logs can be enabled or disabled by selecting or deselecting the relevant check box. The types of log include:

- User Access: logs recording all user logins

- Security: logs recording all security alerts

- Connection: logs recording each connection

Logs are persistent and can be accessed after a reboot of the LoadMaster.

Client Authentication Mode

Specifies how clients attempting to connect to the LoadMaster are authenticated. The following methods are available:

- Delegate to Server: the authentication is delegated to the server

- Basic Authentication: standard Basic Authentication is used

- Form Based: clients must enter their user details within a form to be authenticated on the LoadMaster

- Client Certificate: clients must present the certificate which is verified against the issuing authority

- NTLM: NTLM credentials are based on data obtained during the interactive logon process and consist of a domain name and a user name

The remaining fields in the ESP Options section will change based on the Client Authentication Mode selected.

SSO Domain

Select the Single Sign-On (SSO) Domain within which the Virtual Service will be included.

An SSO Domain must be configured in order to correctly configure the ESP feature.

Only SSO domains with the Configuration type of Inbound Configuration will be shown as options in this SSO Domain field.

Alternative SSO Domains

Many organizations use extranets to share information with customers and partners. It is likely that extranet portals will have users from two or more Active Directory domains. Rather than authenticating users from individual domains one at a time, assigning Alternative SSO Domains gives the ability to simultaneously authenticate users from two or more domains using one Virtual Service.

This option appears only when more than one domain has been configured.

Currently this option is available for domains which are configured with the following Authentication Protocols:

- LDAP

- RSA-SecurID

- Certificates

![]()

Figure 3‑15: Enabled and Reencrypt tick boxes selected

Before configuring the ESP Options to use Alternative SSO Domains, ensure that the Enabled and Reencrypt tick boxes are selected in the SSL Properties section.

![]()

Figure 3‑16: Available Domains

The domain name which appears in the SSO Domain drop-down list is the default domain. This is also the domain which will be used if only one is configured.

Previously configured alternative domains appear in the Available Domain(s) list.

![]()

Figure 3‑17: Alternative Domains (SECOND and THIRD) Assigned to the Virtual Service.

To assign alternative SSO Domains:

- Highlight each of the domains you wish to assign and click the > button.

An assigned domain is a domain which can be authenticated using a particular Virtual Service.

All domains which appear as available may be assigned to a Virtual Service.

- Click the Set Alternative SSO Domains button to confirm the updated list of Assigned Domain(s).

- Choose Basic Authentication from the Server Authentication Mode drop-down list.

When logging in to a domain using the ESP form, users should include the name of the SSO Domain in their username if an alternative domain needs to be accessed. If no domain name is entered in the username, users are, by default, logged on to the domain selected in the default SSO Domain drop-down list.

To view the status of the Virtual Services, click Virtual Services and View/Modify Services in the main menu.

A list of the Virtual Services is displayed, showing the current status of each service.

If alternative domains are assigned and there is an issue with a particular domain, the affected domain name is indicated in the Status column.

Allowed Virtual Hosts

The Virtual Service will only be allowed access to specified virtual hosts. Any virtual hosts that are not specified will be blocked.

Enter the virtual host name(s) in the Allowed Virtual Hosts field and click the Set Allowed Virtual Hosts button to specify the allowed virtual hosts.

Multiple domains may be specified within the field, allowing many domains to be associated with the Single Sign-On Domain.

The use of regular expressions is allowed within this field.

If this field is left blank, the Virtual Service will be blocked.

Allowed Virtual Directories

The Virtual Service will only be allowed access to the specified virtual directories, within the allowed virtual hosts. Any virtual directories that are not specified will be blocked.

Enter the virtual directory name(s) in the Allowed Virtual Directories field and click the Set Allowed Virtual Directories button to specify the allowed virtual directories.

The use of regular expressions is allowed within this field.

Pre-Authorization Excluded Directories

Any virtual directories specified within this field will not be pre-authorized on this Virtual Service and will be passed directly to the relevant Real Servers.
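Taken together, Allowed Virtual Hosts, Allowed Virtual Directories and Pre-Authorization Excluded Directories decide what happens to each request. The Python sketch below assumes that each field holds a list of regular expressions that must match the whole host name or path, that host matching is case-insensitive, and that an excluded directory must also be an allowed directory. These are illustrative assumptions, not documented LoadMaster internals, and `classify_esp_request` is a hypothetical name.

```python
import re

def _matches(patterns: list[str], value: str, flags: int = 0) -> bool:
    # Each entry is treated as a regular expression that must match the whole value.
    return any(re.fullmatch(p, value, flags) for p in patterns)

def classify_esp_request(host: str, path: str, allowed_hosts: list[str],
                         allowed_dirs: list[str], excluded_dirs: list[str]) -> str:
    """Return "blocked", "pass-through" or "pre-authorize" for a request."""
    if not _matches(allowed_hosts, host, re.IGNORECASE):
        return "blocked"        # host not listed; an empty list blocks everything
    if not _matches(allowed_dirs, path):
        return "blocked"        # directory not allowed within this virtual host
    if _matches(excluded_dirs, path):
        return "pass-through"   # sent directly to the Real Servers, no pre-authorization
    return "pre-authorize"      # ESP authenticates the user first
```

Note that leaving the host list empty blocks every request, which mirrors the statement above that a blank Allowed Virtual Hosts field blocks the Virtual Service.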

Permitted Groups

Specify the groups that are allowed to access this Virtual Service. When set, if a user logs in to a service published by this Virtual Service, the user must be a member of at least one of the groups specified. Up to 10 groups are supported per Virtual Service. Performance may be impacted if a large number of groups are entered. Groups entered in this field are validated via an LDAP query.

Some guidelines about this field are as follows:

- The group(s) specified must be valid groups on the Active Directory in the SSO domain associated with the Virtual Service. The SSO domain in the LoadMaster must be set to the directory for the groups. For example, if the SSO domain in the LoadMaster is set to webmail.example and webmail is not the directory for the groups, it will not work. Instead, the SSO domain may need to be set to .example.com.

- The group(s) listed must be separated by a semicolon

A space-separated list does not work because many group names contain a space, for example Domain Users.

- The following characters are not allowed in permitted group names: / : + *

- The authentication protocol of the SSO domain must be LDAP

- The groups should be specified by name, not by full distinguished name
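These Permitted Groups rules lend themselves to a simple input check before the value is saved. The following Python sketch splits the field on semicolons and applies the documented limits (at most 10 groups, none of the characters `/ : + *`). The distinguished-name test is a rough heuristic added for illustration, and `parse_permitted_groups` is a hypothetical helper, not part of the LoadMaster.

```python
import re

MAX_GROUPS = 10
FORBIDDEN_CHARS = set("/:+*")
# Rough heuristic (an assumption) for spotting a distinguished name such as
# "CN=Sales,OU=Groups,DC=example,DC=com".
DN_PATTERN = re.compile(r"^\s*(CN|OU|DC)=", re.IGNORECASE)

def parse_permitted_groups(value: str) -> list[str]:
    """Split a Permitted Groups string on semicolons and validate each name."""
    groups = [g.strip() for g in value.split(";") if g.strip()]
    if len(groups) > MAX_GROUPS:
        raise ValueError(f"at most {MAX_GROUPS} groups are supported, got {len(groups)}")
    for g in groups:
        bad = FORBIDDEN_CHARS.intersection(g)
        if bad:
            raise ValueError(f"{g!r} contains forbidden character(s): {''.join(sorted(bad))}")
        if DN_PATTERN.match(g):
            raise ValueError(f"{g!r} looks like a distinguished name; use the group name")
    return groups
```

Spaces inside names such as Domain Users are preserved, because only the semicolon acts as a separator.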

Include Nested Groups

This field relates to the Permitted Groups setting. Enable this option to include nested groups in the authentication attempt. If this option is disabled, only users in the top-level group will be granted access. If this option is enabled, users in both the top-level and first sub-level group will be granted access.

SSO Image Set

This option is only available if Form Based is selected as the Client Authentication Mode. You can choose which form to use to gather the Username and Password. There are three form options: Exchange, Blank and Dual Factor Authentication. There are also options to display the form and error messages in other languages.

![]()

Figure 3‑18: Exchange form

The Exchange form contains the KEMP logo.

![]()

Figure 3‑19: Blank form

The Blank form does not contain the large KEMP logo.

![]()

Figure 3‑20: Dual Factor Authentication form

The Dual Factor Authentication form contains four fields: two for the remote credentials and two for the internal credentials.

Remote Credentials are credentials that are used to authenticate against remote authentication servers such as RADIUS, before allowing the user to authenticate against Domain Servers such as Active Directory servers.

Internal Credentials are credentials that are used to authenticate against the internal domain servers such as Active Directory Servers.

If the Authentication Protocol of the relevant SSO Domain is set to RADIUS and LDAP, the SSO Image Set must be set to Dual Factor Authentication.

SSO Greeting Message

This option is only available if Form Based is selected as the Client Authentication Mode. The login forms can be further customized by adding text. Enter the text that you would like to appear on the form within the SSO Greeting Message field and click the Set SSO Greeting Message button. The message can have up to 255 characters.

The SSO Greeting Message field accepts HTML code, so you can insert an image if required.

The grave accent character ( ` ) is not supported. If this character is entered in the SSO Greeting Message, the character will not display in the output, for example a`b`c becomes abc.
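The following is a minimal sketch of the two SSO Greeting Message constraints, assuming the 255-character limit applies to the text as entered. The length is checked first, and any grave accents are then dropped as described above. `render_greeting` is a hypothetical helper name.

```python
MAX_GREETING_LENGTH = 255

def render_greeting(message: str) -> str:
    """Enforce the greeting length limit and drop unsupported grave accents."""
    if len(message) > MAX_GREETING_LENGTH:
        raise ValueError(f"greeting is {len(message)} characters; "
                         f"the limit is {MAX_GREETING_LENGTH}")
    # The grave accent is not supported and does not appear in the output.
    return message.replace("`", "")
```

HTML such as an `<img>` tag passes through unchanged, consistent with the field accepting HTML code.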

Logoff String

This option is only available if Form Based is selected as the Client Authentication Mode. Normally this field should be left blank. For OWA Virtual Services, the Logoff String should be set to /owa/logoff.owa; in customized environments, the modified Logoff String needs to be specified in this text box.

If the URL to be matched contains sub-directories before the specified string, the logoff string will not be matched, and the LoadMaster will not log the user off.
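The matching rule for the Logoff String can be sketched as a prefix test on the request path. This assumes the LoadMaster compares the string against the start of the path, which is consistent with the note above that a string preceded by extra sub-directories is not matched. `is_logoff_request` is a hypothetical name.

```python
from urllib.parse import urlsplit

def is_logoff_request(url: str, logoff_string: str) -> bool:
    """Sketch: match the Logoff String against the start of the request path."""
    if not logoff_string:
        return False  # blank field: no logoff matching
    path = urlsplit(url).path  # drops scheme, host and query string
    # Anchored at the start of the path, so "/mail/owa/logoff.owa" does not match.
    return path.startswith(logoff_string)
```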

Display Public/Private Option

![]()

Figure 3‑21: Public/private option

Enabling this check box displays a public/private option on the ESP login page. Based on the option the user selects on the login form, the Session timeout value is set to the value specified for either public or private in the Manage SSO Domain screen. If the user selects the private option, their username is stored for that session.

Use Session or Permanent Cookies

Specify whether the LoadMaster should send session or permanent cookies to the user's browser when logging in. Three options are available for this field:

- Session Cookies Only: This is the default and most secure option

- Permanent Cookies only on Private Computers: Sends permanent cookies on private computers and session cookies on public computers

- Permanent Cookies Always: Sends permanent cookies in all situations

Permanent cookies should only be used when using single sign on with services that have sessions spanning multiple applications, such as SharePoint.
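The three cookie options can be read as a small decision table. In this sketch, `private_computer` reflects the public/private choice the user made on the login form. How the LoadMaster treats users when that option is not displayed is not covered here, and the enum and function names are hypothetical.

```python
from enum import Enum

class CookieOption(Enum):
    SESSION_ONLY = "Session Cookies Only"
    PERMANENT_ON_PRIVATE = "Permanent Cookies only on Private Computers"
    PERMANENT_ALWAYS = "Permanent Cookies Always"

def cookie_type(option: CookieOption, private_computer: bool) -> str:
    """Return "permanent" or "session" for the cookie sent after login."""
    if option is CookieOption.PERMANENT_ALWAYS:
        return "permanent"
    if option is CookieOption.PERMANENT_ON_PRIVATE and private_computer:
        return "permanent"
    # Session Cookies Only, or a public computer with the private-only option.
    return "session"
```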

Server Authentication Mode

This field is only updatable when the Client Authentication Mode is set to Form Based.

Specifies how the LoadMaster is authenticated by the Real Servers. Three methods are available:

- None: no client authentication is required

- Basic Authentication: standard Basic Authentication is used

- KCD: KCD authentication is used

If Delegate to Server is selected as the Client Authentication Mode, then None is automatically selected as the Server Authentication mode. Similarly, if either Basic Authentication or Form Based is selected as the Client Authentication Mode, then Basic Authentication is automatically selected as the Server Authentication mode.

Server Side configuration

This option is only visible when the Server Authentication mode value is set to KCD.

Select the SSO domain for the server side configuration. Only SSO domains which have the Configuration type set to Outbound Configuration are shown here.

SMTP Virtual Services and ESP

If you create an SMTP Virtual Service (with 25 as the port), the ESP feature is available when you select the Enable ESP check box, but with a reduced set of options.

![]()

Figure 3‑22: ESP Options

Enable ESP

Enable or disable the ESP feature set by selecting or deselecting the Enable ESP check box.

Connection Logging

Logging of connections can be enabled or disabled by selecting or deselecting the Connection Logging check box.

Permitted Domains

All the permitted domains that are allowed to be received by this Virtual Service must be specified here. For example, if you wish the Virtual Service to receive SMTP traffic from john@kemp.com, then the kemp.com domain must be specified in this field.
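The following is a minimal sketch of the Permitted Domains check for an SMTP Virtual Service. It assumes an address is accepted only when its domain exactly matches a listed domain, ignoring case. Whether subdomains such as mail.kemp.com are covered by kemp.com is not stated above, so this sketch does not match them. `domain_is_permitted` is a hypothetical helper.

```python
def domain_is_permitted(address: str, permitted_domains: set[str]) -> bool:
    """Sketch: accept an address only if its domain is in the permitted list."""
    local, sep, domain = address.rpartition("@")
    if not sep or not local or not domain:
        return False  # not a usable address
    # Case-insensitive exact match; subdomains are not matched (assumption).
    return domain.lower() in {d.lower() for d in permitted_domains}
```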

Sub Virtual Services

From within a Virtual Service you can create one or more ‘Sub Virtual Services’ (SubVS). A SubVS is linked to, and uses the IP address of, the ‘parent’ Virtual Service. The SubVSs may have different settings (such as health check methods, content rules etc.) from the parent Virtual Service and from each other.

This allows the grouping of related Virtual Services, all using the same IP address. This could be useful for certain configurations such as Exchange or Lync, which typically consist of a number of Virtual Services.

Users with the Virtual Services permission can add a SubVS.

Users with the Real Server permission cannot add a SubVS.

![]()

Figure 3‑23: Real Servers section

To create a SubVS, within a Virtual Service configuration screen, expand the Real Servers section and click the Add SubVS button.

![]()

Figure 3‑24: SubVS created

A message appears stating that the SubVS has been created.

You cannot have Real Servers and SubVSs associated with the same Virtual Service. You can, however, associate a Real Server with a SubVS.

![]()

Figure 3‑25: SubVS section

When the SubVS is created, the Real Servers section of the Virtual Services configuration screen is replaced with a SubVSs section.

All the SubVSs for the Virtual Service are listed here. The Critical check box can be enabled to indicate that the SubVS is required in order for the Virtual Service to be considered available. If a non-critical SubVS is down, the Virtual Service is reported as up and a warning will be logged.

If a critical SubVS is down, a critical log will be generated and the Virtual Service will be marked as down. If the email options are configured, an email will be sent to the relevant recipients.

In all cases, if the Virtual Service is considered to be down and the Virtual Service has a sorry server or an error message configured, these will be used.
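The effect of the Critical check box on the parent Virtual Service can be sketched as follows. The `SubVS` record and the log strings are hypothetical. The sketch encodes only the two rules stated above and does not model the case where every SubVS is down but none is critical.

```python
from dataclasses import dataclass

@dataclass
class SubVS:
    name: str
    up: bool
    critical: bool = False  # the SubVS Critical check box

def parent_vs_status(subvss: list[SubVS]) -> tuple[str, list[str]]:
    """Return the parent Virtual Service state and the log entries raised."""
    logs: list[str] = []
    parent_up = True
    for s in subvss:
        if s.up:
            continue
        if s.critical:
            parent_up = False  # a critical SubVS that is down takes the parent down
            logs.append(f"critical: SubVS {s.name} is down")
        else:
            logs.append(f"warning: SubVS {s.name} is down")
    return ("up" if parent_up else "down"), logs
```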

To modify the SubVS, click the relevant Modify button. A configuration screen for the SubVS appears. This contains a subset of the configuration options available for a normal Virtual Service.

![]()

Figure 3‑26: Section of the SubVS modify screen

The SubVSs can also be modified by clicking the relevant Modify button from within the main Virtual Services view. A Virtual Service with SubVSs is colored differently within the Virtual IP address section, and the SubVSs are listed in the Real Server section. The SubVS details can be viewed by clicking the ‘parent’ Virtual Service to expand the view to include the SubVSs.

If you would like to remove a Virtual Service which contains SubVSs, you must remove the SubVSs first before you are able to delete the main service.

SubVSs may have different ESP configurations from their parent Virtual Service; however, care must be taken to ensure that the parent Virtual Service and SubVS ESP options do not conflict.

View/Modify (Remote Terminal Service)

This section is not relevant to the LoadMaster Exchange product.

Properties of the Virtual Service include the Generic Type and also provide Remote Terminal specific options.

Persistence

If the terminal servers support a Session Directory, the LoadMaster will use the routing information supplied by the Session Directory to determine the correct host to connect to. The LoadMaster persistence timeout value is irrelevant here; it is a feature of the Session Directory.

The switch "IP address redirection" in the Session Directory configuration must not be selected in order for this to work.

Using Session Directory with LoadMaster is optional, in terms of persistence. If the client pre-populates the username and password fields in the initial request, then this value is stored on the LoadMaster. As long as these fields are still populated upon reconnect, the LoadMaster will look up the name and reconnect to the same server as the original connection. The persistence timeout is used to limit the time the information is kept on the LoadMaster.

If Terminal-Service or Source IP mode is used and neither of these two modes succeeds, the source IP address will be used for persistence.

Service Check for the Virtual Service

Only three options are available: ICMP, TCP and RDP. Remote Terminal Protocol (RDP) opens a TCP connection to the Real Server on the Service port (port 3389). The LoadMaster sends a 1110 code (Connection Request) to the server. If the server sends a 1101 code (Connection Confirm), the LoadMaster closes the connection and marks the server as active. If the server fails to respond within the configured response time for the configured number of times, or if it responds with a different status code, it is assumed to be down.
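The 1110 and 1101 codes correspond to the X.224 Connection Request and Connection Confirm TPDU codes (0xE0 and 0xD0 in the code byte), which RDP carries inside a 4-byte TPKT header. The Python sketch below performs an equivalent check: it sends a minimal Connection Request with no routing token and accepts the server if a Connection Confirm comes back. It is based on the public X.224/TPKT framing used by RDP, not on the LoadMaster's own implementation (which, per the table below, passes an RDP routing token). The retry count is not modeled, and the function names are hypothetical.

```python
import socket

TPKT_VERSION = 3
X224_CR = 0xE0  # Connection Request: TPDU code 1110 in the upper nibble
X224_CC = 0xD0  # Connection Confirm: TPDU code 1101 in the upper nibble

def build_connection_request() -> bytes:
    """Minimal TPKT + X.224 Connection Request with no routing token or cookie."""
    x224 = bytes([6, X224_CR, 0, 0, 0, 0, 0])  # LI=6, CR, dst-ref, src-ref, class 0
    length = 4 + len(x224)                       # TPKT header is 4 bytes
    return bytes([TPKT_VERSION, 0]) + length.to_bytes(2, "big") + x224

def is_connection_confirm(reply: bytes) -> bool:
    """True if reply is a TPKT packet carrying an X.224 Connection Confirm."""
    return (len(reply) >= 6 and reply[0] == TPKT_VERSION
            and (reply[5] & 0xF0) == X224_CC)

def rdp_check(host: str, port: int = 3389, timeout: float = 5.0) -> bool:
    """Open a TCP connection, send a Connection Request, expect a Connection Confirm."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(build_connection_request())
            reply = sock.recv(1024)
    except OSError:
        return False  # refused, unreachable or timed out
    return is_connection_confirm(reply)
```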

Real Servers

This section allows you to create a Real Server and lists the Real Servers that are assigned to the Virtual Service. The properties of the Real Servers are summarized and there is also the opportunity to add or delete a Real Server, or modify the properties of a Real Server. When Content Switching is enabled, there is also the opportunity to add rules to, or remove rules from, the Real Server (see Add Rule).

Real Server Check Parameters

This provides a list of health checks for well-known services, as well as lower-level checks for TCP/UDP or ICMP. With the service health checks, the Real Servers are checked for the availability of the selected service. With TCP/UDP, the check is simply a connection attempt.

![]()

Figure 3‑27: Real Servers

Real Server Check Protocol

The tables below describe the options that may be used to verify Real Server health. You may also specify a health check port on the Real Server. If no port is specified here, the Real Server port is used.

When the HTTP/HTTPS, Generic or STARTTLS protocols Service Type is selected, the following health check options are available.

| Method | Action |
|---|---|
| ICMP Ping | An ICMP ping is sent to the Real Server |
| HTTP | HTTP checking is enabled |
| HTTPS | HTTPS (SSL) checking is enabled |
| TCP | A basic TCP connection is checked |
| Mail | The SMTP (Simple Mail Transfer Protocol) is used |
| NNTP | The NNTP (Network News Transfer Protocol) is used |
| FTP | The FTP (File Transfer Protocol) is used |
| Telnet | The Telnet protocol is used |
| POP3 | The POP3 (Post Office Protocol – mail client protocol) is used |
| IMAP | The IMAP (Internet Message Access Protocol – mail client protocol) is used |
| Name Service (DNS) Protocol | The Name Service Protocol is used |
| Binary Data | Specify a hexadecimal string to send and specify a hexadecimal string to check for in the response |
| None | No checking performed |

When the Remote Terminal Service Type is selected, the following health check options are available.

| Method | Action |
|---|---|
| ICMP Ping | An ICMP ping is sent to the Real Server |
| TCP | A basic TCP connection is checked |
| Remote Terminal Protocol | An RDP Routing Token is passed to the Real Server. This health check supports Network-Level Authentication. |
| None | No checking performed |

For a UDP Virtual Service, only the ICMP Ping and Name Service (DNS) Protocol options are available for use.

Enhanced Options

Enabling the Enhanced Options check box provides an additional health check option: Minimum number of RS required for VS to be considered up. If the Enhanced Options check box is disabled (the default), the Virtual Service will be considered available if at least one Real Server is available. If the Enhanced Options check box is enabled, you can specify the minimum number of Real Servers that must be available in order to consider the Virtual Service to be available.

Minimum number of RS required for VS to be considered up

This option will only appear if the Enhanced Options check box is enabled and if there is more than one Real Server.

Select the minimum number of Real Servers required to be available for the Virtual Service to be considered up.

If fewer than the minimum number of Real Servers are available, a critical log is generated. If some Real Servers are down but the number available has not fallen below the specified minimum, a warning is logged. If the email options are configured, an email will be sent to the relevant recipients.

Note that the system marks a Virtual Service as down whenever a Real Server that is marked as Critical becomes unavailable – even if Enhanced Options are enabled and there are more than the specified minimum number of Real Servers still available.

In all cases, if the Virtual Service is considered to be down and the Virtual Service has a sorry server or an error message configured, these will be used.

If the minimum number is set to the total number of Real Servers and one of the Real Servers is deleted, the minimum will automatically reduce by one.

When using content rules in a SubVS, the minimum number of Real Servers required has a slightly different meaning. A rule is said to be available and can be matched if and only if the number of available Real Servers with that rule assigned to them is greater than the limit. If the number of available Real Servers is below this limit, the rule can never be matched.

If a Real Server on a SubVS is marked as critical, the SubVS will be marked as down if that Real Server is down. However, the parent Virtual Service will not be marked down unless that SubVS is marked as critical.
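The Enhanced Options rules in this section can be combined into a single status function. This is a sketch under stated assumptions: `RealServer` and `vs_health` are hypothetical names, warnings are only reported when Enhanced Options is enabled, and the automatic reduction of the minimum after a Real Server is deleted is approximated by capping the minimum at the current number of Real Servers. Content rules in SubVSs are not modeled.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class RealServer:
    address: str
    up: bool
    critical: bool = False  # the Real Server's Critical setting

def vs_health(servers: list[RealServer], enhanced: bool = False,
              minimum: int = 1) -> tuple[bool, Optional[str]]:
    """Return (virtual_service_up, log_level) under the rules described above."""
    # A Critical Real Server that is down always takes the Virtual Service down.
    if any(s.critical and not s.up for s in servers):
        return False, "critical"
    available = sum(1 for s in servers if s.up)
    if enhanced:
        # Cap the minimum at the number of Real Servers (approximates the
        # automatic reduction when a Real Server is deleted).
        required = max(1, min(minimum, len(servers)))
    else:
        required = 1  # default: one available Real Server is enough
    if available < required:
        return False, "critical"
    if enhanced and available < len(servers):
        return True, "warning"  # some servers down, minimum still met
    return True, None
```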

HTTP or HTTPS Protocol Health Checking

When either the HTTP Protocol or HTTPS Protocol option is selected, a number of extra options are available as described below.

![]()

Figure 3‑28: Real Servers section

The Post Data option only appears if the POST HTTP Method is selected.

The Reply 200 Pattern option only appears if either the POST or GET HTTP Method is selected.

By default, the health checker tries to access the URL to determine if the machine is available. A different URL can be specified here.

Use HTTP/1.1

By default, the LoadMaster uses HTTP/1.0. However, you may opt to use HTTP/1.1, which operates more efficiently.

HTTP/1.1 Host

This field will only be visible if ‘Use HTTP/1.1’ is selected.

When using HTTP/1.1 checking, the Real Servers require a hostname to be supplied in each request. If no value is set, this value is the IP address of the Virtual Service.

To send SNI host information in HTTPS health checks, please enable Use HTTP/1.1 in the Real Servers section of the relevant Virtual Service(s) and specify a host header. If this is not set, the IP address of the Real Server will be used.

HTTP Method

When accessing the health check URL, the system can use either the HEAD, GET or POST method.

Post Data

This field will only be available if the HTTP Method is set to POST. When using the POST method, up to 2047 characters of POST data can be passed to the server.
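To make the effect of the HTTP version, host and method settings concrete, the following sketch assembles the kind of request line and headers a health check would send. It is illustrative only. The `Content-Length` header for POST data and the function name are assumptions, and other headers the LoadMaster actually sends (for example its User-Agent) are not reproduced.

```python
MAX_POST_DATA = 2047  # characters of POST data, per the Post Data field

def build_health_check_request(url: str, method: str = "HEAD",
                               use_http11: bool = False, host: str = "",
                               vs_ip: str = "", post_data: str = "") -> str:
    """Assemble an illustrative HTTP health check request as a string."""
    method = method.upper()
    if method not in ("HEAD", "GET", "POST"):
        raise ValueError("the health check method must be HEAD, GET or POST")
    if method != "POST":
        post_data = ""  # Post Data is only used with the POST method
    if len(post_data) > MAX_POST_DATA:
        raise ValueError(f"POST data is limited to {MAX_POST_DATA} characters")
    version = "HTTP/1.1" if use_http11 else "HTTP/1.0"
    lines = [f"{method} {url} {version}"]
    if use_http11:
        # HTTP/1.1 needs a Host header; default to the Virtual Service IP address.
        lines.append(f"Host: {host or vs_ip}")
    if post_data:
        lines.append(f"Content-Length: {len(post_data.encode('utf-8'))}")
    return "\r\n".join(lines) + "\r\n\r\n" + post_data
```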

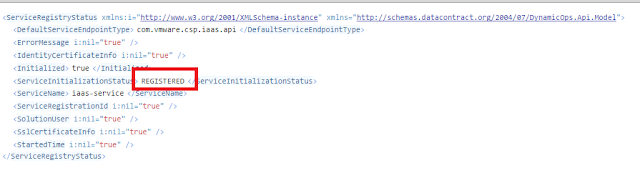

Reply 200 Pattern

When using the GET or the POST method, the contents of the returned response message can be checked. If the response contains the string specified by this Regular Expression, the machine is determined to be up. The response will have all HTML formatting information removed before the match is performed. Only the first 4K of response data can be matched.

The LoadMaster will only check for this phrase if the reply from the server is a 200 code. If the reply is something else, the page will be marked as down without checking for the phrase. However, if the reply is a redirect (code 302), the page is not marked as down. This is because the LoadMaster assumes that the phrase will not be present, and taking the service down would make the redirect useless.

If the pattern starts with a caret ‘^’ symbol, it inverts the pattern response.

Both Regular Expressions and Perl Compatible Regular Expressions (PCRE) can be used to specify strings.
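The Reply 200 Pattern evaluation can be sketched in Python. The order follows the description above: status code first, then HTML stripping, then a regular expression search over the first 4K, with a leading caret inverting the result. Python's `re` module stands in for the LoadMaster's regular expression engines. Treating 4K as 4096 bytes and stripping tags with a simple `<...>` pattern are simplifying assumptions.

```python
import re

MAX_MATCH_BYTES = 4096  # "first 4K" interpreted as 4096 bytes (assumption)

def reply_pattern_check(status: int, body: bytes, pattern: str) -> bool:
    """Return True if the Real Server should be considered up."""
    if status == 302:
        return True   # redirects are not marked down
    if status != 200:
        return False  # any other reply is marked down without checking the pattern
    invert = pattern.startswith("^")
    if invert:
        pattern = pattern[1:]  # a leading caret inverts the result instead of anchoring
    text = body[:MAX_MATCH_BYTES].decode("utf-8", errors="replace")
    text = re.sub(r"<[^>]*>", "", text)  # strip HTML markup before matching
    found = re.search(pattern, text) is not None
    return not found if invert else found
```

Stripping the markup is what lets a pattern such as `Status: OK` match a page where the word OK is wrapped in its own tag.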

Custom Headers

Here you can specify up to 4 additional headers/fields which will be sent with each health check request. Clicking the Show Headers button will show the entry fields. The first field is where you define the key for the custom header that is to be part of the health check request. The second field is the value of the custom header that is to be sent as part of the health check request. Once the information is input, click the Set Header button.

Each of the headers can be up to a maximum of 20 characters long and the fields can be up to a maximum of 100 characters long. However, the maximum allowed number of characters in total for the 4 header/fields is 256.

The following special characters are allowed in the Custom Headers fields:

; . ( ) / + = - _

If Use HTTP/1.1 is enabled, the Host header is still sent to the Real Server as usual. This can be overridden by specifying a Host entry in the additional headers. The User-Agent can be overridden in the same way. If a Real Server uses adaptive scheduling, the additional headers specified in the health check are also sent when retrieving the adaptive information.
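The length and character limits for custom headers can be checked before entering them in the WUI. The following Python function is a hypothetical pre-flight check, not part of the LoadMaster: the name `validate_custom_headers` is illustrative, and it assumes that letters and digits are allowed in addition to the documented special characters (whether spaces are accepted is not documented, so they are rejected here).

```python
import re
from typing import Dict, List

MAX_HEADERS = 4
MAX_KEY_LEN = 20
MAX_VALUE_LEN = 100
MAX_TOTAL_LEN = 256
# Assumption: letters and digits plus the documented special characters.
ALLOWED = re.compile(r"[A-Za-z0-9;.()/+=\-_]*")


def validate_custom_headers(headers: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the headers fit the limits."""
    problems = []
    if len(headers) > MAX_HEADERS:
        problems.append(f"too many headers: {len(headers)} > {MAX_HEADERS}")
    total = 0
    for key, value in headers.items():
        if len(key) > MAX_KEY_LEN:
            problems.append(f"key {key!r} longer than {MAX_KEY_LEN} characters")
        if len(value) > MAX_VALUE_LEN:
            problems.append(f"value for {key!r} longer than {MAX_VALUE_LEN} characters")
        for text in (key, value):
            if not ALLOWED.fullmatch(text):
                problems.append(f"disallowed character in {text!r}")
        # The 256-character limit applies to all keys and values combined.
        total += len(key) + len(value)
    if total > MAX_TOTAL_LEN:
        problems.append(f"combined length {total} exceeds {MAX_TOTAL_LEN}")
    return problems
```

Note that four maximum-length pairs (4 × 120 characters) would exceed the 256-character total, so the combined check matters even when every individual field is within its own limit.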

It is possible to perform a health check using an authenticated user: enable Use HTTP/1.1, select HEAD as the HTTP Method, enter the username in the first Custom Header text box and the password in the second box.

To send SNI host information in HTTPS health checks, enable Use HTTP/1.1 in the Real Servers section of the relevant Virtual Service(s) and specify a host header. If this is not set, the IP address of the Real Server is used.

Rules: If any of the Real Servers have Content Switching rules assigned to them, the Rules column appears in the Real Servers section. For each Real Server, this column displays a button showing the number of rules assigned to it (or None if no rules are assigned).

Clicking the button in the Rules column opens the Rules Management screen.


Figure 3‑29: Rules

From within this screen you can Add or Delete the rules assigned to a Real Server.

Binary Data Health Checking

When Binary Data is selected as the health check method, the additional fields described below are available.


Figure 3‑30: Binary Data health check

Data to Send: Specify a hexadecimal string to send to the Real Server.

This hexadecimal string must contain an even number of characters.

Reply Pattern: Specify the hexadecimal string to search for in the response sent back from the Real Server. If the LoadMaster finds this pattern in the response, the Real Server is considered up; if the pattern is not found, the Real Server is marked as down.

This hexadecimal string must contain an even number of characters.

Find Match Within: When a response is returned, the LoadMaster searches it for the Reply Pattern, examining up to the number of bytes specified in this field.

Setting this to 0 means that the search is not limited: data is read from the Real Server until a match is found, up to a maximum of 8 KB.

Setting the value to less than the length of the Reply Pattern means that the check behaves as if the value were set to 0, i.e. all data read (up to 8 KB) is searched.
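The Binary Data fields combine as follows in a minimal Python sketch. The function names are hypothetical, and two details are assumptions rather than documented behaviour: the "length of the reply string" is compared in bytes (two hex characters per byte), and a Find Match Within value larger than 8 KB is still capped at 8 KB.

```python
MAX_READ = 8 * 1024  # at most 8 KB is read from the Real Server


def parse_hex(value: str) -> bytes:
    """Hex strings must have an even number of characters (two per byte)."""
    if len(value) % 2 != 0:
        raise ValueError("hexadecimal string must contain an even number of characters")
    return bytes.fromhex(value)


def binary_check(response: bytes, reply_pattern_hex: str, find_within: int) -> bool:
    """Return True if the Real Server should be considered up."""
    pattern = parse_hex(reply_pattern_hex)
    # 0, or a limit shorter than the pattern itself, means "search everything read".
    if find_within == 0 or find_within < len(pattern):
        limit = MAX_READ
    else:
        # Assumption: larger limits are still capped at the 8 KB read maximum.
        limit = min(find_within, MAX_READ)
    return pattern in response[:limit]
```

For instance, with a Reply Pattern of `deadbeef` (4 bytes), a Find Match Within value of 2 is shorter than the pattern and therefore behaves like 0.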

Add a Real Server

Clicking the Add New button brings you to the following screen, where the properties of the Real Server are set.


Figure 3‑31: Real Server Parameters

Allow Remote Addresses: By default only Real Servers on local networks can be assigned to a Virtual Service. Enabling this option will allow a non-local Real Server to be assigned to the Virtual Service.

To make the Allow Remote Addresses option visible, Enable Non-Local Real Servers must be selected (in System Configuration > Miscellaneous Options > Network Options). Also, Transparency must be disabled in the Virtual Service.

When alternative gateways/non-local Real Servers are set up, health checks are routed through the default gateway.

Real Server Address: The Real Server IP address. This is not editable when modifying a Real Server.

Port: The forwarding port of the Real Server. This field is editable, so the port may be altered later if required.

Forwarding Method: Either NAT (Network Address Translation) or Route (Direct) forwarding. The available options are dependent on the other modes selected for the service.

Weight: The weight of the Real Server, as used by the Weighted Round Robin, Weighted Least Connection and Adaptive scheduling methods. The default initial weight is 1000, the maximum is 65535 and the minimum is 1. A good benchmark is to give each Real Server a weight relative to its processor speed: for example, if server1 has four times the capacity of server2, assign a weight of 4000 to server1 and 1000 to server2.
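To see how relative weights translate into traffic, the following Python sketch implements "smooth" weighted round robin, one common form of the technique. It is an illustration only: the LoadMaster's internal scheduler is not documented here, and `smooth_wrr` is a hypothetical name. With weights of 4000 and 1000, server1 receives four of every five connections.

```python
from collections import Counter
from typing import Dict, List


def smooth_wrr(weights: Dict[str, int], picks: int) -> List[str]:
    """Smooth weighted round robin over the given server weights."""
    for name, weight in weights.items():
        # Documented weight range for a Real Server.
        if not 1 <= weight <= 65535:
            raise ValueError(f"weight for {name} must be between 1 and 65535")
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    order = []
    for _ in range(picks):
        # Every server accumulates its weight; the highest running total wins.
        for name, weight in weights.items():
            current[name] += weight
        chosen = max(current, key=current.get)
        # The winner is pushed back by the total, spreading its picks out.
        current[chosen] -= total
        order.append(chosen)
    return order


if __name__ == "__main__":
    print(Counter(smooth_wrr({"server1": 4000, "server2": 1000}, 5000)))
```

Only the ratio between weights matters here, so 4000/1000 and 4/1 produce the same sequence.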

Connection Limit: The maximum number of open connections that a Real Server will accept before it is taken out of the rotation. This is only available for Layer 7 traffic. The limit stops new connections from being created, but requests that already have persistent connections to the server are still allowed.

A maximum of 1024 Real Servers is allowed. This is a global limit shared among all Virtual Services: for example, if one Virtual Service has 1000 Real Servers, the remaining Virtual Services can have only 24 Real Servers between them.

For the LoadMaster Exchange, there is a limit of six Real Servers that may be configured.

Click the Add This Real Server button to add the Real Server to the pool.

Critical: This option only appears if the Enhanced Options check box is enabled.

In the Real Servers section of the Virtual Service modify screen, there is a Critical check box for each Real Server. Enabling this option indicates that the Real Server is required for the Virtual Service to be considered available: the Virtual Service will be marked as down if that Real Server has failed or is disabled.

If a Real Server on a SubVS is marked as critical, the SubVS will be marked as down if that Real Server is down. However, the parent Virtual Service will not be marked as down unless that SubVS is itself marked as critical.

This option overrides the Minimum number of RS required for VS to be considered up field. For example, if the minimum is set to two and only one Real Server is down, the Virtual Service will still be marked as down if that Real Server is set to critical.

In all cases, if the Virtual Service is considered to be down and the Virtual Service has a sorry server or an error message configured, these will be used.
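The availability rules for critical Real Servers can be expressed as a small Python function. This is a sketch under stated assumptions, not KEMP's logic: `Member` and `service_is_up` are hypothetical names, the minimum defaults to 1 here, a SubVS is treated as one member of its parent's Real Server list, and sorry servers and error messages are not modelled.

```python
from typing import List, NamedTuple


class Member(NamedTuple):
    """A Real Server, or a SubVS listed among its parent's Real Servers."""
    up: bool               # False if it has failed or is disabled
    critical: bool = False


def service_is_up(members: List[Member], min_required: int = 1) -> bool:
    # A critical member that is down takes the service down, overriding
    # the "Minimum number of RS required" setting.
    if any(m.critical and not m.up for m in members):
        return False
    # Otherwise the service is up when enough members are up.
    return sum(1 for m in members if m.up) >= min_required


# A SubVS with a failed critical Real Server is down...
sub_vs_up = service_is_up([Member(up=False, critical=True), Member(up=True)])
# ...but its parent stays up unless the SubVS itself is marked critical.
parent_up = service_is_up([Member(up=sub_vs_up), Member(up=True)])
parent_up_if_critical = service_is_up([Member(up=sub_vs_up, critical=True), Member(up=True)])
```

The documented example (minimum of two, one critical Real Server down) corresponds to `service_is_up([Member(False, True), Member(True), Member(True)], min_required=2)`, which returns `False`.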

Modify a Real Server

When you click the Modify button of a Real Server, the following options are available:


Figure 3‑32: Real Server options

Real Server Address: This field shows the address of the Real Server. It is not editable.

Port: The port on the Real Server that is to be used.

Forwarding Method: The type of forwarding method to be used. The default is NAT; Direct Server Return can only be used with L4 services.

Weight: When using Weighted Round Robin scheduling, the weight of a Real Server indicates the relative proportion of traffic that should be sent to it. Servers with higher values receive more traffic.

Connection Limit: The maximum number of open connections that can be sent to the Real Server before it is taken out of rotation. The maximum limit is 100,000.

Manage Templates

Templates make the setting up of Virtual Services easier by automatically creating and configuring the parameters for a Virtual Service. Before a template can be used to configure a Virtual Service, it must be imported and installed on the LoadMaster.


Figure 3‑33: Manage Templates

Click the Choose File button, select the template you wish to install and click the Add New Template button to install it. The template is then available for use when adding a new Virtual Service.

Click the Delete button to remove a template.

Manage SSO Domains

Before using the Edge Security Pack (ESP) the user must first set up a Single Sign-On (SSO) Domain on the LoadMaster. The SSO Domain is a logical grouping of Virtual Services which are authenticated by an LDAP server.

The maximum number of SSO domains that are allowed is 128.


Figure 3‑34: Manage Single Sign On Options

Click the Manage SSO Domains menu option to open the Manage Single Sign On Options screen.

Single Sign On Domains

Two types of SSO domain can be created: client side and server side.

Client Side configurations allow you to set the Authentication Protocol to LDAP, RADIUS, RSA-SecurID, Certificates or RADIUS and LDAP.

Server Side configurations allow you to set the Authentication Protocol to Kerberos Constrained Delegation (KCD).

To add a new SSO Domain, enter the name of the domain in the Name field and click the Add button. The name entered here does not need to relate to the allowed hosts within the Single Sign On Domain.

When using the Permitted Groups field in ESP Options, you need to ensure that the SSO domain set here is the directory for the permitted groups. For example, if the SSO Domain is set to webmail.example and webmail is not the directory for the permitted groups within example.com, it will not work. Instead, the SSO Domain needs to be set to .example.com.

If the Domain/Realm field is not set, the domain Name set when initially adding an SSO domain will be used as the Domain/Realm name.

Client Side (Inbound) SSO Domains


Figure 3‑35: Manage Domain screen

Authentication Protocol: This drop-down list allows you to select the transport protocol used to communicate with the authentication server. The options are:

- LDAP

- RADIUS

- RSA-SecurID

- Certificates

- RADIUS and LDAP

The fields displayed on this screen change depending on the Authentication Protocol selected.

LDAP Configuration Type: Select the type of LDAP configuration. The options are:

- Unencrypted

- StartTLS

- LDAPS

This option is only available if the Authentication Protocol is set to LDAP.

RADIUS and LDAP Configuration Type: Select the type of RADIUS and LDAP configuration. The options are:

- RADIUS and Unencrypted LDAP

- RADIUS and StartTLS LDAP

- RADIUS and LDAPS

This option is only available if the Authentication Protocol is set to RADIUS and LDAP.

LDAP/RADIUS/RSA-SecurID Server(s): Type the IP addresses of the server or servers used to authenticate the domain into the Server(s) field and click the Set Server(s) button.

Multiple server addresses can be entered within this text box. Each entry must be separated by a space.

RADIUS Shared Secret: The shared secret to be used between the RADIUS server and the LoadMaster.

This field will only be available if the Authentication Protocol is set to RADIUS or RADIUS and LDAP.

LDAP Administrator and LDAP Administrator Password: These text boxes are only visible when the Authentication Protocol is set to Certificates.

These details are used to check the LDAP database to determine if a user from the certificate exists.

Check Certificate to User Mapping: This option is only available when the Authentication Protocol is set to Certificates. When this option is enabled, in addition to checking the validity of the client certificate, the LoadMaster also checks the client certificate against the altSecurityIdentities (ASI) attribute of the user in Active Directory.

If this option is enabled and the check fails, the login attempt will fail. If this option is not enabled, only a valid client certificate (with the username in the SubjectAltName (SAN)) is required to log in, even if the altSecurityIdentities attribute for the user is not present or not matching.

Domain/Realm: The login domain to be used. This is also used with the logon format to construct the normalized username, for example:

- Principalname: username@domain

- Username: domain\username

If the Domain/Realm field is not set, the Domain name set when initially adding an SSO domain will be used as the Domain/Realm name.
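The normalization described above, including the fallback to the SSO domain name, can be sketched in Python. The function and parameter names are hypothetical; only the two formats listed above are modelled, and other logon formats are rejected rather than guessed.

```python
def normalize_username(user: str, logon_format: str,
                       realm: str = "", sso_domain_name: str = "") -> str:
    """Hypothetical sketch of building the normalized username."""
    # An empty Domain/Realm falls back to the name entered when the SSO domain was added.
    domain = realm or sso_domain_name
    if logon_format == "Principalname":
        return f"{user}@{domain}"
    if logon_format == "Username":
        return f"{domain}\\{user}"
    raise ValueError(f"unsupported logon format: {logon_format}")
```

For example, `normalize_username("jsmith", "Principalname", realm="example.com")` yields `jsmith@example.com`, while the Username format with a realm of `EXAMPLE` yields `EXAMPLE\jsmith`.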

RSA Authentication Manager Config File: This file needs to be exported from the RSA Authentication Manager.

RSA Node Secret File: A node secret must be generated and exported in the RSA Authentication Manager.

It is not possible to upload the RSA node secret file until the RSA Authentication Manager configuration file is uploaded. The node secret file is dependent on the configuration file.

Logon Format: This drop-down list allows you to specify the format of the login information that the client has to enter.

The options available vary depending upon which Authentication Protocol is selected.

Not Specified: The username will have no normalization applied to it - it will be taken as it is typed.

Principalname: Selecting this as the