In this multi-part article we will migrate from Exchange 2010 to Exchange 2016. We’ll now create our first volume for the Page File. In our design, this is not to be located on a mount point, so we don’t need to create a folder structure to support it.

If you would like to read the other parts in this article series please go to:

Figure 1: Creating a new volume for the page file

The New Simple Volume Wizard will launch. We’ll be provided with the opportunity to assign our drive letter, mount in an empty folder (which we will use for the database and log volumes) or not to assign a drive letter or path. We’ll choose a drive letter, in this case, D:

![]()

Figure 2: Assigning a drive letter to our page file disk

After choosing the drive letter, we’ll then move on to formatting our first disk.

Figure 3: Formatting our page file disk

After formatting the page file volume, we will format and mount our database and log volumes.

The process to create the ReFS volume with the correct settings requires PowerShell.

An example function is shown below that we will use to create the mount point, create a partition and format the volume with the right setting.

Check and alter the script for your needs. To use the function, paste the script into a PowerShell prompt. The new function will be available as a cmdlet, Format-ExchangeDisk.

Before using the script we need to know which disks to format. In Disk Management examine the list of disks. We’ll see the first one to format as ReFS is Disk 2:

Figure 4: Checking the first disk number to use for Exchange data

Format the disk using the PowerShell function we’ve created above:

Figure 5: Formatting an Exchange data disk using ReFS

After formatting all disks, they should show with correct corresponding labels:

Figure 6: Viewing disks after formatting as ReFS

To configure the Page file size, right click on the Start Menu and choose System:

Figure 7: Accessing system settings

The system information window should open within the control panel. Choose Advanced system settings, as shown below:

Figure 8: Navigating to Advanced system settings

Next, the System Properties window will appear with the Advanced tab selected. Within Performance, choose Settings:

Figure 9: Opening Performance settings

We will then adjust the Virtual Memory settings and perform the following actions:

Figure 10: Configuring the page file size

After making this change you may be asked to reboot.

You don’t need to do so at this stage as we will be installing some pre-requisites to support the Exchange installation.

After installation of the components a reboot is required before we can install the other pre-requisites needed for Exchange 2016 installation.

First we’ll install the .Net Framework 4.5.2.

Figure 11: Installing .Net Framework 4.5.2

Next, install the Microsoft Unified Communications Managed API Core Runtime, version 4.0.

After download, launch the installer. After copying a number of files required, the installer provides information about the components it will install as part of the Core Runtime setup:

Figure 12: Installing the Unified Comms Managed API

No special configuration is needed after install as it’s a supporting component used by Unified Messaging.

Our final pre-requisite is to download and extract the Exchange 2016 installation files themselves.

At the time of writing, the latest version of Exchange 2016 is the RTM version.

Note that because each Cumulative Update and Service Pack for Exchange 2016, you do not need to install the RTM version and update if a CU/SP has been released. Download the latest version available.

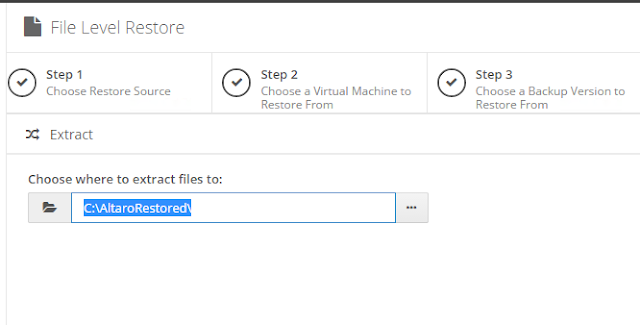

After download, run the self-extracting executable and choose an appropriate location to extract files to:

Figure 13: Extracting the files for Exchange 2016

The default installation location for Exchange 2016 is within C:\Program Files\Microsoft\Exchange Server\V15.

While logged on as a domain user that's a member of the Enterprise Admins and Schema Admins, launch an elevated command prompt and change directory into the location we've extracted the Exchange setup files, C:\Exchange2016.

Execute setup.exe with the following switches to prepare the Active Directory schema:

![]()

Figure 14: Preparing the schema for Exchange 2016

Expect the schema update to take between 5 and 15 minutes to execute.

Next prepare Active Directory. This will prepare the Configuration Container of our Active Directory forest, upgrading the AD objects that support the Exchange Organization. We'll perform this preparation using the following command:

Figure 15: Preparing Active Directory for Exchange 2016

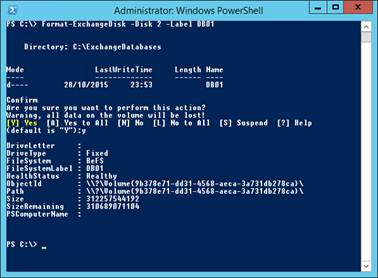

Our final step to prepare Active Directory is to run the domain preparation.

Our smaller organization is comprised of a single domain, and therefore we can run the following command:

Figure 16: Preparing the domain for Exchange 2016

If you have more than one domain within the same Active Directory forest with mail-enabled users, then you will need to prepare each domain. The easiest way to prepare multiple domains is to replace the /PrepareDomain switch with /PrepareAllDomains.

In addition to the /Mode switch we need to specify the role that we’ll install, the Mailbox role.

Figure 17: Installing Exchange 2016 server

After a successful installation, reboot the server.

If you would like to read the other parts in this article series please go to:

If you would like to read the other parts in this article series please go to:

- How to Migrate from Exchange 2010 to Exchange 2016 (Part 1)

- How to Migrate from Exchange 2010 to Exchange 2016 (Part 3)

- How to Migrate from Exchange 2010 to Exchange 2016 (Part 4)

Preparing the server for Exchange 2016

Configuring disks

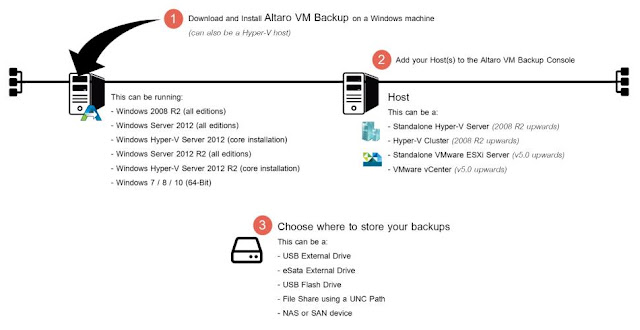

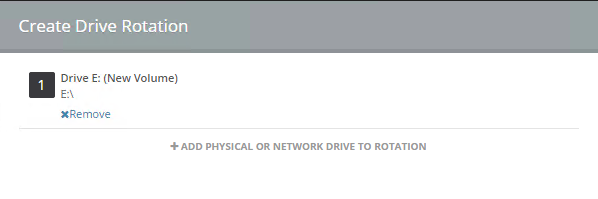

We’ll now create our first volume, for the page file. In our design, this volume is not located on a mount point, so we don’t need to create a folder structure to support it. We can simply right-click the disk's unallocated space and choose New Simple Volume:

Figure 1: Creating a new volume for the page file

The New Simple Volume Wizard will launch. It lets us assign a drive letter, mount the volume in an empty folder (which we will use for the database and log volumes), or assign neither a drive letter nor a path. For the page file volume we’ll choose a drive letter, in this case D:

Figure 2: Assigning a drive letter to our page file disk

After choosing the drive letter, we’ll then move on to formatting our first disk.

Figure 3: Formatting our page file disk

After formatting the page file volume, we will format and mount our database and log volumes.

Creating the ReFS volumes with the correct settings (here, integrity streams disabled) requires PowerShell.

The example function below creates the mount point folder, creates a partition using all available space, formats it as ReFS with integrity streams disabled, and mounts it in that folder.

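```powershell
function Format-ExchangeDisk {
    param(
        [Parameter(Mandatory)][int]$Disk,
        [Parameter(Mandatory)][string]$Label,
        [string]$BaseDirectory = "C:\ExchangeDatabases"
    )
    # Folder that will act as the mount point for this volume
    $MountPath = "$($BaseDirectory)\$($Label)"
    New-Item -ItemType Directory -Path $MountPath -Force | Out-Null
    # A new, uninitialized (RAW) disk must be initialized before partitioning
    if ((Get-Disk -Number $Disk).PartitionStyle -eq 'RAW') {
        Initialize-Disk -Number $Disk -PartitionStyle GPT
    }
    $Partition = Get-Disk -Number $Disk | New-Partition -UseMaximumSize
    if ($Partition) {
        # Format as ReFS with integrity streams disabled, then mount in the folder
        $Partition | Format-Volume -FileSystem ReFS -NewFileSystemLabel $Label -SetIntegrityStreams:$false
        $Partition | Add-PartitionAccessPath -AccessPath $MountPath
    }
}
```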

Check and alter the script for your needs. To use the function, paste it into an elevated PowerShell prompt; it will then be available as a command, Format-ExchangeDisk.

Before using the function, we need to know which disks to format. Examine the list of disks in Disk Management; here, the first disk to format as ReFS is Disk 2:

Figure 4: Checking the first disk number to use for Exchange data

Format the disk using the PowerShell function we’ve created above:

Figure 5: Formatting an Exchange data disk using ReFS
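When several data disks need the same treatment, it can help to write the calls out before running any of them. The short Python sketch below does that planning only: starting from Disk 2, it pairs each consecutive disk number with a label and shows the `Format-ExchangeDisk` call together with the mount path the function will create (`BaseDirectory\Label`). The labels `DB01`, `DB02` and `LOG01` are hypothetical placeholders, and the sketch assumes the data disks are numbered consecutively; confirm the numbers in Disk Management first.

```python
from pathlib import PureWindowsPath


def plan_exchange_disks(first_disk, labels, base_directory=r"C:\ExchangeDatabases"):
    """Return (command, mount_path) pairs for consecutive disk numbers.

    Mirrors Format-ExchangeDisk, which mounts each volume at base_directory\\label.
    Consecutive numbering and the labels themselves are assumptions.
    """
    plan = []
    for offset, label in enumerate(labels):
        disk = first_disk + offset
        mount_path = str(PureWindowsPath(base_directory) / label)
        command = f"Format-ExchangeDisk -Disk {disk} -Label {label}"
        plan.append((command, mount_path))
    return plan


if __name__ == "__main__":
    # Hypothetical labels; replace them with the volume names from your own design.
    for command, path in plan_exchange_disks(2, ["DB01", "DB02", "LOG01"]):
        print(f"{command}    # mounts at {path}")
```

The trailing `#` text is a PowerShell comment, so the printed lines could be pasted into the prompt where the function was defined.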

After all disks are formatted, each should show its corresponding label:

Figure 6: Viewing disks after formatting as ReFS

Configuring page file sizes

The page file on each Exchange server must be configured correctly. Each server should have a page file equal to the amount of RAM plus 10MB, up to a maximum of 32GB + 10MB (32,778MB), with the initial and maximum sizes set to the same value.

To configure the page file size, right-click the Start Menu and choose System:

Figure 7: Accessing system settings

The System window should open in Control Panel. Choose Advanced system settings, as shown below:

Figure 8: Navigating to Advanced system settings

Next, the System Properties window will appear with the Advanced tab selected. Within Performance, choose Settings:

Figure 9: Opening Performance settings

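In Performance Options, select the Advanced tab, choose Change under Virtual memory, and perform the following actions:

- Clear Automatically manage paging file size for all drives

- Select the page file volume (D:), choose Custom size, and set both Initial size and Maximum size to the virtual machine's RAM plus 10MB, for example:

    - 8GB RAM = 8192MB RAM = 8202MB page file

    - 16GB RAM = 16384MB RAM = 16394MB page file

    - 64GB RAM = 65536MB RAM = 32778MB page file (the 32GB cap applies)

- Choose Set, then OK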

Figure 10: Configuring the page file size
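The sizing rule is a capped sum, so it fits in a few lines. The Python sketch below (taking RAM in MB, an assumption of the sketch) makes the cap explicit: every server with more than 32GB of RAM ends up with the same 32,778MB page file.

```python
MB_PER_GB = 1024
RAM_CAP_MB = 32 * MB_PER_GB  # RAM counted toward the page file is capped at 32GB
EXTRA_MB = 10                # the fixed 10MB added on top


def exchange_page_file_mb(ram_mb):
    """Return the page file size in MB, used for both initial and maximum size."""
    if ram_mb <= 0:
        raise ValueError("ram_mb must be positive")
    return min(ram_mb, RAM_CAP_MB) + EXTRA_MB


if __name__ == "__main__":
    for ram_gb in (8, 16, 64):
        size = exchange_page_file_mb(ram_gb * MB_PER_GB)
        print(f"{ram_gb}GB RAM -> {size}MB page file")
```

It prints 8202MB, 16394MB and 32778MB for 8GB, 16GB and 64GB of RAM respectively.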

After making this change you may be asked to reboot. You don’t need to do so at this stage, as the prerequisites we install next also require a reboot.

Configuring Exchange 2016 prerequisites

To install the prerequisites, launch an elevated PowerShell prompt and execute the following command:

```powershell
Install-WindowsFeature AS-HTTP-Activation, Desktop-Experience, NET-Framework-45-Features, RPC-over-HTTP-proxy, RSAT-Clustering, RSAT-Clustering-CmdInterface, RSAT-Clustering-Mgmt, RSAT-Clustering-PowerShell, Web-Mgmt-Console, WAS-Process-Model, Web-Asp-Net45, Web-Basic-Auth, Web-Client-Auth, Web-Digest-Auth, Web-Dir-Browsing, Web-Dyn-Compression, Web-Http-Errors, Web-Http-Logging, Web-Http-Redirect, Web-Http-Tracing, Web-ISAPI-Ext, Web-ISAPI-Filter, Web-Lgcy-Mgmt-Console, Web-Metabase, Web-Mgmt-Console, Web-Mgmt-Service, Web-Net-Ext45, Web-Request-Monitor, Web-Server, Web-Stat-Compression, Web-Static-Content, Web-Windows-Auth, Web-WMI, Windows-Identity-Foundation, RSAT-ADDS
```

After the components are installed, a reboot is required before we can install the remaining prerequisites for Exchange 2016.
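Once the server is back up, one way to confirm that every feature was installed is to export the installed feature names on the server (for example with `Get-WindowsFeature | Where-Object Installed | Select-Object -ExpandProperty Name`) and compare them with the list in the Install-WindowsFeature command. The Python below sketches that comparison; treating the names as a set also absorbs the `Web-Mgmt-Console` entry that appears twice in the command. The `installed` list in the example is a hypothetical stand-in for your server's real output.

```python
# The Install-WindowsFeature command from this article, as one string.
INSTALL_COMMAND = (
    "Install-WindowsFeature AS-HTTP-Activation, Desktop-Experience, NET-Framework-45-Features, "
    "RPC-over-HTTP-proxy, RSAT-Clustering, RSAT-Clustering-CmdInterface, RSAT-Clustering-Mgmt, "
    "RSAT-Clustering-PowerShell, Web-Mgmt-Console, WAS-Process-Model, Web-Asp-Net45, "
    "Web-Basic-Auth, Web-Client-Auth, Web-Digest-Auth, Web-Dir-Browsing, Web-Dyn-Compression, "
    "Web-Http-Errors, Web-Http-Logging, Web-Http-Redirect, Web-Http-Tracing, Web-ISAPI-Ext, "
    "Web-ISAPI-Filter, Web-Lgcy-Mgmt-Console, Web-Metabase, Web-Mgmt-Console, Web-Mgmt-Service, "
    "Web-Net-Ext45, Web-Request-Monitor, Web-Server, Web-Stat-Compression, Web-Static-Content, "
    "Web-Windows-Auth, Web-WMI, Windows-Identity-Foundation, RSAT-ADDS"
)


def parse_feature_list(command):
    """Return the set of feature names listed after 'Install-WindowsFeature'."""
    _, _, names = command.partition("Install-WindowsFeature")
    return {name.strip() for name in names.split(",") if name.strip()}


def missing_features(required, installed):
    """Return required features absent from installed (case-insensitive), sorted."""
    installed_folded = {name.casefold() for name in installed}
    return sorted(name for name in set(required) if name.casefold() not in installed_folded)


if __name__ == "__main__":
    required = parse_feature_list(INSTALL_COMMAND)
    # Hypothetical export; replace with the names reported by Get-WindowsFeature on your server.
    installed = ["Web-Server", "RSAT-ADDS"]
    missing = missing_features(required, installed)
    print(f"{len(missing)} of {len(required)} required features are missing")
    for name in missing:
        print(name)
```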

First we’ll install .NET Framework 4.5.2.

Figure 11: Installing .NET Framework 4.5.2

Next, install the Microsoft Unified Communications Managed API Core Runtime, version 4.0.

After downloading it, launch the installer. Once it has copied the files it needs, the installer lists the components it will install as part of the Core Runtime setup:

Figure 12: Installing the Unified Comms Managed API

No special configuration is needed after install as it’s a supporting component used by Unified Messaging.

Our final pre-requisite is to download and extract the Exchange 2016 installation files themselves.

At the time of writing, the latest version of Exchange 2016 is the RTM version.

Note that because each Cumulative Update (CU) and Service Pack (SP) for Exchange 2016 is a complete build of the product, you do not need to install the RTM version and then update it if a CU or SP has been released. Download the latest version available.
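Because every CU is a full build, choosing what to download amounts to picking the highest build number. Build numbers have to be compared part by part as integers; compared as plain strings, 15.1.1034 would rank below 15.1.396. A minimal Python sketch, using illustrative version strings rather than an authoritative list of releases:

```python
def parse_build(build):
    """Turn a dotted build string such as '15.1.225.42' into a tuple of ints."""
    return tuple(int(part) for part in build.split("."))


def latest_build(builds):
    """Return the highest build number, comparing each dotted part numerically."""
    return max(builds, key=parse_build)


if __name__ == "__main__":
    # Illustrative build strings only.
    candidates = ["15.1.225.42", "15.1.1034.26", "15.1.396.30"]
    print(latest_build(candidates))  # 15.1.1034.26
    print(max(candidates))           # naive string comparison picks 15.1.396.30
```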

After downloading, run the self-extracting executable and choose a location to extract the files to; in this article we use C:\Exchange2016:

Figure 13: Extracting the files for Exchange 2016

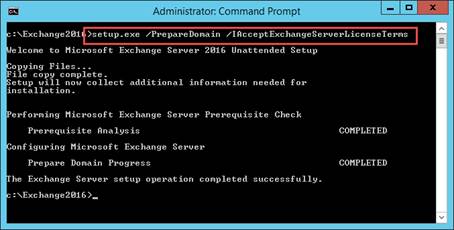

Installing Exchange Server 2016

We will install Exchange Server 2016 via the command line. It’s also possible to perform the setup using the GUI; however, the command-line options allow us to perform each critical step, such as the schema update, individually.

Installation Locations

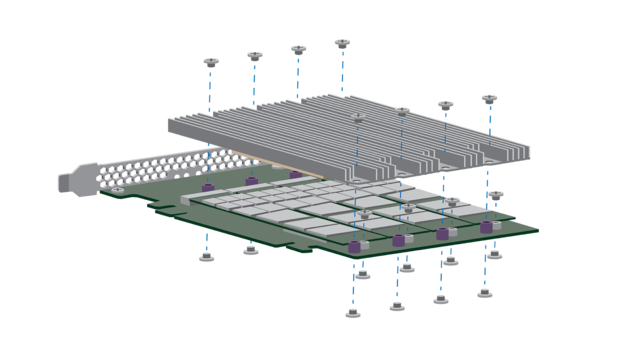

As recommended by the Exchange 2016 Role Requirements Calculator, we will place the transport database (the part of Exchange that temporarily stores in-transit messages) on the system drive, so it makes sense to use the default location for the Exchange installation.

The default installation location for Exchange 2016 is C:\Program Files\Microsoft\Exchange Server\V15.

Preparing Active Directory

The first part of the Exchange 2016 installation is the schema update. This step is irreversible, so it is essential that a full backup of Active Directory is performed before we begin.

While logged on as a domain user that's a member of the Enterprise Admins and Schema Admins groups, launch an elevated command prompt and change directory to the location where we extracted the Exchange setup files, C:\Exchange2016.

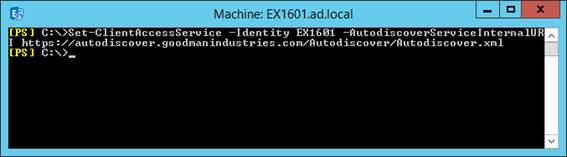

Execute setup.exe with the following switches to prepare the Active Directory schema:

```
setup.exe /PrepareSchema /IAcceptExchangeServerLicenseTerms
```

Figure 14: Preparing the schema for Exchange 2016

Expect the schema update to take between 5 and 15 minutes to execute.

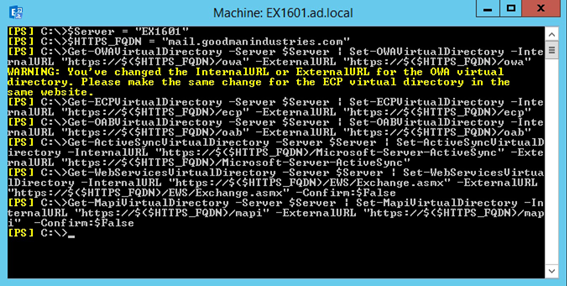

Next, prepare Active Directory. This prepares the Configuration container of our Active Directory forest, upgrading the AD objects that support the Exchange organization. We'll perform this preparation using the following command:

```
setup.exe /PrepareAD /IAcceptExchangeServerLicenseTerms
```

Figure 15: Preparing Active Directory for Exchange 2016

Our final step to prepare Active Directory is to run the domain preparation.

Our smaller organization consists of a single domain, so we can run the following command:

```
setup.exe /PrepareDomain /IAcceptExchangeServerLicenseTerms
```

Figure 16: Preparing the domain for Exchange 2016

If you have more than one domain within the same Active Directory forest with mail-enabled users, then you will need to prepare each domain. The easiest way to prepare multiple domains is to replace the /PrepareDomain switch with /PrepareAllDomains.
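The three preparation commands always run in the same order, and only the domain step depends on how many domains contain mail-enabled users. As a sketch of that logic, the Python below builds the `setup.exe` argument lists for this section without running them. It assumes the setup files were extracted to C:\Exchange2016, and the `mail_domain_count` parameter belongs to this sketch only. Each list could be passed to `subprocess.run(args, check=True)` from an elevated session on the server, once the Active Directory backup is in place.

```python
LICENSE_SWITCH = "/IAcceptExchangeServerLicenseTerms"
DEFAULT_SETUP = r"C:\Exchange2016\setup.exe"  # extraction folder used in this article


def ad_preparation_commands(mail_domain_count, setup_path=DEFAULT_SETUP):
    """Return setup.exe argument lists for schema, AD and domain preparation, in order."""
    if mail_domain_count < 1:
        raise ValueError("mail_domain_count must be at least 1")
    # One domain uses /PrepareDomain; several can be handled with /PrepareAllDomains.
    domain_switch = "/PrepareDomain" if mail_domain_count == 1 else "/PrepareAllDomains"
    return [
        [setup_path, step, LICENSE_SWITCH]
        for step in ("/PrepareSchema", "/PrepareAD", domain_switch)
    ]


if __name__ == "__main__":
    for args in ad_preparation_commands(1):
        print(" ".join(args))
```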

Performing Exchange 2016 Setup

To install Exchange 2016 via setup.exe, we use the /Mode switch to specify that we are performing an install. In addition to /Mode, we need to use /Roles to specify the role to install, the Mailbox role:

```
setup.exe /Mode:Install /Roles:Mailbox /IAcceptExchangeServerLicenseTerms
```

Figure 17: Installing Exchange 2016 server

After a successful installation, reboot the server.
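Before rebooting, it is worth confirming that setup really did complete cleanly. Exchange setup writes its log to C:\ExchangeSetupLogs\ExchangeSetup.log; the Python below is a minimal sketch that lists any lines tagged `[ERROR]`. The exact log line format and the UTF-8 encoding are assumptions of this sketch, so treat an empty result as a quick check rather than proof of success.

```python
LOG_PATH = r"C:\ExchangeSetupLogs\ExchangeSetup.log"


def error_lines(lines):
    """Return lines containing the [ERROR] tag (assumed log format), without line endings."""
    return [line.rstrip("\r\n") for line in lines if "[ERROR]" in line]


def check_setup_log(path=LOG_PATH):
    """Read the setup log (encoding assumed UTF-8) and return its error lines."""
    with open(path, encoding="utf-8", errors="replace") as log:
        return error_lines(log)
```

On the Exchange server, calling `check_setup_log()` returns the matching lines, or an empty list if none were found.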

Summary

In this second part of the series, we completed the server preparation and then installed Exchange Server 2016. In the next part, we will perform post-installation checks and configuration.

then select

then select

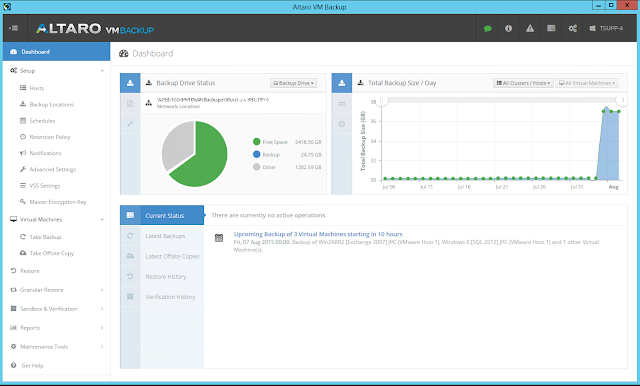

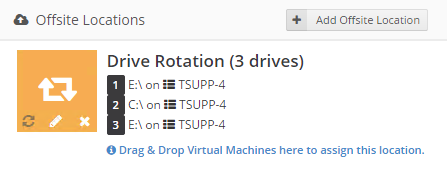

icon appears in your Backup Schedule tile indicating that an offsite copy is configured to run along with this schedule:

icon appears in your Backup Schedule tile indicating that an offsite copy is configured to run along with this schedule:

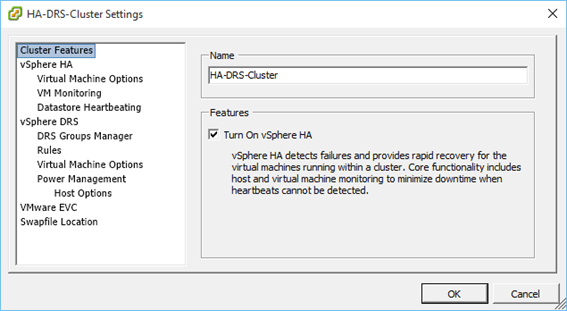

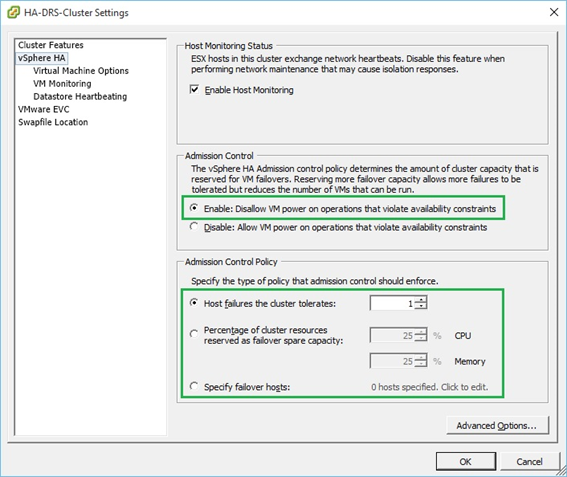

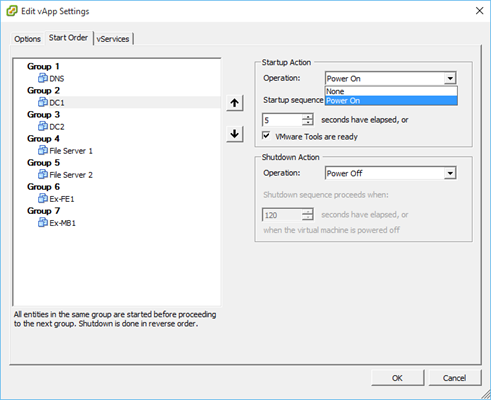

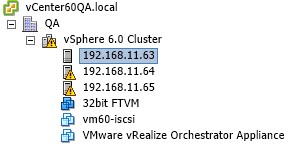

Figure 2 – Enabling DRS on a cluster (using the C# vSphere Client)

Figure 2 – Enabling DRS on a cluster (using the C# vSphere Client)

Figure 4 – Manually running DRS

Figure 4 – Manually running DRS

Figure 11 – DRS status window

Figure 11 – DRS status window

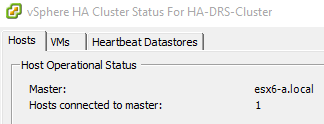

Figure 1 – HA election in progress

Figure 1 – HA election in progress

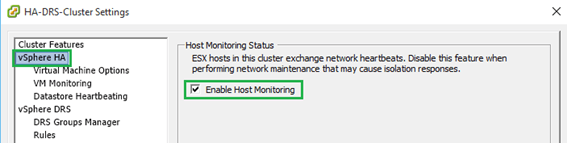

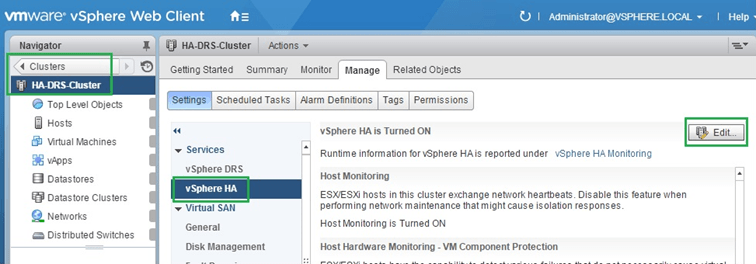

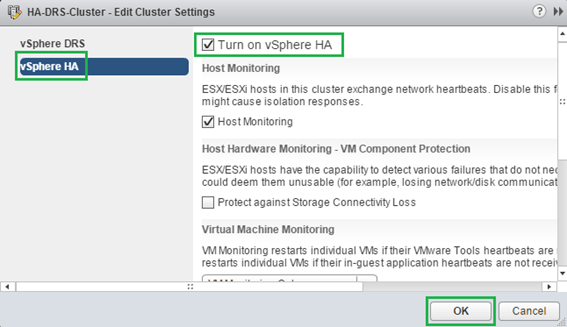

Figure 5 – Turning on HA and Host monitoring on a cluster (using the vSphere Web Client)

Figure 5 – Turning on HA and Host monitoring on a cluster (using the vSphere Web Client)

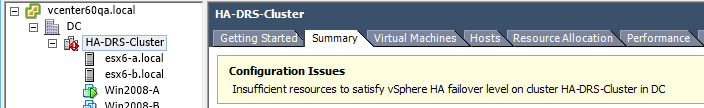

Figure 7- HA insufficient resources warning

Figure 7- HA insufficient resources warning

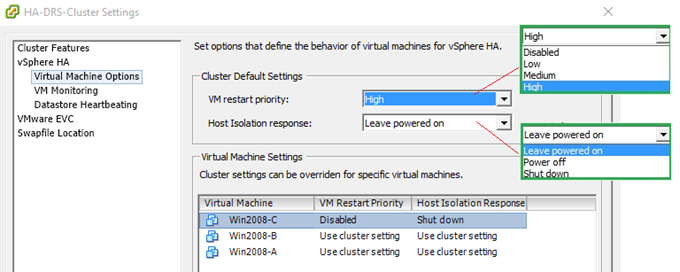

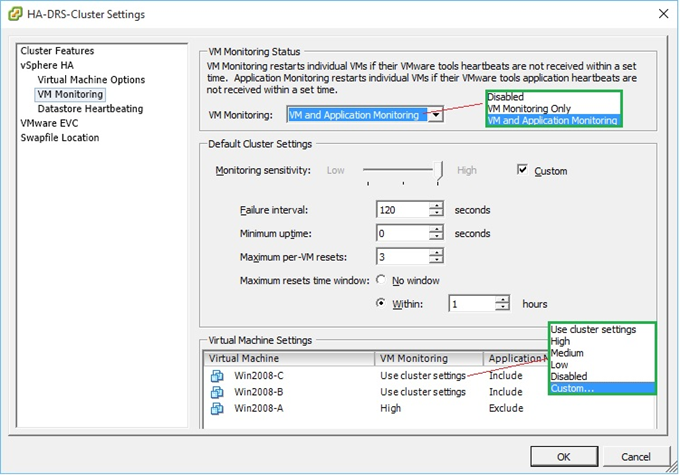

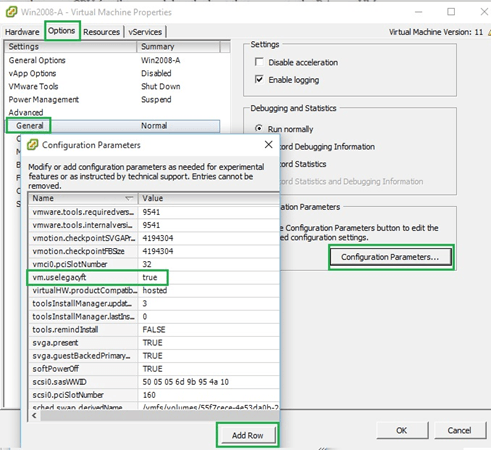

Figure 9 – Setting virtual machines options

Figure 9 – Setting virtual machines options

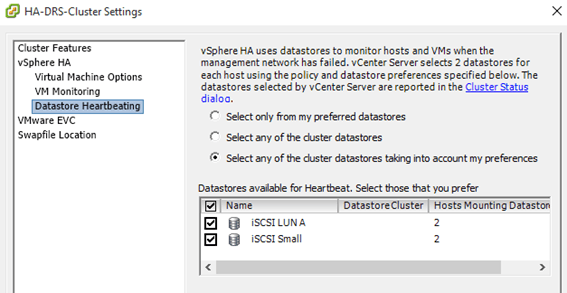

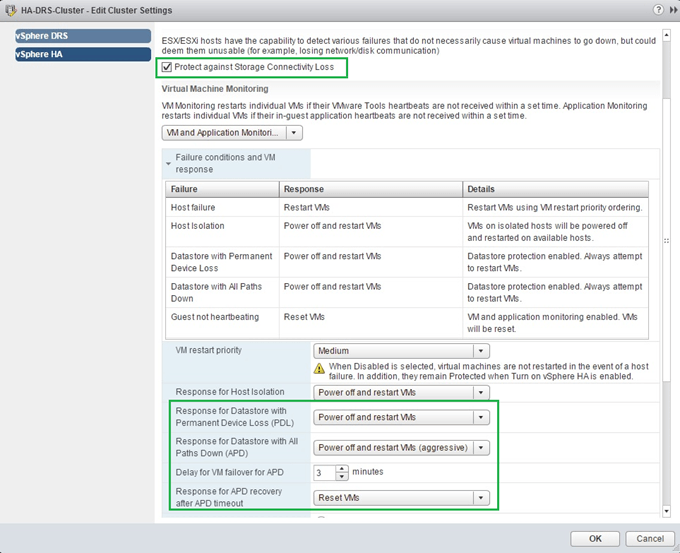

Figure 11 – Configuring PDL and APD settings using the vSphere Web Client

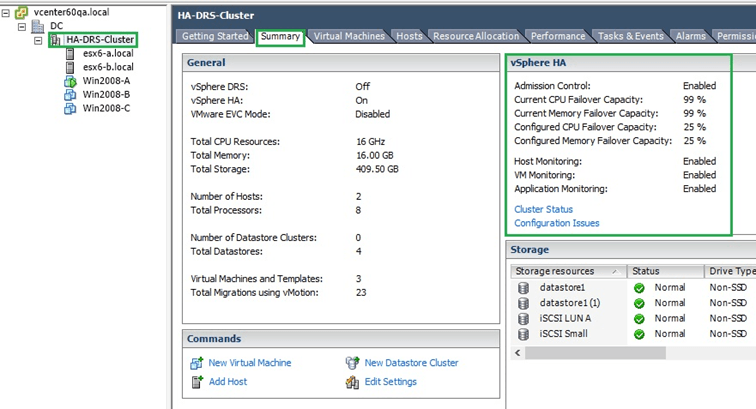

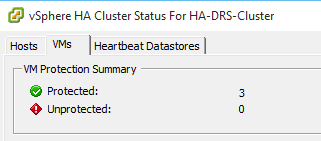

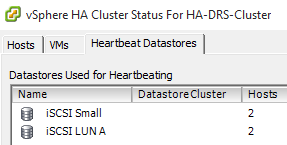

Figure 11 – Configuring PDL and APD settings using the vSphere Web Client Figure 12 – HA metrics and status window

Figure 12 – HA metrics and status window

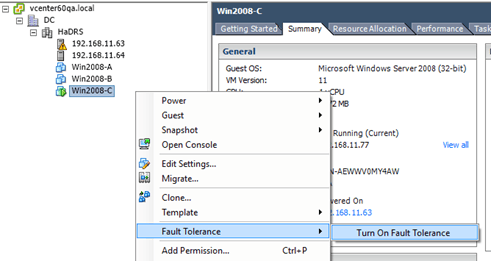

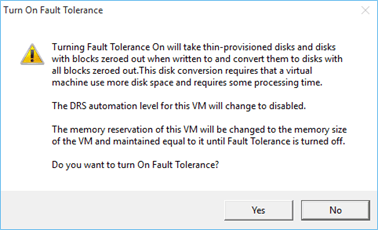

Figure 6 – vmdk scrubbing – changing provisioning type to thick eager zeroed

Figure 6 – vmdk scrubbing – changing provisioning type to thick eager zeroed

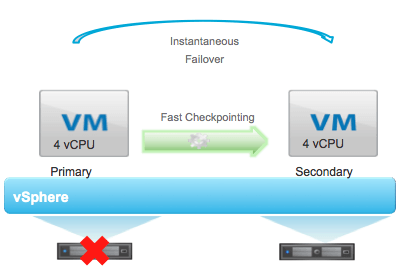

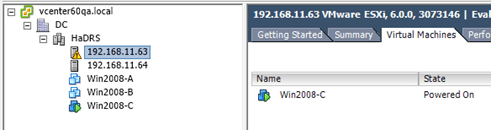

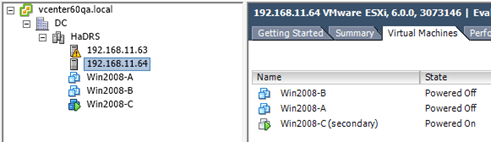

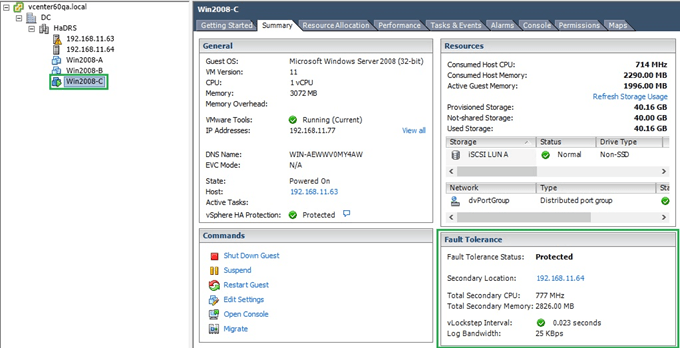

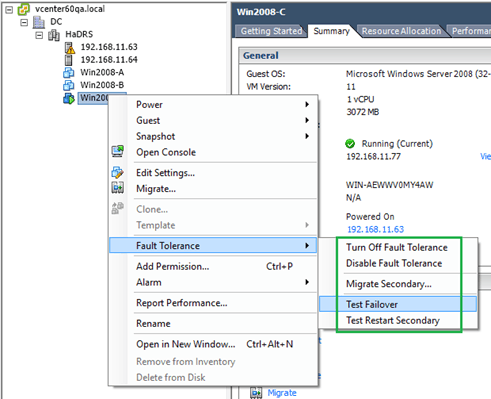

Figure 9 – Fault Tolerance details for a FT protect virtual machine

Figure 9 – Fault Tolerance details for a FT protect virtual machine

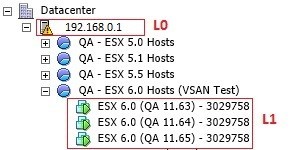

Figure 2 – Nested Hypervisors

Figure 2 – Nested Hypervisors

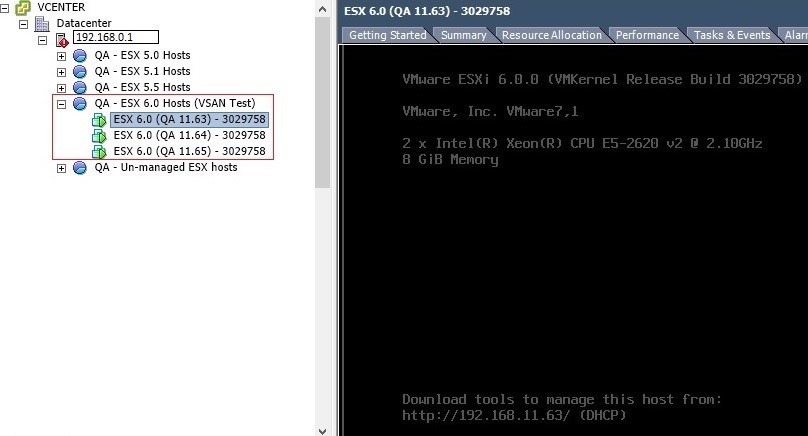

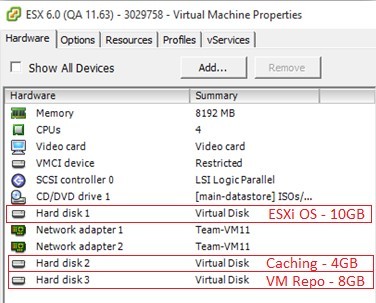

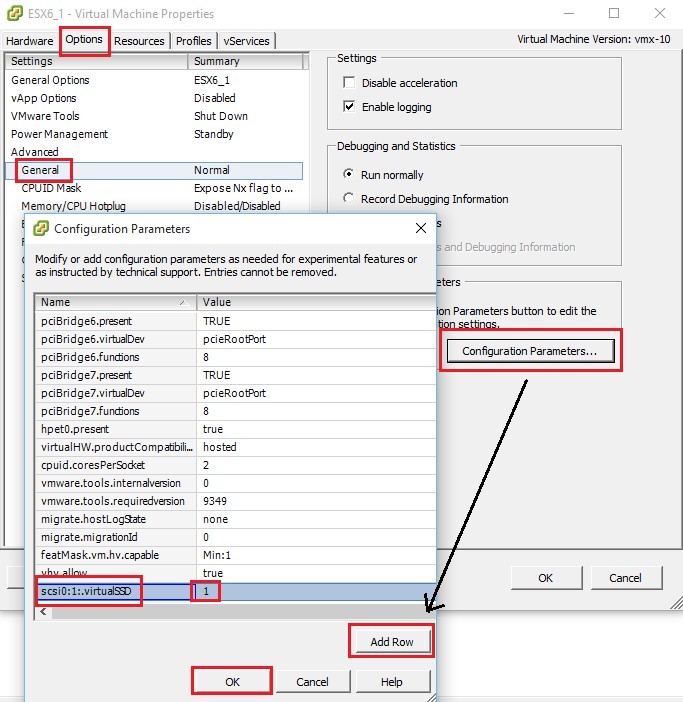

Figure 5 – Emulating an SSD drive Each host has a total of 3 drives, one for the ESXi OS, a second for caching and a third one acting as a repository for the virtual machines we eventually deploy. The hard drive capacities I chose are all arbitrary and should by no means be used for production environments.

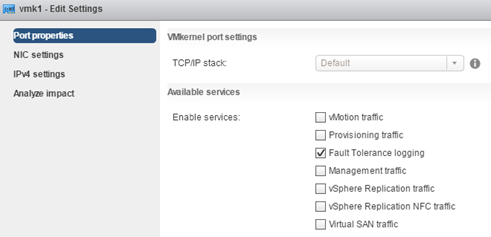

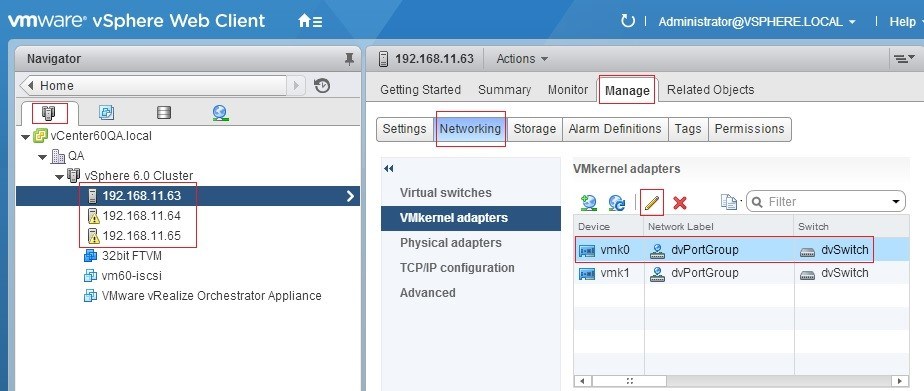

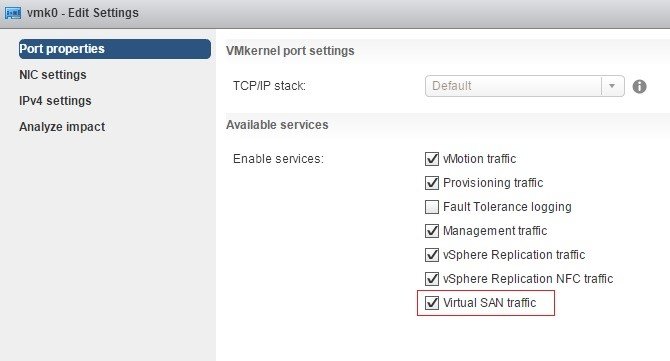

Figure 5 – Emulating an SSD drive Each host has a total of 3 drives, one for the ESXi OS, a second for caching and a third one acting as a repository for the virtual machines we eventually deploy. The hard drive capacities I chose are all arbitrary and should by no means be used for production environments. Figure 6 – VMkernel settings

Figure 6 – VMkernel settings Figure 7 – Allowing VSAN traffic through

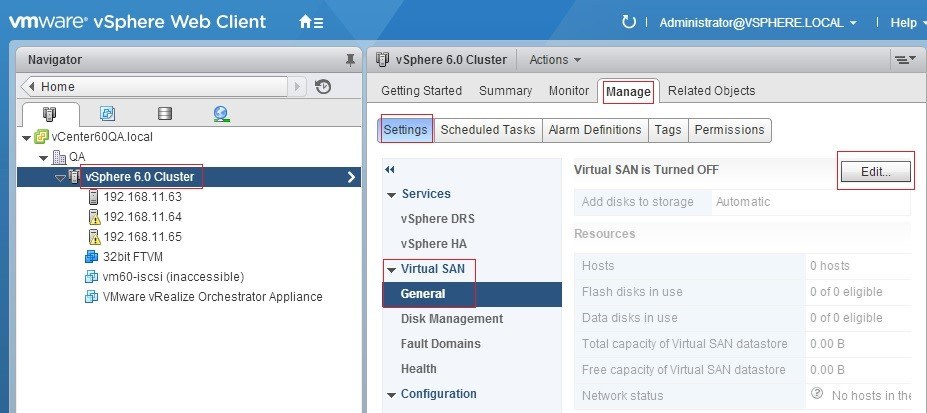

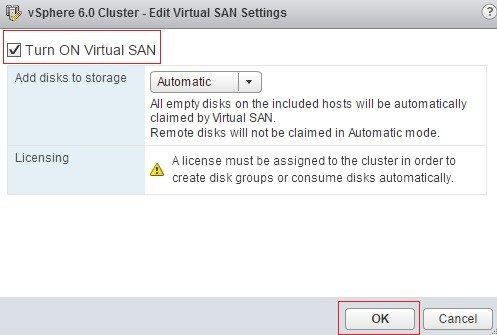

Figure 7 – Allowing VSAN traffic through Figure 8 – Provisioning VSAN

Figure 8 – Provisioning VSAN

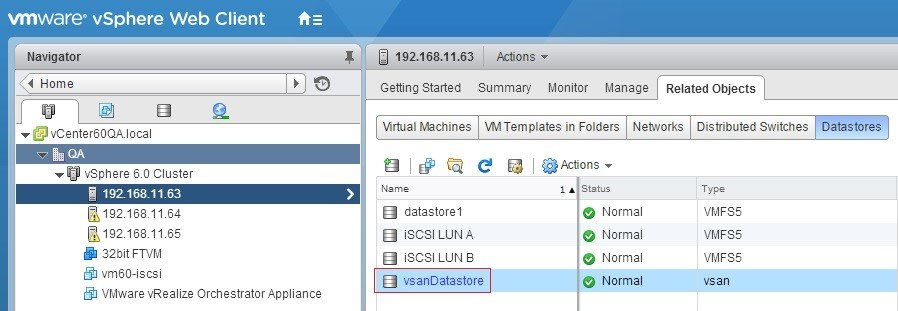

Figure 10 – VSAN datastore

Figure 10 – VSAN datastore

Figure 1 – Ephemeral port binding requirement

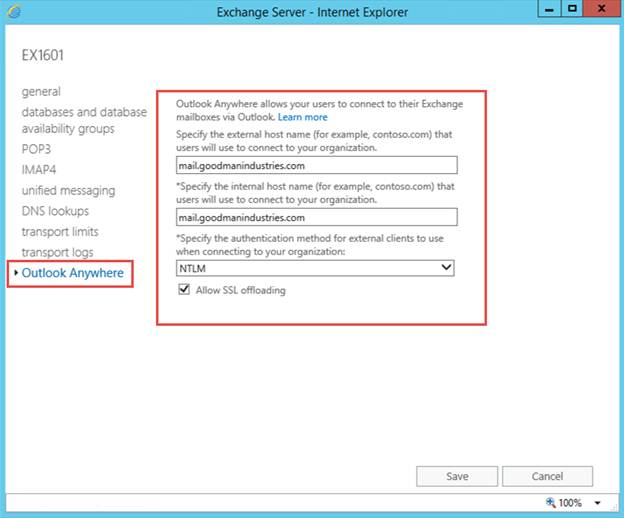

Figure 1 – Ephemeral port binding requirement