Unpack Files

unzip linuxamd64_12102_database_1of2.zip

unzip linuxamd64_12102_database_2of2.zip

You should now have a single directory called "database" containing installation files.
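The two unzip commands can be wrapped in a small script that also confirms the installer is present before moving on. This is a minimal sketch that assumes both zip files sit in the current directory. The function names `unpack_media` and `check_media` are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers: unpack both media archives and confirm the result.

# Unzip both parts into the current directory.
# -q keeps unzip quiet; -o overwrites existing files without prompting.
unpack_media() {
  local zip
  for zip in linuxamd64_12102_database_1of2.zip linuxamd64_12102_database_2of2.zip; do
    unzip -q -o "$zip" || return 1
  done
}

# Succeed only if DIR (default "database") contains an executable runInstaller.
check_media() {
  local dir="${1:-database}"
  [ -x "$dir/runInstaller" ]
}

# Usage (not run automatically):
#   unpack_media && check_media && echo "Media unpacked OK"
```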

Hosts File

The "/etc/hosts" file must contain a fully qualified name for the server.

For example.

127.0.0.1 localhost.example.com localhost

192.168.56.141 fedora25.example.com fedora25
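To confirm the fully qualified name is really present, a quick check can scan the hosts file for it. This is a minimal sketch; the function name `has_fqdn` is hypothetical, and it only looks at host-name fields, not at the IP address column.

```shell
#!/bin/bash
# Hypothetical check: succeed if FQDN appears as a host name (not the address)
# on any non-comment line of the hosts file.
has_fqdn() {
  local file="${1:-/etc/hosts}" fqdn="$2"
  awk -v name="$fqdn" '
    /^[[:space:]]*#/ { next }                                # ignore comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }  # fields 2..NF are names
    END { exit !found }
  ' "$file"
}

# Usage:
#   has_fqdn /etc/hosts fedora25.example.com || echo "FQDN missing from /etc/hosts"
```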

Set Kernel Parameters

Create a file called "/etc/sysctl.d/98-oracle.conf" with the following contents.

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

Run the following command to change the current kernel parameters.

/sbin/sysctl -p /etc/sysctl.d/98-oracle.conf
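After loading the file, it is worth confirming that the running kernel picked up every value. The sketch below compares each `key = value` line with what `sysctl -n` reports. The function name and the `SYSCTL_CMD` override (used only so the check can be dry-run) are hypothetical.

```shell
#!/bin/bash
# Hypothetical check: compare each "key = value" line of a sysctl drop-in file
# with the value the running kernel reports, and print any mismatch.
# SYSCTL_CMD is an illustrative hook (defaults to "sysctl") for dry runs.
check_sysctl_conf() {
  local conf="${1:-/etc/sysctl.d/98-oracle.conf}"
  local cmd="${SYSCTL_CMD:-sysctl}"
  local key value current rc=0
  while IFS='=' read -r key value || [ -n "$key" ]; do
    key="$(printf '%s' "$key" | tr -d '[:space:]')"
    case "$key" in ''|'#'*|';'*) continue ;; esac   # skip blanks and comments
    # xargs collapses runs of spaces/tabs, so "250 32000 100 128" matches
    # the tab-separated form that sysctl prints for kernel.sem.
    value="$(printf '%s' "$value" | xargs)"
    current="$("$cmd" -n "$key" 2>/dev/null | xargs)"
    if [ "$value" != "$current" ]; then
      echo "$key: file has '$value', kernel has '$current'"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}

# Usage:
#   check_sysctl_conf /etc/sysctl.d/98-oracle.conf && echo "All values applied"
```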

Add the following lines to the "/etc/security/limits.conf" file.

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768
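If you script this step, appending blindly will duplicate the entries every time the script runs. A minimal sketch that appends each line only when it is not already present might look like this; `append_once` and `add_oracle_limits` are hypothetical names.

```shell
#!/bin/bash
# Hypothetical helper: append LINE to FILE only if that exact line is absent,
# so re-running the setup does not duplicate entries.
append_once() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Add the oracle limits listed above (run as root against the real file).
add_oracle_limits() {
  local file="${1:-/etc/security/limits.conf}" entry
  for entry in \
    "oracle soft nofile 1024"  "oracle hard nofile 65536" \
    "oracle soft nproc 2047"   "oracle hard nproc 16384" \
    "oracle soft stack 10240"  "oracle hard stack 32768"; do
    append_once "$file" "$entry"
  done
}
```

The limits apply to new login sessions, so after logging in again as the oracle user, `ulimit -Sn` and `ulimit -Hn` should report 1024 and 65536.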

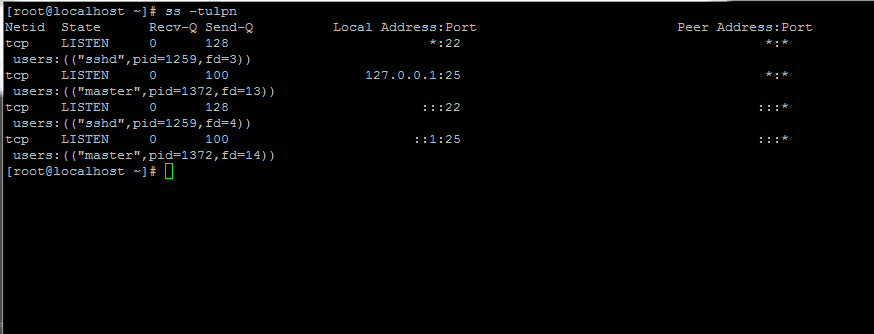

Stop and disable the firewall. You can configure it later if you wish.

systemctl stop firewalld

systemctl disable firewalld

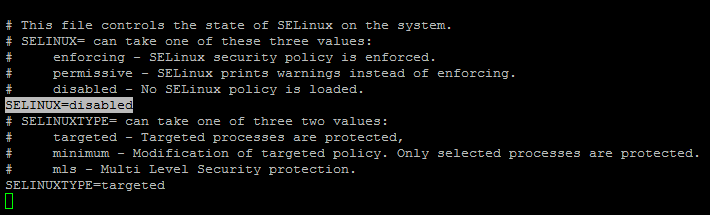

Set SELinux to permissive by editing the "/etc/selinux/config" file, making sure the SELINUX flag is set as follows.

SELINUX=permissive

The server will need a reboot for the change to take effect.
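The edit can also be made non-interactively. This is a sketch that assumes GNU sed (as shipped with Fedora); the function name `set_selinux_mode` is hypothetical. The pattern only touches the `SELINUX=` line, so `SELINUXTYPE=` is left alone.

```shell
#!/bin/bash
# Hypothetical helper: set the SELINUX= line in an SELinux config file.
# Uses GNU sed's in-place editing (-i), as found on Fedora.
set_selinux_mode() {
  local mode="$1" file="${2:-/etc/selinux/config}"
  case "$mode" in enforcing|permissive|disabled) ;; *) return 1 ;; esac
  sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}

# Usage (as root):
#   set_selinux_mode permissive
#   setenforce 0   # optional: switch the running system now rather than waiting for the reboot
#   getenforce     # prints the current mode
```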

Setup

Before we consider the packages required by the Oracle installation, it's probably worth making sure some basic package groups are installed.

dnf groupinstall "MATE Desktop" -y

dnf groupinstall "Development Tools" -y

dnf groupinstall "Administration Tools" -y

dnf groupinstall "System Tools" -y

dnf install firefox -y

If you have installed the suggested package groups, most of the necessary packages will already be present. The following packages are listed as required, including the 32-bit (i686) versions of some of them.

dnf install binutils -y

dnf install compat-libstdc++-33 -y

dnf install compat-libstdc++-33.i686 -y

dnf install gcc -y

dnf install gcc-c++ -y

dnf install glibc -y

dnf install glibc.i686 -y

dnf install glibc-devel -y

dnf install glibc-devel.i686 -y

dnf install ksh -y

dnf install libgcc -y

dnf install libgcc.i686 -y

dnf install libstdc++ -y

dnf install libstdc++.i686 -y

dnf install libstdc++-devel -y

dnf install libstdc++-devel.i686 -y

dnf install libaio -y

dnf install libaio.i686 -y

dnf install libaio-devel -y

dnf install libaio-devel.i686 -y

dnf install libXext -y

dnf install libXext.i686 -y

dnf install libXtst -y

dnf install libXtst.i686 -y

dnf install libX11 -y

dnf install libX11.i686 -y

dnf install libXau -y

dnf install libXau.i686 -y

dnf install libxcb -y

dnf install libxcb.i686 -y

dnf install libXi -y

dnf install libXi.i686 -y

dnf install make -y

dnf install sysstat -y

dnf install unixODBC -y

dnf install unixODBC-devel -y

dnf install zlib-devel -y
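Since dnf accepts several package names in one call, the individual commands above can be collapsed into a single install, and rpm can report which packages are still missing. This is a minimal sketch; the array and function names are hypothetical.

```shell
#!/bin/bash
# Hypothetical package list: the same packages as the commands above.
ORACLE_PACKAGES=(
  binutils compat-libstdc++-33 compat-libstdc++-33.i686 gcc gcc-c++
  glibc glibc.i686 glibc-devel glibc-devel.i686 ksh
  libgcc libgcc.i686 libstdc++ libstdc++.i686 libstdc++-devel libstdc++-devel.i686
  libaio libaio.i686 libaio-devel libaio-devel.i686
  libXext libXext.i686 libXtst libXtst.i686 libX11 libX11.i686
  libXau libXau.i686 libxcb libxcb.i686 libXi libXi.i686
  make sysstat unixODBC unixODBC-devel zlib-devel
)

# Install everything in one dnf transaction (run as root).
install_oracle_packages() {
  dnf install -y "${ORACLE_PACKAGES[@]}"
}

# Print each package that rpm does not report as installed.
missing_oracle_packages() {
  local pkg
  for pkg in "${ORACLE_PACKAGES[@]}"; do
    rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}
```

An empty result from `missing_oracle_packages` means every listed package is installed.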

Create the new groups and users.

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

#groupadd -g 54324 backupdba

#groupadd -g 54325 dgdba

#groupadd -g 54326 kmdba

#groupadd -g 54327 asmdba

#groupadd -g 54328 asmoper

#groupadd -g 54329 asmadmin

useradd -u 54321 -g oinstall -G dba,oper oracle

passwd oracle

We are not going to use the extra groups, but uncomment them if you do plan on using them.

Create the directories in which the Oracle software will be installed.

mkdir -p /u01/app/oracle/product/12.1.0.2/db_1

chown -R oracle:oinstall /u01

chmod -R 775 /u01

Putting mount points directly under root is typically a bad idea. It's done here for simplicity, but for a real installation "/" should be reserved for the OS.
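A quick way to confirm the ownership and permissions took effect is to compare the output of `stat` against the expected values. This sketch assumes GNU coreutils `stat` (`-c` with `%U`, `%G`, `%a`); the function name `check_dir` is hypothetical.

```shell
#!/bin/bash
# Hypothetical check: confirm a directory has the expected owner, group and mode.
# Uses GNU stat's -c format (%U user, %G group, %a octal mode).
check_dir() {
  local dir="$1" owner="$2" group="$3" mode="$4" actual
  actual="$(stat -c '%U:%G:%a' "$dir")" || return 1
  [ "$actual" = "$owner:$group:$mode" ] || { echo "$dir is $actual"; return 1; }
}

# Usage:
#   check_dir /u01/app/oracle/product/12.1.0.2/db_1 oracle oinstall 775
```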

If you are using X emulation, log in as root and issue the following command.

xhost +

Edit the "/etc/redhat-release" file replacing the current release information "Fedora release 25" with the following.

redhat release 7
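Because this file has to be put back after the installation, it helps to keep a copy of the original. The sketch below is one way to do that; the function names and the ".orig" backup file are hypothetical. It writes through the path with `cat`/`printf` rather than `mv`, so if "/etc/redhat-release" is a symlink on your system, the link itself is preserved.

```shell
#!/bin/bash
# Hypothetical helpers: save the original release string, write the value the
# installer expects, and put the original back after installation.
fake_release() {
  local file="${1:-/etc/redhat-release}"
  [ -e "$file.orig" ] || cp "$file" "$file.orig"   # keep the first backup only
  printf '%s\n' "redhat release 7" > "$file"
}

restore_release() {
  local file="${1:-/etc/redhat-release}"
  [ -e "$file.orig" ] || return 1
  cat "$file.orig" > "$file" && rm -f "$file.orig"
}

# Usage (as root): fake_release before runInstaller, restore_release afterwards.
```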

Log in as the oracle user and add the following lines at the end of the "/home/oracle/.bash_profile" file.

# Oracle Settings

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=fedora25.example.com

export ORACLE_UNQNAME=cdb1

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/12.1.0.2/db_1

export ORACLE_SID=cdb1

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
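Once you have logged in again (or run `source ~/.bash_profile`), a short check can catch typos in these settings before the installer runs. This is a minimal sketch; the function name `check_oracle_env` is hypothetical, and the ORACLE_HOME directory check relies on the directories created earlier.

```shell
#!/bin/bash
# Hypothetical sanity check for the variables set in .bash_profile.
check_oracle_env() {
  local var rc=0
  for var in ORACLE_BASE ORACLE_HOME ORACLE_SID; do
    if [ -z "${!var}" ]; then echo "$var is not set"; rc=1; fi   # bash indirect expansion
  done
  [ -d "$ORACLE_HOME" ] || { echo "ORACLE_HOME directory missing: $ORACLE_HOME"; rc=1; }
  case ":$PATH:" in
    *":$ORACLE_HOME/bin:"*) ;;
    *) echo "\$ORACLE_HOME/bin is not on PATH"; rc=1 ;;
  esac
  return "$rc"
}
```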

Installation

Log in as the oracle user. If you are using X emulation, set the DISPLAY environment variable.

DISPLAY=:0.0; export DISPLAY

Start the Oracle Universal Installer (OUI) by executing the following command in the database directory.

./runInstaller

Proceed with the installation of your choice. Ignore any warnings about the system configuration.

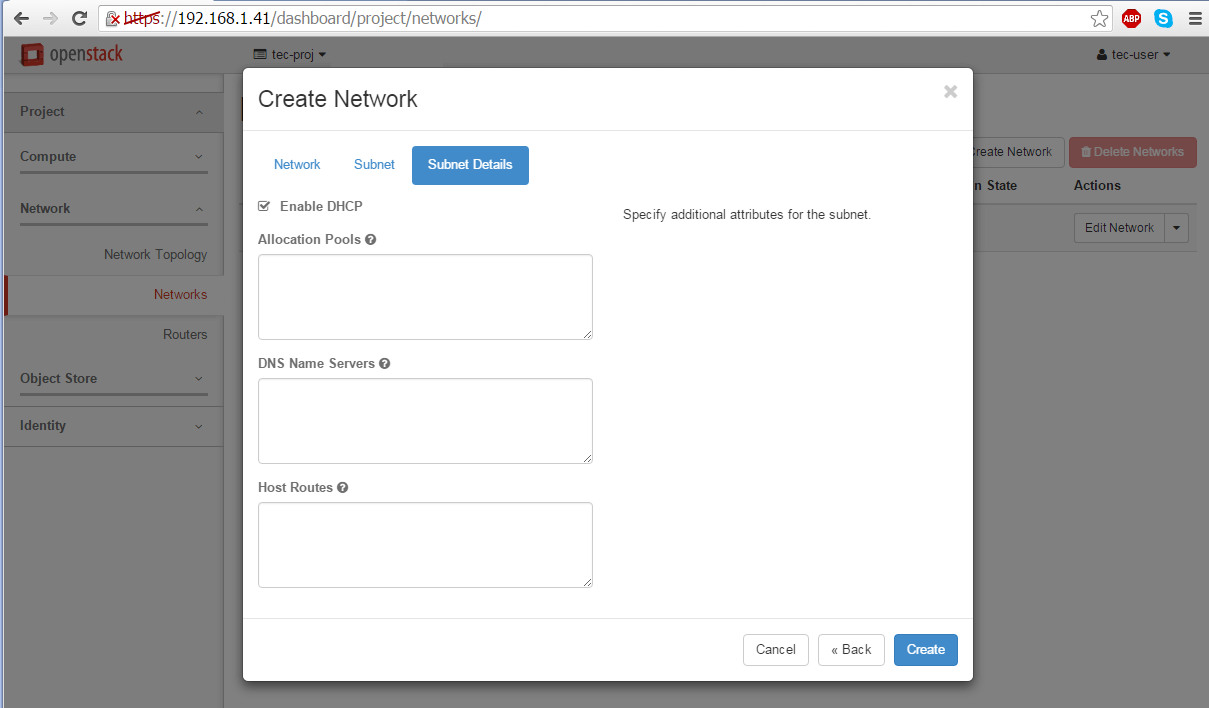

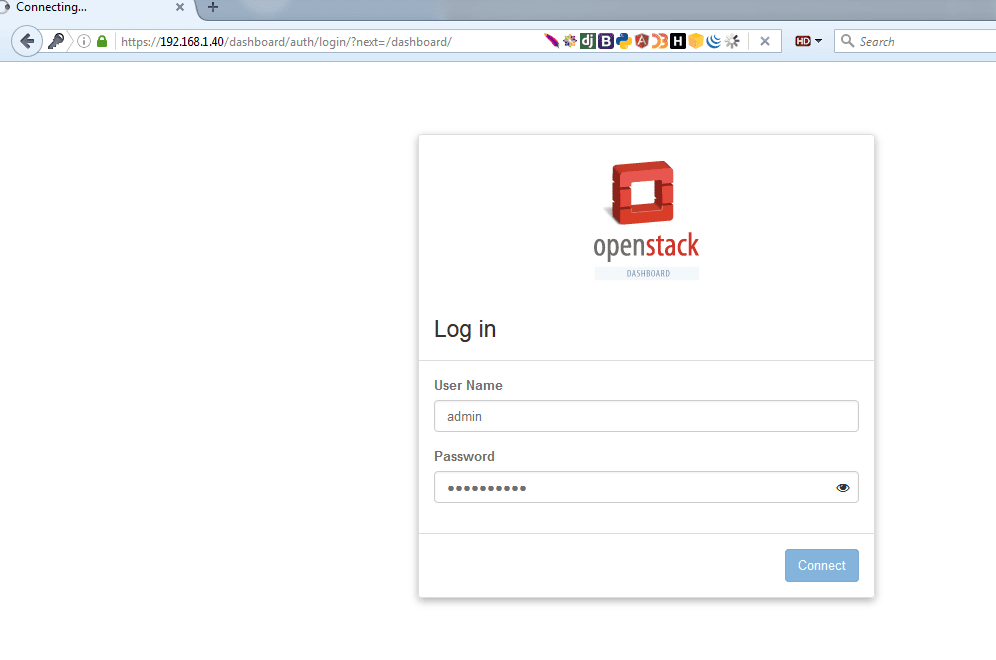

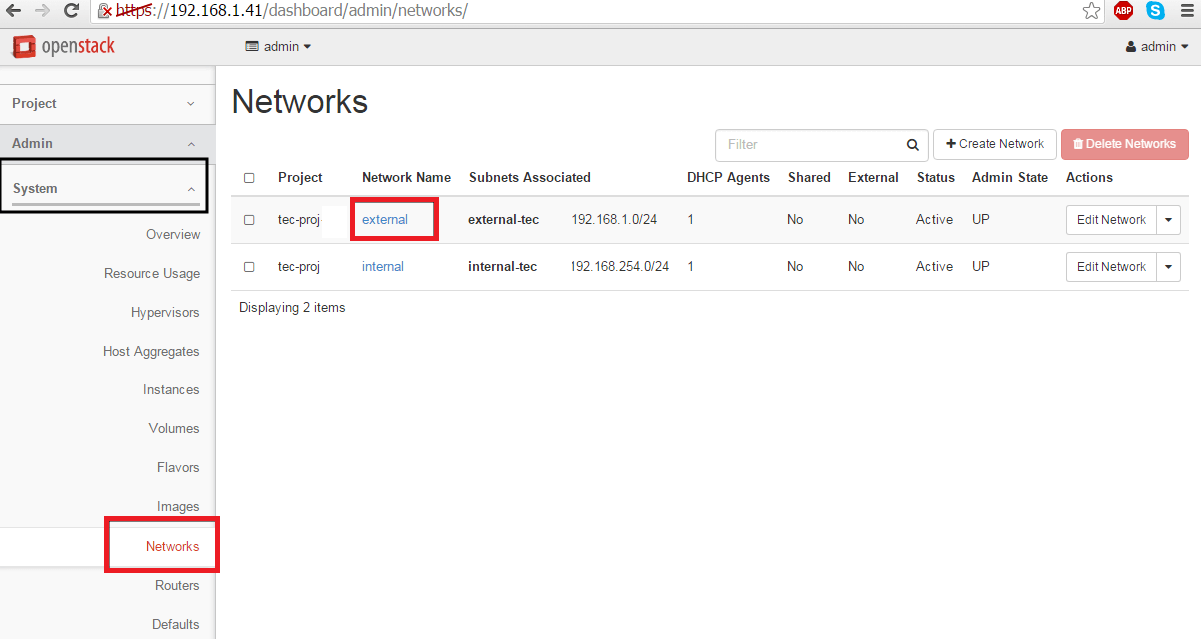

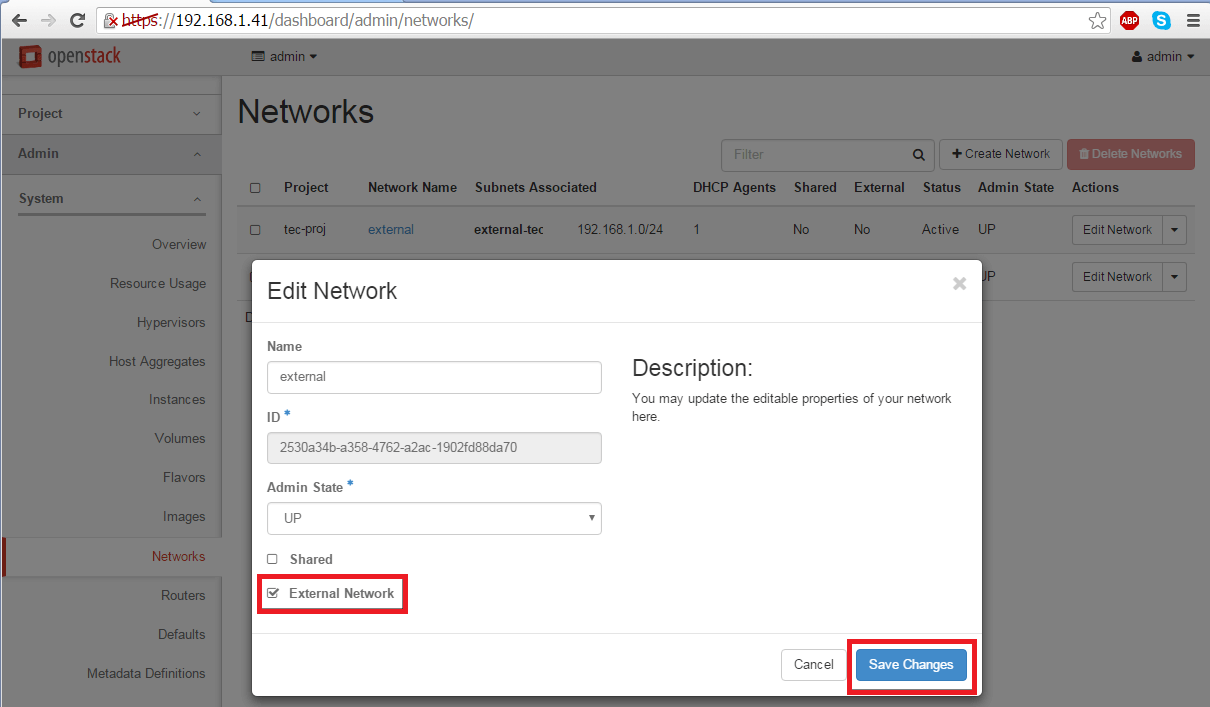

For reference, the screenshots show the type of installation we performed.

A warning about My Oracle Support credentials appears because I don't use My Oracle Support. Click "Yes".

The Database Configuration Assistant has completed. Note down the highlighted URL and click "OK".

Open your web browser and go to the URL noted above.

The Database Express 12c login screen is displayed. Log in with the username and password you specified during the installation.

The Database Express 12c dashboard is displayed.

Installation Problems

If you are doing this installation in a VM on a Mac/PC with a newer chipset, you may encounter some issues, especially around the Perl installation. If so, check the notes below.

During the linking phase, you may see the following error.

Error in invoking target 'irman ioracle' of makefile '/u01/app/oracle/product/12.1.0.2/db_1/rdbms/lib/ins_rdbms.mk'

To fix it, run the following command as the "oracle" user, then click the "Retry" button.

cp $ORACLE_HOME/javavm/jdk/jdk6/lib/libjavavm12.a $ORACLE_HOME/lib/
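If you want the fix to fail loudly when the source archive is not where expected, the copy can be wrapped in a small check. This is a sketch under the assumption that ORACLE_HOME is set as in the profile above; the function name `fix_libjavavm` is hypothetical.

```shell
#!/bin/bash
# Hypothetical wrapper around the copy above: checks that the archive exists
# and copies it only if the target is not already there. Run as oracle.
fix_libjavavm() {
  local home="${1:-$ORACLE_HOME}"
  local src="$home/javavm/jdk/jdk6/lib/libjavavm12.a"
  local dst="$home/lib/libjavavm12.a"
  [ -f "$src" ] || { echo "Not found: $src"; return 1; }
  [ -f "$dst" ] || cp "$src" "$dst"
}

# Usage: fix_libjavavm && echo "Now click Retry in the installer"
```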

During database creation as part of the installation, or later when using DBCA, you may get the following error.

Error while executing "/u01/app/oracle/product/12.1.0.2/db_1/rdbms/admin/dbmssml.sql". Refer to "/u01/app/oracle/cfgtoollogs/dbca/orcl/dbmssml0.log" for more details. Error in Process: /u01/app/oracle/product/12.1.0.2/db_1/perl/bin/perl

To fix it, follow the instructions to rebuild Perl as described towards the end of this post by Laurent Leturgez. You will have to redo the database creation.

Post Installation

Edit the "/etc/redhat-release" file restoring the original release information.

Fedora release 25

Edit the "/etc/oratab" file setting the restart flag for each instance to 'Y'.

cdb1:/u01/app/oracle/product/12.1.0.2/db_1:Y
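The restart flag is the third field of each oratab entry, and it is what `dbstart` reads to decide which instances to start. If you prefer not to edit the file by hand, a sketch like the following flips the flag for a single SID. It assumes GNU sed, and the function name `set_oratab_autostart` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: set the restart flag (third field) to Y for one SID in
# an oratab file, leaving comments and other entries untouched. GNU sed -i.
set_oratab_autostart() {
  local sid="$1" file="${2:-/etc/oratab}"
  # Matches "SID:<oracle_home>:N" exactly and rewrites only the final N.
  sed -i "s/^\(${sid}:[^:]*\):N\$/\1:Y/" "$file"
}

# Usage (as root): set_oratab_autostart cdb1
```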